Rectifier Network

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function [1] [2] is an activation … The rectified linear activation function overcomes the vanishing gradient problem, … In this paper we investigate the family of functions representable by deep neural networks (DNN) with rectified linear … (2013) train rectifier networks with up to 12 hidden layers on a proprietary voice search dataset containing hundreds … It is shown that multiplicative responses can arise in a network model through population effects and suggest that parietal …
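As a rough illustration of the vanishing-gradient remark in the excerpts above, the sketch below compares how a gradient factor shrinks through a chain of sigmoid units versus active ReLU units. It is a simplification under stated assumptions: weights are ignored (treated as 1), only the activation slope is multiplied per layer, and the function names are made up for this example. The depth of 12 is borrowed from the "12 hidden layers" excerpt.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: pass positive inputs through, clamp the rest to zero."""
    return x if x > 0.0 else 0.0


def relu_slope(x: float) -> float:
    """Slope of relu. The value at exactly x == 0 is a convention (0 here)."""
    return 1.0 if x > 0.0 else 0.0


def sigmoid_slope(x: float) -> float:
    """Slope of the logistic sigmoid; it peaks at 0.25 when x == 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def chained_slope(per_layer_slope: float, depth: int) -> float:
    """Product of identical per-layer slopes across `depth` layers.

    Assumption: weights are ignored, so this isolates the activation's effect.
    """
    return per_layer_slope ** depth


if __name__ == "__main__":
    depth = 12  # taken from the "up to 12 hidden layers" excerpt
    print("sigmoid, best case:", chained_slope(sigmoid_slope(0.0), depth))
    print("relu, active units:", chained_slope(relu_slope(1.0), depth))
```

Even in the sigmoid's best case the chained factor is 0.25 raised to the 12th power, while an active ReLU path keeps a factor of 1. A ReLU unit whose input is negative contributes a slope of 0, so the benefit applies only along active paths.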

From www.mdpi.com

Design a rectifier/filter that will produce an output voltage of approximately 30 volts with a maximum current draw of 300 milliamps. It is to be fed from a 120 VAC RMS source. The ripple voltage should be less than 10% of the nominal output voltage at full load.
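One way to approach the design problem above is a first-pass sizing of a capacitor-input filter. The sketch below is not a complete design and rests on several assumptions that the problem statement does not give: a full-wave bridge rectifier, a 60 Hz line frequency, a 0.7 V drop per conducting diode, the ripple limit read as peak-to-peak, and the usual light-ripple approximation V_ripple ≈ I / (f_ripple · C). The function and parameter names are hypothetical.

```python
import math


def size_capacitor_filter(
    v_out: float,            # nominal DC output voltage, volts
    i_load: float,           # full-load current, amps
    ripple_fraction: float,  # allowed ripple as a fraction of v_out
    v_source_rms: float,     # primary (line) voltage, volts RMS
    line_hz: float = 60.0,   # ASSUMPTION: line frequency is not stated in the problem
    diode_drop: float = 0.7, # ASSUMPTION: silicon diode forward drop, volts
) -> dict:
    """First-pass sizing for a full-wave bridge with a capacitor filter."""
    v_ripple = ripple_fraction * v_out   # allowed peak-to-peak ripple
    ripple_hz = 2.0 * line_hz            # full-wave rectification doubles the ripple frequency
    # Light-ripple approximation: the capacitor alone supplies i_load for
    # roughly one ripple period, so delta_V = I * T / C.
    c_min = i_load / (ripple_hz * v_ripple)
    # Peak at the transformer secondary: DC level plus half the ripple,
    # plus two diode drops (two bridge diodes conduct at a time).
    v_sec_peak = v_out + v_ripple / 2.0 + 2.0 * diode_drop
    v_sec_rms = v_sec_peak / math.sqrt(2.0)
    return {
        "r_load_ohms": v_out / i_load,
        "v_ripple_max": v_ripple,
        "c_min_farads": c_min,
        "v_sec_peak": v_sec_peak,
        "v_sec_rms": v_sec_rms,
        "turns_ratio": v_source_rms / v_sec_rms,
    }


if __name__ == "__main__":
    design = size_capacitor_filter(30.0, 0.300, 0.10, 120.0)
    for name, value in design.items():
        print(f"{name}: {value:.6g}")
```

With the stated numbers and these assumptions, the ripple limit is 3 V, the minimum capacitance is about 833 µF, and the secondary needs roughly 23.3 V RMS, a turns ratio near 5.2:1. A real design would round the capacitor up to a standard value and check diode and transformer current ratings.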

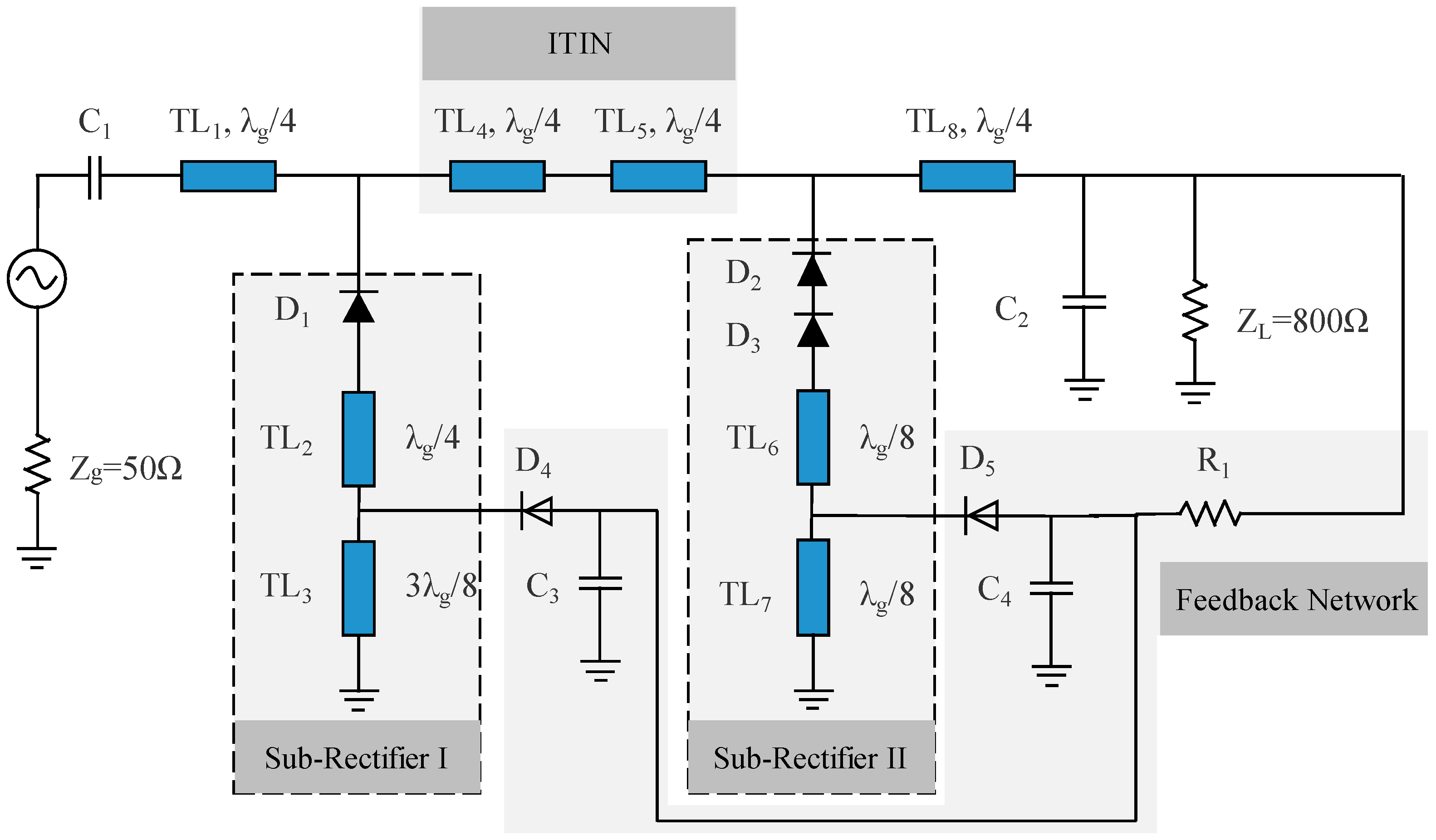

Electronics Free Full-Text A Novel Self-Adaptive Rectifier with High Efficiency and Wide


From www.scribd.com

Exp 1 Group 4 Draft PDF Rectifier Electrical Network

From wiringdbdeggysingeru6.z21.web.core.windows.net

Rectifying Circuit Diagram

From www.youtube.com

Learning a Sparse Rectifier Network for Image Super-Resolution YouTube

From www.researchgate.net

(PDF) A novel self-adaptive rectifier with wide input range

From www.collegesearch.in

Rectifier Definition, Types, Application, Uses and Working Principle CollegeSearch

From www.circuitbread.com

Center-Tapped Full-Wave Rectifier Operation … CircuitBread

From www.pinterest.com

Rectifier Module Network engineer, Hyperrealism, New technology

From www.pinterest.com

Rectifier Module Structured cabling, Data cable, Network rack

From www.pngwing.com

Rectifier Electronic symbol Electronic circuit Diode bridge Electrical network, bridge, angle

From www.alibaba.com

Network Power Module Inverter Rectifier Zte Zxdn01 S302 Buy Zxdn01 S302,Inverter Rectifier

From www.businesswire.com

Global Semiconductor Rectifiers Market 2019-2023 Increasing Focus on High-Speed Network

From www.antagrade.co.uk

Antagrade Network Rail Transformer Rectifiers

From www.slideteam.net

Rectifier Function In A Neural Network Training Ppt

From sra.vjti.info

Phase Control Rectifiers Explained in 2 Minutes Society of Robotics & Automation

From wiringfixinblewstudiospc.z21.web.core.windows.net

Rectifier Circuit Diagram With Explanation

From www.youtube.com

What is a Full Wave Rectifier? Centre-tapped and Bridge full wave rectifier circuit and working

From electrical-engineering-portal.com

Network harmonics analysis Installation with 6-pulse rectifier, capacitors & filters EEP

From www.scribd.com

21BCE5419 Exp3 PDF Rectifier Electrical Network

From www.poweringthenetwork.com

DC Power Rectifiers and Power Supplies 12V DC 24V DC 48V DC 150 1000 Watts Rack

From www.mdpi.com

Electronics Free Full-Text A Novel Self-Adaptive Rectifier with High Efficiency and Wide

From www.scribd.com

Exp8 Simulationmew PDF Rectifier Electrical Network

From www.researchgate.net

The voltage doubler rectifier topology (a) with a Matching Network (b)... Download Scientific

From www.scribd.com

Half-Wave Rectifiers PDF Rectifier Electrical Network

From www.pinterest.com

an electronic device with many buttons and numbers on the front side, in black plastic case

From engineeringtutorial.com

Full Wave Bridge Rectifier Operation Engineering Tutorial

From exobarygp.blob.core.windows.net

Diode Rectifier Circuit Discussion at Francesca Harris blog

From uniquemande.en.made-in-china.com

24V 240W Micropack Rectifiers 5g Network Equipment Eltek Rectifier Module 241120.100 China

From www.pinterest.com

Rectifier Module Network engineer, Hyperrealism, Technology

From www.oltont.com

Emerson R481800A network power rectifier module with 48V 1800W

From www.scribd.com

02 PDF Rectifier Electrical Network

From www.collegesearch.in

Rectifier Definition, Types, Application, Uses and Working Principle CollegeSearch

From studylib.net

NPR48 Network Power Rectifier

From www.homemade-circuits.com

How to Make a Bridge Rectifier Homemade Circuit Projects

From www.researchgate.net

Three-phase rectifier with passive network. (a) Block diagram. (b) Circuit. Download

From www.chegg.com

Construct the following Bridge Rectifier Network as