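What Is an Ergodic Markov Chain?

The word "ergodic" is used with slightly different meanings in different texts. In the broadest usage, a Markov chain is called an ergodic or irreducible Markov chain if it is possible to eventually get from every state to every other state (not necessarily in one move).

Under a stricter convention, an ergodic Markov chain is an aperiodic Markov chain, all states of which are positive recurrent (it is usually also required to be irreducible). Ergodic Markov chains in this sense are a specific type of Markov chain where, regardless of the initial state, the distribution of the system eventually converges to a unique steady-state (stationary) distribution. It turns out that if the chain …

For a finite chain, the stricter notion has a simple matrix test: the chain is ergodic exactly when some power of the transition matrix has only positive elements. In other words, for some \(n\), it is possible to go from any state to any state in exactly \(n\) steps. Such a chain is also called regular.

Some texts use a related finite-state criterion: a Markov chain is ergodic if and only if it has at most one recurrent class and is aperiodic. Because every finite chain has at least one recurrent class, this means exactly one recurrent class, which must be aperiodic; transient states are allowed. Many probabilities and expected values can be …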
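The conventions above can be checked mechanically for a small finite chain. The sketch below is a minimal illustration in plain Python (no NumPy), assuming the chain is given as a row-stochastic list-of-lists matrix; `is_irreducible` and `is_regular` are hypothetical helper names, not a library API. `is_irreducible` tests the broad definition (every state reaches every other state), and `is_regular` tests whether some power of the transition matrix is strictly positive, using Wielandt's bound that for a k-state chain it is enough to check powers up to (k - 1)^2 + 1.

```python
from typing import List

Matrix = List[List[float]]


def is_irreducible(p: Matrix) -> bool:
    """True if every state can eventually reach every other state."""
    k = len(p)
    for start in range(k):
        # Depth-first search over transitions with positive probability.
        seen = {start}
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(k):
                if p[i][j] > 0 and j not in seen:
                    seen.add(j)
                    stack.append(j)
        if len(seen) != k:
            return False
    return True


def _bool_mul(a: List[List[bool]], b: List[List[bool]]) -> List[List[bool]]:
    """Boolean matrix product: (i, j) is True if some path i -> m -> j exists."""
    n = len(a)
    return [[any(a[i][m] and b[m][j] for m in range(n)) for j in range(n)]
            for i in range(n)]


def is_regular(p: Matrix) -> bool:
    """True if some power P^n has only positive entries (finite chains only)."""
    k = len(p)
    # Only the zero/non-zero pattern matters, so track booleans instead of
    # probabilities; this avoids floating-point underflow in high powers.
    pattern = [[x > 0 for x in row] for row in p]
    power = pattern
    # Wielandt's bound: a primitive k x k matrix has a positive power
    # no later than (k - 1)**2 + 1, so checking up to that power suffices.
    for _ in range((k - 1) ** 2 + 1):
        if all(all(row) for row in power):
            return True
        power = _bool_mul(power, pattern)
    return False


if __name__ == "__main__":
    flip = [[0.0, 1.0], [1.0, 0.0]]        # irreducible, period 2
    lazy = [[0.0, 1.0], [0.5, 0.5]]        # irreducible and aperiodic
    absorbing = [[1.0, 0.0], [0.5, 0.5]]   # state 0 absorbing, state 1 transient
    for name, p in [("flip", flip), ("lazy", lazy), ("absorbing", absorbing)]:
        print(name, is_irreducible(p), is_regular(p))
```

The deterministic "flip" chain is irreducible (ergodic in the broad sense) but periodic, so no power of its matrix is all-positive and it fails the stricter test. The "absorbing" chain fails both tests, yet it has a single aperiodic recurrent class {0} plus one transient state, so it satisfies the "at most one recurrent class and aperiodic" criterion; which answer counts as "ergodic" depends on the convention in use.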

from www.slideserve.com


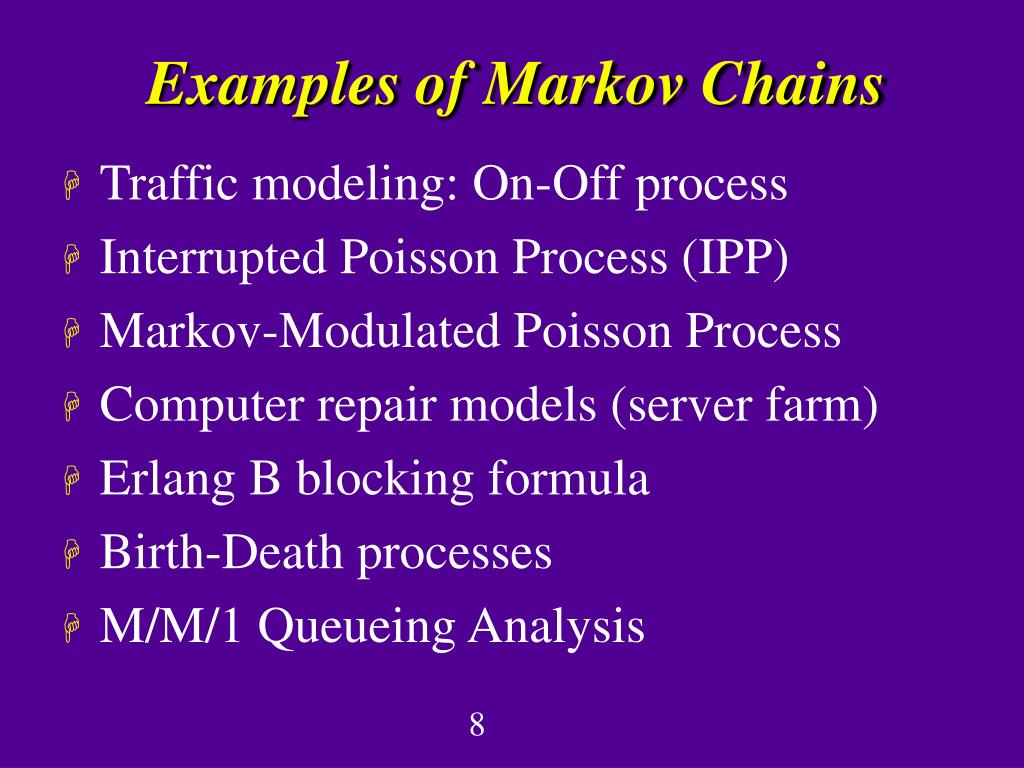

Markov Chains (PowerPoint presentation, ID496296)
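The convergence claim in the definition (regardless of the initial state, the chain settles into a unique steady state) can be seen numerically by repeatedly applying the transition matrix to a starting distribution. This is a minimal plain-Python sketch; the matrix `P` is an arbitrary illustrative example, not taken from the source, and `step`/`evolve` are hypothetical helper names. Solving \(\pi P = \pi\) by hand for this `P` gives \(\pi = (5/6, 1/6)\), and both starting states approach it, while the periodic flip chain keeps oscillating.

```python
from typing import List

Dist = List[float]
Matrix = List[List[float]]


def step(dist: Dist, p: Matrix) -> Dist:
    """One transition: returns the row vector dist @ P."""
    k = len(p)
    return [sum(dist[i] * p[i][j] for i in range(k)) for j in range(k)]


def evolve(dist: Dist, p: Matrix, steps: int) -> Dist:
    """Distribution of the chain after `steps` transitions from `dist`."""
    for _ in range(steps):
        dist = step(dist, p)
    return dist


if __name__ == "__main__":
    # Illustrative ergodic chain; its stationary distribution is (5/6, 1/6).
    P = [[0.9, 0.1], [0.5, 0.5]]
    for start in ([1.0, 0.0], [0.0, 1.0]):
        print(start, "->", evolve(start, P, 50))  # both ≈ [0.8333, 0.1667]

    # Periodic (not ergodic) chain: the distribution alternates forever.
    flip = [[0.0, 1.0], [1.0, 0.0]]
    print(evolve([1.0, 0.0], flip, 50), evolve([1.0, 0.0], flip, 51))
```

Fifty steps is ample here because the distance to \(\pi\) shrinks by a factor of 0.4 per step (the second eigenvalue of this `P` is \(1 - 0.1 - 0.5 = 0.4\)); a more slowly mixing chain would need more steps.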
