From www.researchgate.net

Onehot vector representation and embedding representation for semantic

Torch Embedding One Hot: in PyTorch, torch.nn.Embedding (part of the torch.nn module) is a building block used in neural networks. It is a simple lookup table that stores embeddings of a fixed dictionary and size. This module is often used to store word embeddings and retrieve them using indices. A one-hot encoding, by contrast, represents the word \(w\) by a vector with \(|V|\) elements, where \(V\) is the vocabulary: \[\overbrace{\left[ 0, 0, \dots, 1, \dots, 0, 0 \right]}^{|V| \text{ elements}}\] with the single 1 at the position assigned to \(w\).
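The relationship between the lookup table and the one-hot representation can be sketched in a few lines. This is a minimal illustration, not taken from any of the linked pages: the dictionary size, embedding width and word indices are hypothetical values chosen for the example. It shows that indexing torch.nn.Embedding with integer ids gives the same result as multiplying explicit one-hot vectors by the embedding weight matrix.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

vocab_size = 5      # hypothetical dictionary size |V|
embedding_dim = 3   # hypothetical embedding width

# Lookup table with one learnable row per dictionary entry.
embedding = nn.Embedding(vocab_size, embedding_dim)

# A batch of word indices (integer ids into the dictionary).
word_ids = torch.tensor([0, 2, 4])

# Lookup: selects rows 0, 2 and 4 of the weight matrix.
looked_up = embedding(word_ids)

# Same result via explicit one-hot vectors times the weight matrix.
one_hot = F.one_hot(word_ids, num_classes=vocab_size).float()
via_matmul = one_hot @ embedding.weight

print(looked_up.shape)
print(torch.allclose(looked_up, via_matmul))
```

The lookup avoids materialising the one-hot vectors, which is why an embedding layer is usually preferred once the dictionary grows large.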

From www.youtube.com

torch.nn.Embedding explained (+ Characterlevel language model) YouTube

From blog.csdn.net

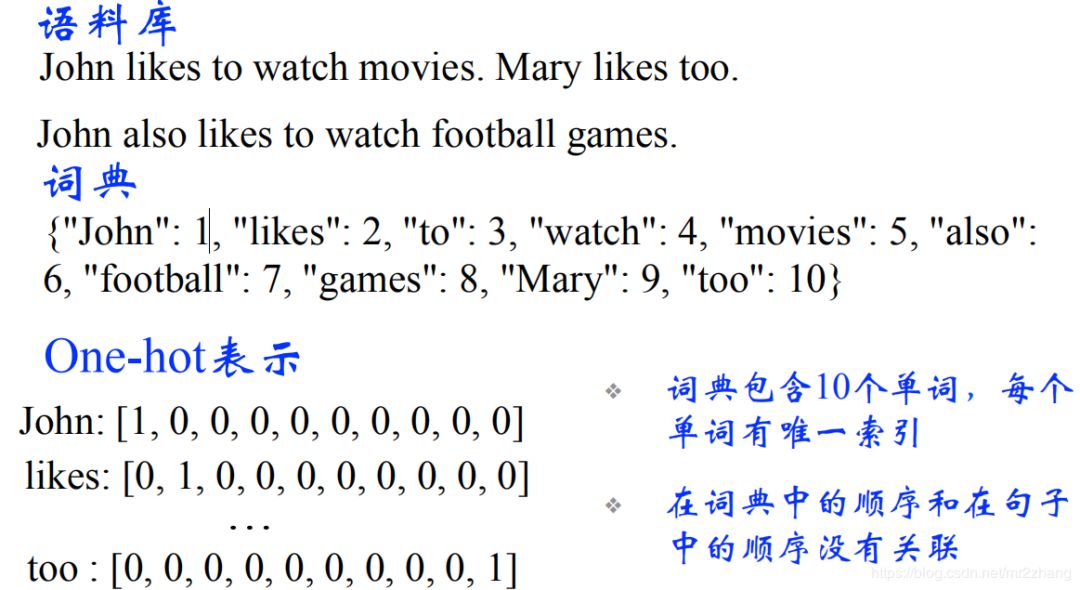

Understanding one-hot and embedding in one article (CSDN blog)

From exoxmgifz.blob.core.windows.net

Torch.embedding Source Code at David Allmon blog

From blog.csdn.net

PyTorch embedding layer explained in detail (from principles to practice), CSDN blog

From www.cnblogs.com

[NLP]embedding——onehot,word2vec,fasttext,Glove,sentence embedding

From github.com

API doc problems of embedding, one_hot · Issue 9056 · PaddlePaddle

From blog.csdn.net

Understanding recommender systems in one article: Retrieval 04: discrete feature processing, one-hot encoding and embedding (CSDN blog)

From www.youtube.com

Feature EngineeringHow to Perform One Hot Encoding for Multi

From www.researchgate.net

Comparison between onehot word vector and word embedding vector

From towardsdatascience.com

Word Embedding in NLP OneHot Encoding and SkipGram Neural Network

From discuss.pytorch.org

Predict a categorical variable and then embed it (onehot?) autograd

From blog.csdn.net

[PyTorch Basics Tutorial 28] A brief discussion of torch.nn.embedding (CSDN blog)

From www.featureform.com

Embeddings in Machine Learning Everything You Need to Know FeatureForm

From blog.51cto.com

[PyTorch Basics Tutorial 28] A brief discussion of torch.nn.embedding (51CTO blog)

From www.youtube.com

OneHot Encoding and Word embedding Natural Language Processing in

From blog.gopenai.com

Difference between OneHot Encoding vs Embedding by Dr. Alvin Ang

From www.youtube.com

EMB1/ Onehot encoding et Embedding YouTube

From today-gaze-697915.framer.app

What is an embedding, and how is it used? Syncly

From www.sohu.com

From one-hot and word embedding to Transformer: understanding BERT step by step (Sohu)

From bbs.huaweicloud.com

From one-hot and word embedding to Transformer: understanding BERT step by step (Huawei Cloud community)

From blog.csdn.net

06_1. How to represent strings in PyTorch: word embedding, one-hot, Embedding (Word2vec, BERT

From coderzcolumn.com

How to Use GloVe Word Embeddings With PyTorch Networks?

From heung-bae-lee.github.io

What is an embedding? DataLatte's IT Blog

From zhuanlan.zhihu.com

Usage of Torch.nn.Embedding, Zhihu

From www.tensorflow.org

Word embeddings Text TensorFlow

From blog.csdn.net

Understanding word embedding in one article (2 algorithms + comparison with other text representations), weixin_43612023's blog, CSDN blog

From www.researchgate.net

Two sequence encoding models used in CRISPR/Cas9 onehot encoding and