PySpark RDD numPartitions

`RDD.getNumPartitions() → int` returns the number of partitions in an RDD. The documentation example starts from `>>> rdd = sc.parallelize([1, 2, 3, 4], 2)`, where the second argument to `parallelize` sets the number of partitions.

A DataFrame does not expose this method directly. To get the number of partitions in a PySpark DataFrame, call `getNumPartitions()` on the DataFrame's underlying RDD, e.g., `df.rdd.getNumPartitions()`. In the case of Scala, a Spark RDD provides `getNumPartitions`, `partitions.length` and `partitions.size`, all of which return the number of partitions of the current RDD; to use them on a DataFrame, first convert the DataFrame to an RDD with `df.rdd`.

`RDD.repartition(numPartitions: int) → pyspark.rdd.RDD[T]` returns a new RDD that has exactly `numPartitions` partitions. The `pyspark.sql.DataFrame.repartition()` method is used to increase or decrease the number of DataFrame partitions, either by a number of partitions or by a single column name or multiple column names. This function takes 2 parameters, `numPartitions` and `*cols`; when one is specified the other is optional. When `numPartitions` is given, it returns a new DataFrame that has exactly `numPartitions` partitions. `repartition()` is a wide transformation, meaning it shuffles data across partitions. `DataFrame.coalesce()` is similar to `coalesce` defined on an RDD and can only reduce the number of partitions.

From data-flair.training

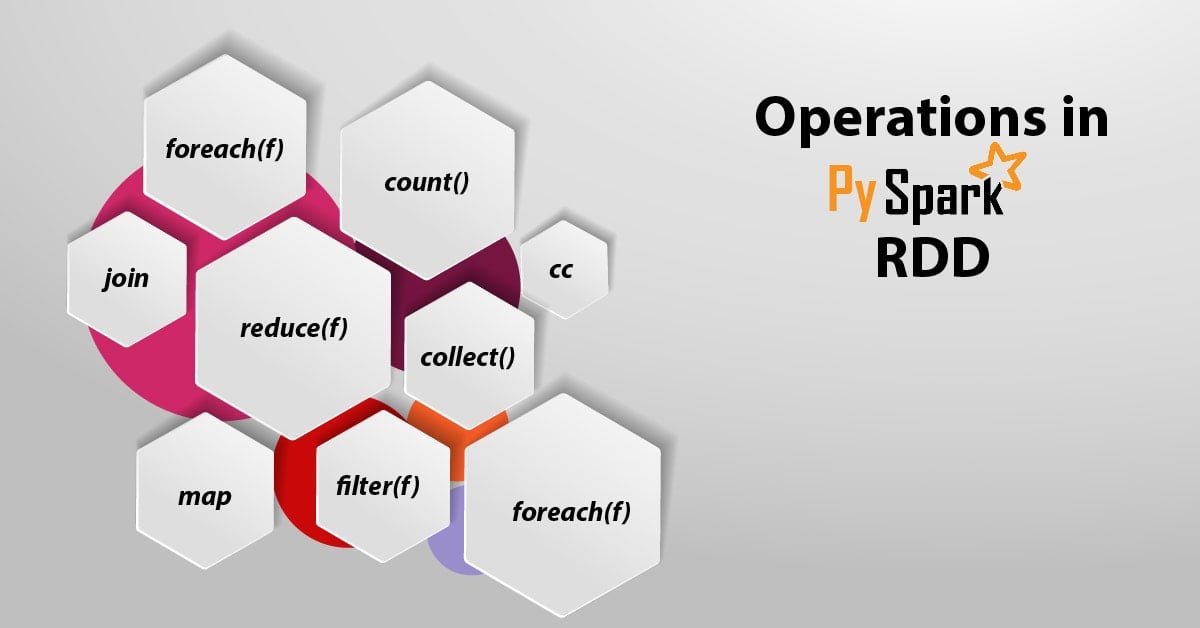

PySpark RDD With Operations and Commands DataFlair

From medium.com

Spark RDD (Low Level API) Basics using Pyspark by Sercan Karagoz

From www.projectpro.io

PySpark RDD Cheat Sheet A Comprehensive Guide

From blog.csdn.net

PySpark RDD groupBy, groupByKey, cogroup, groupWith usage

From blog.csdn.net

Detailed explanation of RDD data output in PySpark (CSDN blog)

From blog.csdn.net

[Python] PySpark data computation ① (RDD map method, RDD map syntax, passing a regular function, passing a lambda

From www.projectpro.io

How to sample records using PySpark

From blog.csdn.net

PySpark data analysis basics: the core dataset RDD, its principles and operations explained in one article (Part 1) (CSDN blog)

From blog.csdn.net

pyspark.RDD aggregate operation explained in detail (CSDN blog)

From annefou.github.io

Introduction to bigdata using PySpark Introduction to (Py)Spark

From loensgcfn.blob.core.windows.net

Rdd.getnumpartitions Pyspark at James Burkley blog

From blog.csdn.net

PySpark data analysis basics: common function operations on the core dataset RDD explained in one article (Part 3) (CSDN blog)

From blog.csdn.net

Python Big Data with PySpark (5): RDD explained in detail (CSDN blog)

From giobtyevn.blob.core.windows.net

Df Rdd Getnumpartitions Pyspark at Lee Lemus blog

From www.youtube.com

How to use distinct RDD transformation in PySpark PySpark 101 Part

From fyodlejvy.blob.core.windows.net

How To Create Rdd From Csv File In Pyspark at Patricia Lombard blog

From blog.csdn.net

11: Summary of PySpark RDD transformation and action operators (CSDN blog)

From sparkbyexamples.com

PySpark Create RDD with Examples Spark by {Examples}

From ittutorial.org

PySpark RDD Example IT Tutorial

From www.youtube.com

Tutorial 7 PySpark RDD GroupBy function and Reading Documentation

From www.oreilly.com

1. Introduction to Spark and PySpark Data Algorithms with Spark [Book]

From medium.com

Pyspark RDD. Resilient Distributed Datasets (RDDs)… by Muttineni Sai

From zhuanlan.zhihu.com

PySpark in Action 17: Extending PySpark with Python: RDDs and user-defined functions (1) (Zhihu)

From www.youtube.com

What is PySpark RDD II Resilient Distributed Dataset II PySpark II

From sparkbyexamples.com

Convert PySpark RDD to DataFrame Spark By {Examples}

From downloads.apache.org

pyspark.RDD.aggregateByKey - PySpark 3.1.3 documentation