What Is Not A Markov Chain. A Markov chain describes a system whose state changes over time. The changes are not completely predictable, but rather are governed by probability: it is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. The process was first studied by a Russian mathematician, Andrey Markov. Formally, the Markov chain is the process $X_0, X_1, X_2, \dots$, and the state of a Markov chain at time $t$ is the value of $X_t$. For example, if $X_t = 6$, we say the process is in state 6 at time $t$. Definition 12.1: the sequence $X$ is called a Markov chain if it satisfies the Markov property, $P(X_{n+1} = i_{n+1} \mid X_0 = i_0, \dots, X_n = i_n) = P(X_{n+1} = i_{n+1} \mid X_n = i_n)$. In other words, a Markov chain essentially consists of a set of transitions, determined by some probability distribution, that satisfy the Markov property; such a process or experiment is called a Markov chain or Markov process. A standard setting for asking what is and is not a Markov chain: a fair coin is tossed repeatedly with results $y_0, y_1, y_2, \dots$ that are either $0$ or $1$ with probability $1/2$.
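To make the Markov property concrete, here is a minimal sketch built on the coin-toss setup above. The derived process $x_n = y_n + y_{n-1}$ is an assumption chosen for illustration (the text gives only the tosses themselves), and the function name `conditional_prob` is hypothetical. The code enumerates all equally likely outcomes of four tosses and compares the probability of the next value given the same present value but two different pasts. If the process were a Markov chain, the two numbers would agree.

```python
from fractions import Fraction
from itertools import product


def conditional_prob(next_value, current, previous):
    """Exact P(x_3 = next_value | x_2 = current, x_1 = previous).

    Assumed illustrative process: x_n = y_n + y_{n-1}, where the y_k are
    independent fair coin tosses taking the values 0 or 1.
    """
    matching = 0    # outcomes consistent with the conditioning event
    favourable = 0  # of those, outcomes where x_3 equals next_value
    for y in product((0, 1), repeat=4):  # all 16 equally likely (y_0..y_3)
        x1, x2, x3 = y[0] + y[1], y[1] + y[2], y[2] + y[3]
        if x2 == current and x1 == previous:
            matching += 1
            if x3 == next_value:
                favourable += 1
    return Fraction(favourable, matching)


# Same present (x_2 = 1), different pasts (x_1 = 0 versus x_1 = 2).
p_after_low = conditional_prob(2, current=1, previous=0)
p_after_high = conditional_prob(2, current=1, previous=2)

print(p_after_low, p_after_high)
```

The two conditional probabilities differ, so under this assumption the process $x_n$ depends on more than its current value and is not a Markov chain, even though the underlying tosses $y_n$ trivially are. Exact fractions are used instead of simulation so that the comparison does not depend on sampling noise.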

From www.chegg.com


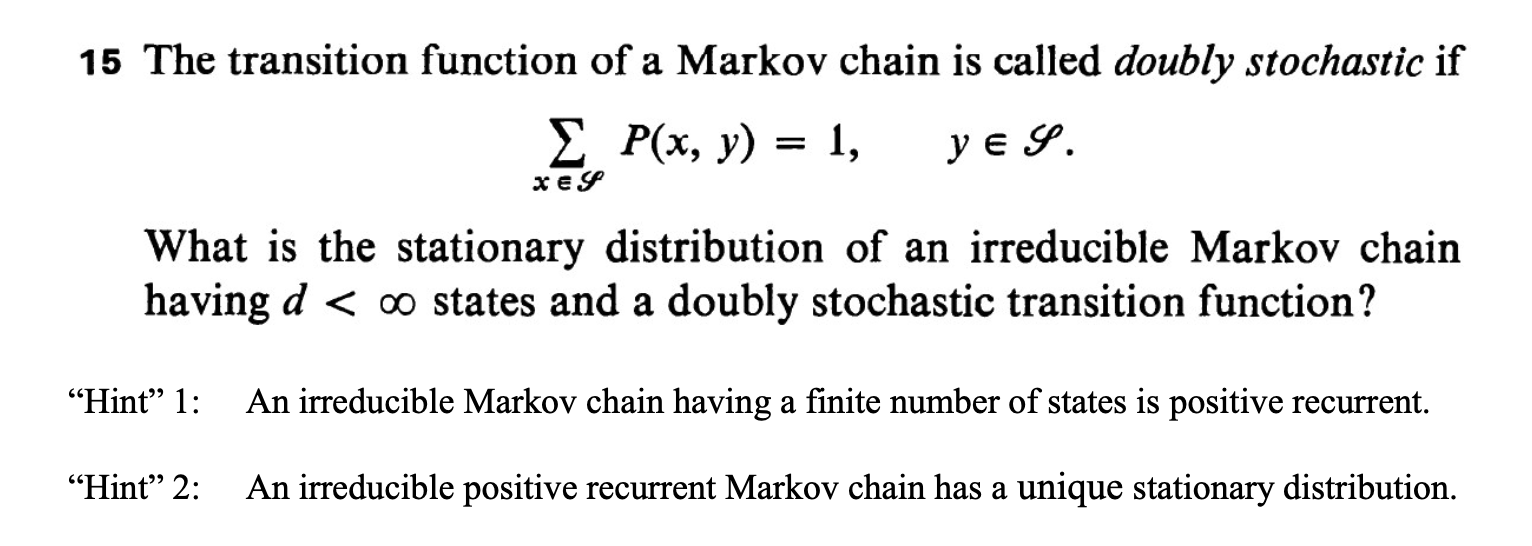

Solved 15 The transition function of a Markov chain is


From math.stackexchange.com

stochastic processes A question regarding Markov Chains Mathematics

From towardsdatascience.com

A brief introduction to Markov chains Towards Data Science

From bookdown.org

Chapter 8 Stochastic Process and Markov Chains LectureNotes.knit

From www.latentview.com

Markov Chain Overview Characteristics & Applications

From www.chegg.com

A Markov chain has a transition probability matrix

From www.researchgate.net

Markov chains a, Markov chain for L = 1. States are represented by

From www.slideserve.com

PPT Chapter 17 Markov Chains PowerPoint Presentation, free download

From www.coursehero.com

[Solved] Transition Probability 2. A Markov chain with state space {1

From brilliant.org

Markov Chains Stationary Distributions Practice Problems Online

From www.slideserve.com

PPT Markov Models PowerPoint Presentation, free download ID2389554

From gregorygundersen.com

A Romantic View of Markov Chains

From www.slideserve.com

PPT Hidden Markov Model PowerPoint Presentation, free download ID

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From www.slideserve.com

PPT Markov Chain Part 1 PowerPoint Presentation, free download ID

From www.analyticsvidhya.com

A Comprehensive Guide on Markov Chain Analytics Vidhya

From www.slideshare.net

Markov Chains

From www.chegg.com

Solved 16. Which of the following Markov chains with

From medium.com

Demystifying Markov Clustering. Introduction to markov clustering… by

From www.chegg.com

Consider a Markov chain on Ω={1,2,3,4,5,6} specified

From dataaspirant.com

markov chain simulation

From www.youtube.com

Matrix Limits and Markov Chains YouTube

From towardsdatascience.com

Markov Chain Models in Sports. A model describes mathematically what

From www.youtube.com

Markov Chains Recurrence, Irreducibility, Classes Part 2 YouTube

From stats.stackexchange.com

How can i identify wether a Markov Chain is irreducible? Cross Validated

From medium.com

Markov Chain Medium

From www.slideserve.com

PPT Bayesian Methods with Monte Carlo Markov Chains II PowerPoint

From fyoxcrinc.blob.core.windows.net

What Is Stationary Distribution Of Markov Chain at Leonard Sales blog

From www.geeksforgeeks.org

Markov Chains in NLP

From studylib.net

Solutions Markov Chains 1

From www.chegg.com

Solved Problem 1. A Markov chain has states {0,1,2,3,4}. The

From www.youtube.com

Markov Chains VISUALLY EXPLAINED + History! YouTube

From www.shiksha.com

Markov Chain Types, Properties and Applications Shiksha Online

From www.youtube.com

L24.4 DiscreteTime FiniteState Markov Chains YouTube