Databricks View Mount Points

Databricks mounts create a link between a workspace and cloud object storage, which enables you to interact with cloud object storage. Mount points serve as a bridge, linking the Databricks File System (DBFS) to cloud object storage such as Azure Data Lake Storage Gen2 (ADLS Gen2) or Amazon S3. To see which mount points are available in your cluster, use the Databricks filesystem commands: run dbutils.fs.mounts() in a notebook cell, or use the %fs mounts magic command, which lists all the mount points.
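As a minimal sketch of working with that listing: the entries returned by dbutils.fs.mounts() expose mountPoint and source attributes, so they can be filtered like any other Python objects. The helper name list_user_mounts, the /mnt/ prefix default, and the sample storage paths below are illustrative assumptions, and a namedtuple stands in for the real entries so the snippet also runs outside Databricks.

```python
from collections import namedtuple

# Stand-in for the entries returned by dbutils.fs.mounts(); the real
# objects expose mountPoint and source attributes in the same way.
MountInfo = namedtuple("MountInfo", ["mountPoint", "source", "encryptionType"])


def list_user_mounts(mounts, prefix="/mnt/"):
    """Return sorted (mountPoint, source) pairs for mounts under `prefix`.

    `list_user_mounts` and the default prefix are hypothetical, chosen
    only for this illustration.
    """
    return sorted(
        (m.mountPoint, m.source)
        for m in mounts
        if m.mountPoint.startswith(prefix)
    )


# Made-up sample data so the sketch runs anywhere.
sample = [
    MountInfo("/databricks-datasets", "databricks-datasets", ""),
    MountInfo("/mnt/raw", "abfss://raw@examplestorage.dfs.core.windows.net/", ""),
    MountInfo("/mnt/curated", "s3a://example-bucket/curated", ""),
]

for mount_point, source in list_user_mounts(sample):
    print(f"{mount_point} -> {source}")
```

In a Databricks notebook, where dbutils is predefined, the same helper could be called as list_user_mounts(dbutils.fs.mounts()).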

From www.graphable.ai

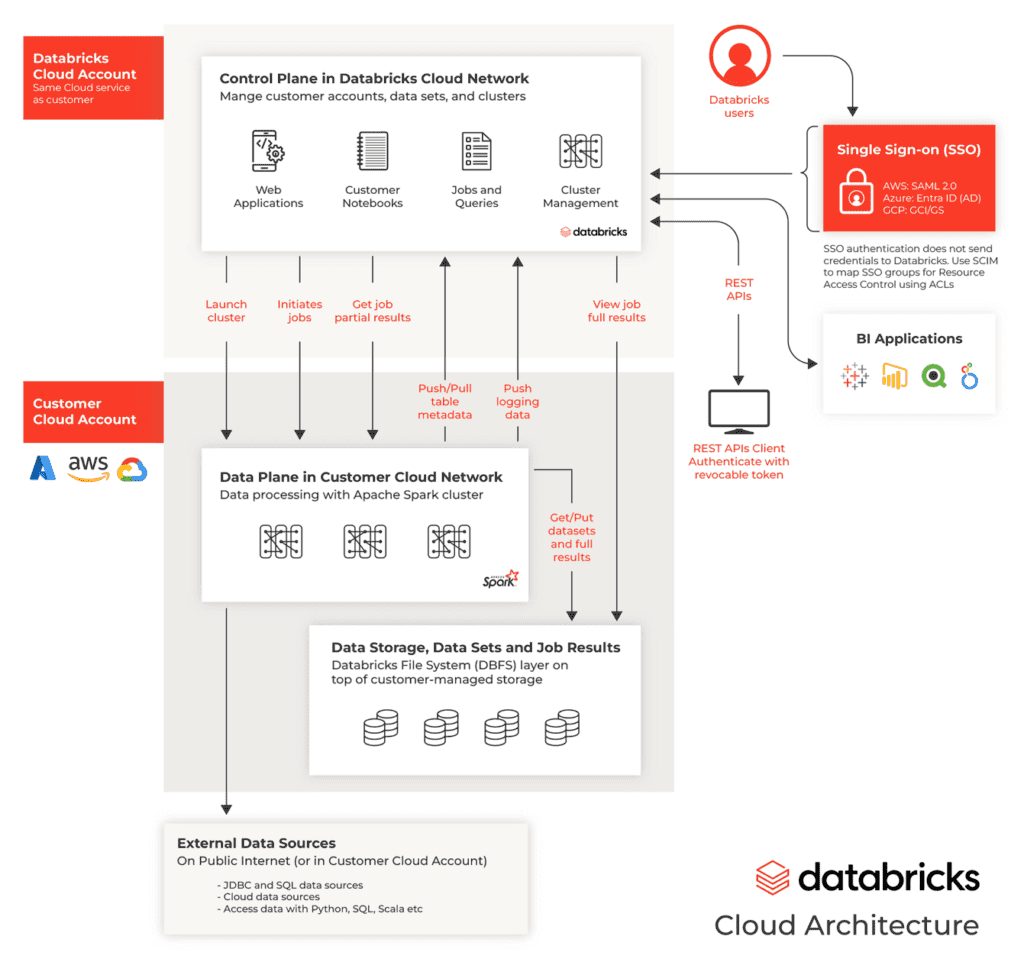

Databricks Architecture A Concise Explanation

From blog.brq.com

Como configurar Mount Points do Azure Data Lake no Azure Databricks (How to configure Azure Data Lake mount points in Azure Databricks)

From doc-iaaslz.mcs.thalesdigital.io

Deploy Azure DataBricks in Private Link scenario dbaas documentation

From azureops.org

Mount and Unmount Data Lake in Databricks AzureOps

From stackoverflow.com

scala How to list all the mount points in Azure Databricks? Stack

From grabngoinfo.com

Databricks Mount To AWS S3 And Import Data Grab N Go Info

From www.youtube.com

21 What is mount point create mount point using dbutils.fs.mount

From www.databricks.com

NFS Mounting in Databricks Product Databricks Blog

From blog.ithubcity.com

25 Delete or Unmount Mount Points in Azure Databricks

From www.youtube.com

Create mount points using sas token in databricks AWS and Azure and

From www.youtube.com

Databricks Mount To AWS S3 And Import Data YouTube

From www.youtube.com

20. Delete or Unmount Mount Points in Azure Databricks YouTube

From community.databricks.com

How to migrate from mount points to Unity Catalog Databricks

From www.youtube.com

How to mount a azure storage folder to databricks (DBFS)? YouTube

From infinitelambda.com

How to Use Databricks on AWS for PySpark Data Flows Infinite Lambda

From takethenotes.com

Exploring The World Of Mount Points In Linux Disk Management Take The

From www.aiophotoz.com

How To Configure Azure Data Lake Mount Points On Azure Databricks

From learn.microsoft.com

Modern analytics architecture with Azure Databricks, Azure Architecture Center

From www.vrogue.co

Como Configurar Mount Points Do Azure Data Lake No Az vrogue.co

From www.tpsearchtool.com

How To Configure Azure Data Lake Mount Points On Azure Databricks Images

From www.youtube.com

Databricks Mounts Mount your AWS S3 bucket to Databricks YouTube

From rajanieshkaushikk.com

Empower Data Analysis with Materialized Views in Databricks SQL

From www.youtube.com

Azure Databricks Configure Datalake Mount Point Do it yourself

From datalyseis.com

mount adls in DataBricks with SPN and oauth2 DataLyseis

From www.youtube.com

How to Mount or Connect your AWS S3 Bucket in Databricks YouTube

From www.youtube.com

How to create Mount Point using Service Principal in Databricks YouTube

From hxehawklw.blob.core.windows.net

Mount Point Databricks at Jessica Botello blog

From www.youtube.com

Views in Databricks Standard View, Temp View and Global View using
