Partitioning and Bucketing in PySpark

In PySpark, Databricks, and similar big data processing platforms, partitioning and bucketing are two different techniques for organizing the data behind a DataFrame or table. Both are used to optimize query performance. As data volumes grow, managing that layout efficiently becomes crucial.

At a high level, a Hive-style partition splits a large table into smaller tables based on the values of a column, with one partition for each distinct value. Bucketing instead decomposes the data into a fixed number of more manageable parts (buckets), and you specify how many buckets you want. Each row's bucket is determined by hashing the bucketing column.

The main motivation for bucketing is to optimize join queries by avoiding shuffles (also called exchanges) of the tables participating in the join.
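The difference between the two layouts is easiest to see in miniature. The following plain-Python sketch does not need Spark. The sample rows and the helpers `hive_partition` and `prune` are hypothetical. It mimics Hive-style partitioning by creating one group per distinct column value, keyed by a `column=value` name like the subdirectories that PySpark's `df.write.partitionBy("country")` writes. A filter on that column then touches only one group.

```python
from collections import defaultdict

# Hypothetical sample rows; in PySpark these would be DataFrame rows.
rows = [
    {"id": 1, "country": "US", "amount": 10},
    {"id": 2, "country": "DE", "amount": 20},
    {"id": 3, "country": "US", "amount": 30},
    {"id": 4, "country": "IN", "amount": 40},
]


def hive_partition(rows, column):
    """Group rows into one partition per distinct value of `column`.

    Keys mimic the `column=value` directory names of Hive-style partitioned
    tables. The partition column is dropped from the stored rows because its
    value is already encoded in the path.
    """
    partitions = defaultdict(list)
    for row in rows:
        path = f"{column}={row[column]}"
        partitions[path].append({k: v for k, v in row.items() if k != column})
    return dict(partitions)


def prune(partitions, column, value):
    """Return only the partition matching `column == value`, the way a filter
    on the partition column lets an engine skip every other directory."""
    return partitions.get(f"{column}={value}", [])


parts = hive_partition(rows, "country")
print(sorted(parts))  # ['country=DE', 'country=IN', 'country=US']
print(prune(parts, "country", "US"))
```

The number of partitions equals the number of distinct values. Partitioning therefore suits low-cardinality columns such as a date or a country. Partitioning on something like a user ID would produce a very large number of tiny partitions.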

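Bucketing fixes the number of buckets instead. Here is a minimal plain-Python sketch of hashing a column into a chosen number of buckets. `bucket_id` and `bucketize` are hypothetical names, and CRC32 is an assumed stand-in hash. Spark uses its own Murmur3-based hash, so real bucket assignments differ. In PySpark the corresponding write is along the lines of `df.write.bucketBy(4, "user_id").saveAsTable("events_bucketed")`, where the table name is illustrative. Note that `bucketBy` works with `saveAsTable`, not with a plain path-based `save`.

```python
import zlib


def bucket_id(key, num_buckets):
    """Map a key to a bucket number in [0, num_buckets).

    CRC32 is a deterministic stand-in; Spark uses its own Murmur3-based
    hash, so real bucket numbers will differ.
    """
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def bucketize(rows, column, num_buckets):
    """Split rows into exactly `num_buckets` buckets by hashing `column`."""
    buckets = [[] for _ in range(num_buckets)]
    for row in rows:
        buckets[bucket_id(row[column], num_buckets)].append(row)
    return buckets


# 100 distinct user IDs (hypothetical data) end up in just 4 buckets.
rows = [{"user_id": i, "clicks": i * 3} for i in range(1, 101)]
buckets = bucketize(rows, "user_id", 4)
print([len(b) for b in buckets])
```

However many distinct `user_id` values there are, the data ends up in exactly four buckets, and every row with a given key lands in the same one. That second property is what makes shuffle-free joins possible.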

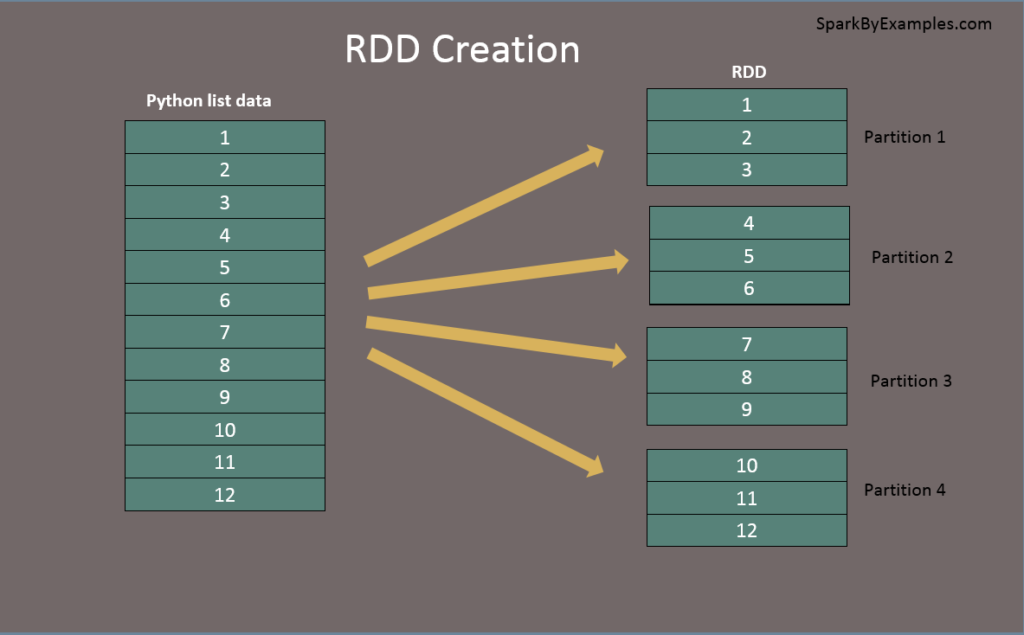

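The join optimization follows from that property. Suppose two tables are bucketed on the join key with the same number of buckets. Matching keys then sit in buckets with the same number on both sides, so bucket i of one table only needs to be joined with bucket i of the other, and no rows have to be exchanged. The following plain-Python sketch simulates this. The tables, helper names, and CRC32 hash are assumptions for illustration, not Spark internals.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 4  # both tables must use the same bucket count


def bucket_id(key, num_buckets=NUM_BUCKETS):
    # CRC32 is a stand-in; Spark uses a Murmur3-based hash for buckets.
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def bucketize(rows, key):
    """Place each row in the bucket chosen by hashing its `key` column."""
    buckets = [[] for _ in range(NUM_BUCKETS)]
    for row in rows:
        buckets[bucket_id(row[key])].append(row)
    return buckets


def bucketed_join(left_buckets, right_buckets, key):
    """Inner-join two tables that are bucketed on `key` the same way.

    Equal keys hash to the same bucket number on both sides, so bucket i on
    the left is joined only with bucket i on the right. No row ever moves to
    a different bucket (no shuffle). Each bucket pair uses a local hash join.
    """
    out = []
    for left_rows, right_rows in zip(left_buckets, right_buckets):
        index = defaultdict(list)
        for rrow in right_rows:
            index[rrow[key]].append(rrow)
        for lrow in left_rows:
            for rrow in index[lrow[key]]:
                out.append({**lrow, **rrow})
    return out


# Hypothetical sample tables.
orders = [{"order_id": i, "user_id": u} for i, u in enumerate([1, 2, 2, 3, 5, 8, 13])]
users = [{"user_id": u, "name": f"user{u}"} for u in range(1, 11)]

joined = bucketed_join(bucketize(orders, "user_id"), bucketize(users, "user_id"), "user_id")
print(len(joined))  # 6 (user 13 has no row in `users`)
```

In Spark itself, `DataFrame.explain()` offers a reasonable way to check whether bucketing took effect. When the shuffle is avoided, there is typically no `Exchange` node above the scans of the two bucketed tables.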
