Adapters Machine Learning. A recent paper proposes adapter modules, which provide parameter efficiency by adding only a few trainable parameters per task. The main idea of the paper is to enable transfer learning for NLP on an incoming stream of tasks without training a new model for every new task. To demonstrate the adapters' effectiveness, the authors transfer the then recently proposed BERT Transformer model to 26 diverse text classification tasks.
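To make "a few trainable parameters per task" concrete, here is a minimal sketch of a bottleneck adapter: a down-projection, a nonlinearity, an up-projection, and a residual connection. The function names, the ReLU nonlinearity, the sizes (hidden size 768, bottleneck 64, 12 layers, two adapters per layer), and the rough 110M base-model parameter count are all illustrative assumptions, not figures taken from this page. The count also ignores any task head or layer-norm parameters that may be trained alongside the adapters.

```python
def adapter_param_count(hidden_size: int, bottleneck_size: int) -> int:
    """Weights and biases of one bottleneck adapter (down- and up-projection)."""
    down = hidden_size * bottleneck_size + bottleneck_size
    up = bottleneck_size * hidden_size + hidden_size
    return down + up


def added_params_per_task(hidden_size, bottleneck_size, num_layers, adapters_per_layer=2):
    """Trainable parameters added for one new task (assumed layout, see lead-in)."""
    return num_layers * adapters_per_layer * adapter_param_count(hidden_size, bottleneck_size)


def adapter_forward(x, w_down, b_down, w_up, b_up):
    """Apply one adapter to a single hidden vector x (plain Python lists).

    w_down has shape [d][m], w_up has shape [m][d]. ReLU is an assumption here.
    """
    d, m = len(x), len(b_down)
    hidden = [
        max(0.0, sum(x[i] * w_down[i][j] for i in range(d)) + b_down[j])
        for j in range(m)
    ]
    delta = [sum(hidden[j] * w_up[j][k] for j in range(m)) + b_up[k] for k in range(d)]
    # Residual connection: the adapter only adds a correction to its input.
    return [xi + di for xi, di in zip(x, delta)]


# Illustrative sizes only (hypothetical, roughly BERT-base-like).
hidden_size, bottleneck_size, num_layers = 768, 64, 12
base_model_params = 110_000_000  # rough assumed size of the frozen base model

added = added_params_per_task(hidden_size, bottleneck_size, num_layers)
print(f"added per task: {added:,} ({added / base_model_params:.2%} of the base model)")
```

With the up-projection initialised to zero, `adapter_forward` returns its input unchanged, so a freshly added adapter leaves the frozen base model's behaviour intact until training moves the weights. Only the adapter parameters need to be stored per task, while the base model is shared.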

From zhuanlan.zhihu.com

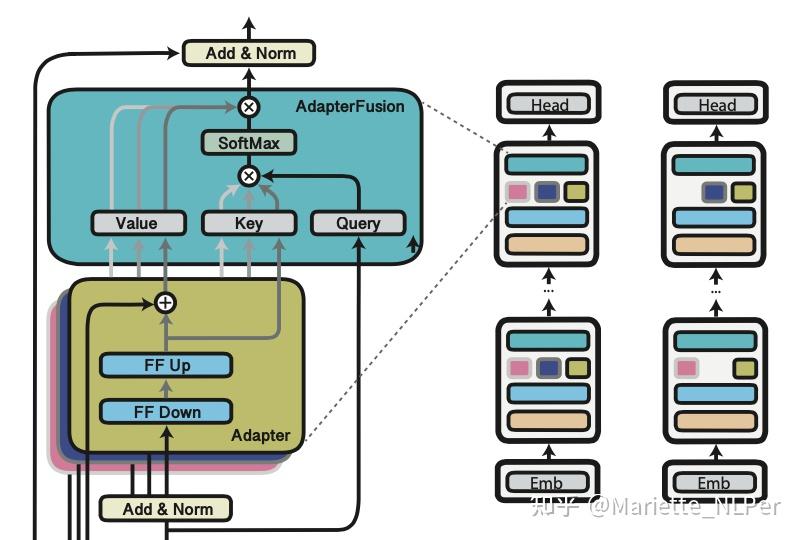

Parameter-Efficient Transfer Learning: a roundup of Adapter tuning papers (1), Zhihu

From www.researchgate.net

Machine learning algorithms and common applications.

From aclanthology.org

Adapters: A Unified Library for Parameter-Efficient and Modular

From www.turing.com

The Ultimate Guide to Transformer Deep Learning

From morioh.com

How to Choose the Machine Learning Algorithm That’s Right for You

From blog.csdn.net

CLIP-Adapter: Better Vision-Language Models with Feature Adapters, CSDN Blog

From zhuanlan.zhihu.com

Parameter-Efficient Transfer Learning: a roundup of Adapter tuning papers (1), Zhihu

From zhuanlan.zhihu.com

[NLP Study] An Introduction to Adapters, Zhihu

From datasciencedojo.com

Top 8 Machine Learning algorithms explained

From docs.adapterhub.ml

Adapter Methods - AdapterHub documentation

From paperswithcode.com

Exploring Adapter-based Transfer Learning for Systems

From www.pinterest.com

Non-exhaustive list of adapters to read different file storage formats

From www.researchgate.net

(PDF) CDR-Adapter: Learning Adapters to Dig Out More Transferring

From www.projectpro.io

Machine Learning Model Deployment: A Beginner’s Guide

From www.elastic.co

What is Machine Learning? A Comprehensive ML Guide Elastic

From www.designworldonline.com

Adapters Enable Better Communication Between Machines

From aclanthology.org

Language-Family Adapters for Low-Resource Multilingual Neural Machine

From www.researchgate.net

(PDF) T2I-Adapter: Learning Adapters to Dig out More Controllable

From deepai.org

Communication Efficient Federated Learning for Multilingual Neural

From medium.com

Understanding Boosting in Machine Learning: A Comprehensive Guide by

From paperswithcode.com

Adapters for Enhanced Modeling of Multilingual Knowledge and Text

From www.scribd.com

LLM Adapters PDF Matrix (Mathematics) Machine Learning

From deepai.org

Exploring Adapter-based Transfer Learning for Systems

From www.thinkautonomous.ai

19 Machine Learning Types you need to know (Advanced Mindmap)

From softei.com

Adapter board allows machine learning based sensor fusion

From towardsdatascience.com

How Machine Learning Works! An introduction into Machine Learning

From deepai.org

Multi-Domain Learning with Modulation Adapters DeepAI

From cacm.acm.org

Techniques for Interpretable Machine Learning January 2020

From cloud2data.com

Know About The Types Of Machine Learning Cloud2Data

From www.semanticscholar.org

Figure 1 from Learning Adapters for Code-Switching Speech Recognition

From lena-voita.github.io

Transfer Learning

From enstoa.com

An Introduction to Adapters Enstoa