Dropout Neural Network Tensorflow

Dropout is a regularization method that approximates training a large number of neural networks with different architectures in parallel. It works by probabilistically removing, or “dropping out,” units during training, which randomly reduces the number of interconnected neurons within the network. In TensorFlow, the dropout layer randomly sets input units to 0 with a frequency of rate at each step during training time, which helps prevent overfitting. Dropout is applied after certain layers of the network, and it is a computationally cheap way to regularize a deep neural network. To recap, the most common ways to prevent overfitting in neural networks include reducing the capacity of the network and adding dropout. We will create a simple convolutional neural network (CNN) with dropout layers to demonstrate the use of dropout in TensorFlow.
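The masking behavior described above can be sketched in a few lines of plain Python. This is a minimal illustration, not TensorFlow's implementation: the function name `dropout`, its `training` flag, and the `rng` argument are hypothetical names chosen for this example. It assumes the "inverted dropout" convention that the Keras `Dropout` layer documents, where units that survive are scaled up by 1 / (1 - rate) so that the expected sum of the inputs is unchanged.

```python
import random


def dropout(inputs, rate, training=True, rng=None):
    """Illustrative inverted dropout on a flat list of floats.

    Each value is zeroed with probability `rate`; surviving values are
    scaled by 1 / (1 - rate). With training=False the input is returned
    unchanged, mirroring how dropout is disabled at inference time.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(inputs)
    if rng is None:
        rng = random.Random()
    scale = 1.0 / (1.0 - rate)
    # Draw one random number per unit; drop the unit if it falls below rate.
    return [0.0 if rng.random() < rate else x * scale for x in inputs]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same mask
    activations = [1.0] * 10
    print("training :", dropout(activations, rate=0.5, rng=rng))
    print("inference:", dropout(activations, rate=0.5, training=False))
```

In a Keras model the same effect would normally come from adding a `tf.keras.layers.Dropout(rate)` layer after the layers whose outputs should be regularized, rather than from hand-written masking like this.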

From learnopencv.com

Implementing a CNN in TensorFlow & Keras

From www.digitalocean.com

How To Build a Neural Network to Recognize Handwritten Digits with TensorFlow DigitalOcean

From www.thecrazyprogrammer.com

Introduction to TensorFlow

From www.researchgate.net

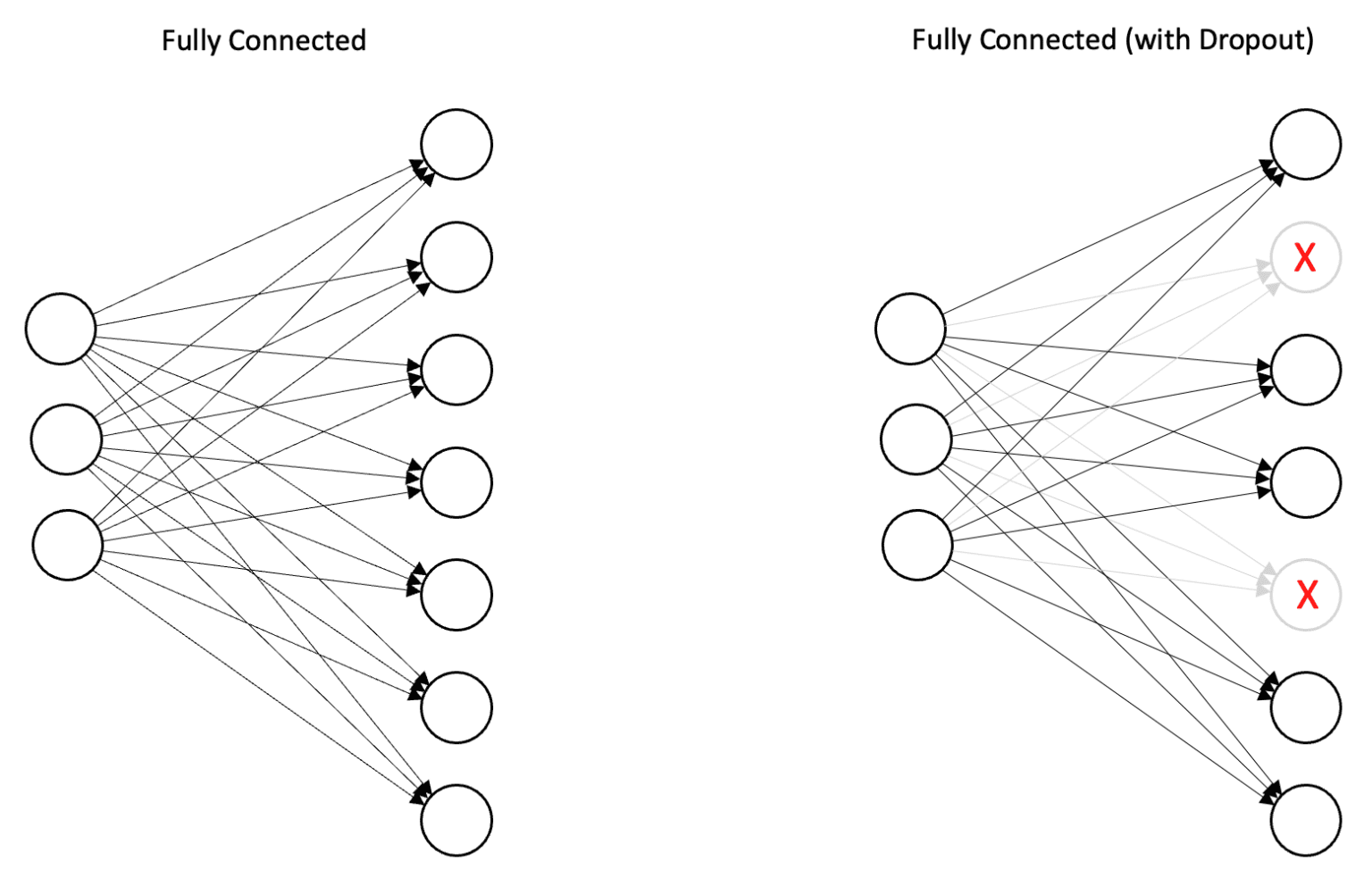

Dropout figure. (a) Traditional neural network. (b) Dropout neural network.

From www.researchgate.net

13 Dropout Neural Net Model (Srivastava et al., 2014) a) standard...

From learnopencv.com

Implementing a CNN in TensorFlow & Keras

From wikidocs.net

Z_15. Dropout EN Deep Learning Bible 1. from Scratch Eng.

From www.researchgate.net

Example of dropout in a hypothetical neural network. The blue hatched...

From www.techtarget.com

What is Dropout? Understanding Dropout in Neural Networks

From www.researchgate.net

An example of dropout neural network

From www.researchgate.net

Neural network structure (left) with and (right) without dropout layer.

From www.researchgate.net

A neural network with (a) and without (b) dropout layers. The red...

From programmathically.com

Dropout Regularization in Neural Networks How it Works and When to Use It Programmathically

From shichaoji.com

tensorflow neural network dropout decay learning rate Data Science Notebook

From www.reddit.com

Dropout in neural networks what it is and how it works r/learnmachinelearning

From datascience.stackexchange.com

How dropout work during testing in neural network Data Science Stack Exchange

From www.youtube.com

What is Dropout technique in Neural networks YouTube

From www.youtube.com

Convolutional Neural Networks Deep Learning basics with Python, TensorFlow and Keras p.3 YouTube

From stackoverflow.com

tensorflow Convolutional Neural Network Dropout kills performance Stack Overflow

From rpmarchildon.com

Building Neural Networks in TensorFlow Ryan P. Marchildon

From www.bualabs.com

What is Dropout? An introduction to using Dropout to reduce overfitting in deep neural networks. Regularization ep.2

From www.researchgate.net

Dropout schematic (a) Standard neural network; (b) after applying dropout.

From stackabuse.com

Introduction to Neural Networks with ScikitLearn

From medium.com

Create a Convolutional Neural Network with TensorFlow

From www.researchgate.net

Schematic diagram of Dropout. (a) Primitive neural network. (b) Neural...

From stackoverflow.com

tensorflow Convolutional Neural Network Dropout kills performance Stack Overflow

From www.researchgate.net

Neural network model using dropout.

From www.baeldung.com

How ReLU and Dropout Layers Work in CNNs Baeldung on Computer Science

From www.youtube.com

Tensorflow 17 Regularization dropout (neural network tutorials) YouTube

From www.frontiersin.org

Frontiers Dropout in Neural Networks Simulates the Paradoxical Effects of Deep Brain

From www.oreilly.com

4. Fully Connected Deep Networks TensorFlow for Deep Learning [Book]

From www.researchgate.net

Example of dropout Neural Network (a) A standard Neural Network; (b) A...

From www.youtube.com

Tensorflow Tutorial How to use dropout and batch normalisation in Deep neural network(EASY

From www.youtube.com

Tutorial 9 Drop Out Layers in Multi Neural Network YouTube

From www.researchgate.net

Dropout figure. (a) Traditional neural network. (b) Dropout neural network.

From stackoverflow.com

tensorflow Subsequent dropout layers all connected to the first in keras? Stack Overflow