PySpark Catch Java Exception

Handling errors in PySpark: PySpark errors can be handled in the usual Python way, with a try/except block. This is Python's counterpart to Java's try/catch. Wrapping a call in try/except lets you catch any exception that may occur while your code executes, including errors raised on the Java side, which PySpark surfaces as Python exceptions.

Suppose you are building a new application and looking for ideas on how to handle exceptions in Spark. For example, after around 180k Parquet tables have been written to Hadoop, the Python worker unexpectedly crashes because of an EOFException in Java. In such a situation, you may want to catch all possible exceptions. Your end goal may be to save these error messages to …
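The following is a minimal sketch of the catch-everything-and-record approach. It assumes each unit of work, such as writing one Parquet table, can be wrapped in a zero-argument callable. `run_and_record` is a hypothetical helper and not a PySpark API. It catches `Exception` for each task so that one failure does not stop the loop, and it returns the failures so you can save them wherever you need. It only records errors. It does not make Spark recover from a crashed worker.

```python
from typing import Callable, Dict, List, Tuple


def run_and_record(
    tasks: Dict[str, Callable[[], object]],
) -> List[Tuple[str, str, str]]:
    """Run every task; return (name, exception type, first message line) per failure."""
    failures: List[Tuple[str, str, str]] = []
    for name, task in tasks.items():
        try:
            task()
        except Exception as exc:  # broad on purpose: record everything, keep going
            # Keep only the first line; errors that wrap a Java stack trace can be very long.
            lines = str(exc).splitlines()
            failures.append((name, type(exc).__name__, lines[0] if lines else ""))
    return failures
```

In a real job, each callable might be something like `lambda: df.write.parquet(path)`, where `df` and `path` are your own objects. Catching `Exception` instead of using a bare `except:` still lets `KeyboardInterrupt` stop the run.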

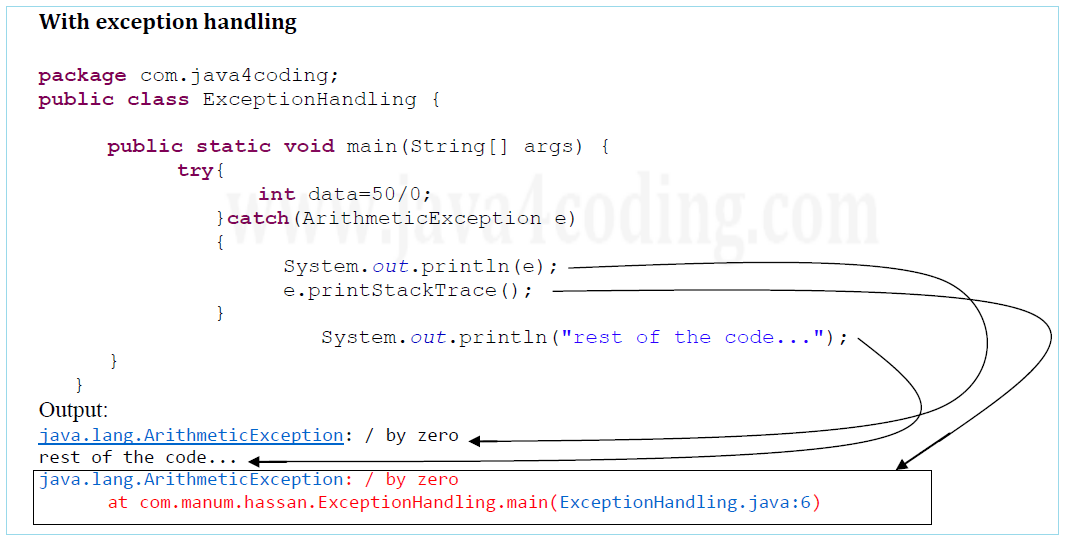

from www.java4coding.com

PySpark 3.4 and later also define exception classes in the `pyspark.errors` module. `PySparkException` is the base exception for handling errors generated from PySpark. `AnalysisException([message, error_class, …])` is raised when Spark fails to analyze a SQL query, for example one that references a column or table that does not exist.
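Java exceptions that PySpark does not convert into one of its own classes typically reach Python as `py4j.protocol.Py4JJavaError`. The string form of that error includes the Java exception and its stack trace. The sketch below is a hypothetical helper that uses only the standard `re` module. It extracts package-qualified exception class names such as `java.io.EOFException` from that text so your `except` block can branch on them. The regex is a heuristic and is not a full parser for JVM stack traces.

```python
import re
from typing import List

# Heuristic: a dotted, lowercase package prefix followed by a class name that
# ends in "Exception" or "Error", e.g. java.io.EOFException.
_QUALIFIED_EXC = re.compile(r"\b((?:[a-z_]\w*\.)+[A-Z]\w*(?:Exception|Error))\b")


def java_exception_names(message: str) -> List[str]:
    """Return qualified exception class names found in `message`.

    Names appear in order of first occurrence, without duplicates.
    """
    names: List[str] = []
    for name in _QUALIFIED_EXC.findall(message):
        if name not in names:
            names.append(name)
    return names
```

For example, inside `except Exception as exc:` you could check `"java.io.EOFException" in java_exception_names(str(exc))` before deciding whether to retry.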


You can also test a PySpark function that throws an exception. The test calls the function and verifies that the expected exception is raised, so the error does not fail the suite unexpectedly.
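Here is a minimal, self-contained sketch using the standard `unittest` module. Because it has to run without a Spark cluster, `read_table` and `TableNotFound` are hypothetical stand-ins. With PySpark installed, you would call something like `spark.table(name)` and assert on `pyspark.errors.AnalysisException` instead. When `assertRaises` is used as a context manager, it verifies that the exception is raised, and `ctx.exception` lets you check what the exception says.

```python
import unittest
from typing import AbstractSet


class TableNotFound(Exception):
    """Hypothetical stand-in for pyspark.errors.AnalysisException."""


def read_table(name: str, known_tables: AbstractSet[str]) -> str:
    """Hypothetical function under test: raises if the table is unknown."""
    if name not in known_tables:
        raise TableNotFound(f"unknown table: {name}")
    return name


class ReadTableTest(unittest.TestCase):
    def test_missing_table_raises(self):
        with self.assertRaises(TableNotFound) as ctx:
            read_table("missing", {"sales"})
        # Check the message as well as the exception type.
        self.assertIn("missing", str(ctx.exception))

    def test_known_table_returns_name(self):
        self.assertEqual(read_table("sales", {"sales"}), "sales")
```

Run it with `python -m unittest <module>`. Under pytest, `pytest.raises` serves the same purpose.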
