Partition A Data Set . Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and the. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. For a more complete approach take a look at the createdatapartition function in the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. There are numerous approaches to achieve data partitioning.

from fake-database.blogspot.com

If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. There are numerous approaches to achieve data partitioning. Data partitioning criteria and the. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. For a more complete approach take a look at the createdatapartition function in the. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data.

Database Partitioning Types Fake Database

Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. For a more complete approach take a look at the createdatapartition function in the. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. There are numerous approaches to achieve data partitioning.

From exoutxbql.blob.core.windows.net

Partition Data Set In R at Amparo Hyman blog Partition A Data Set There are numerous approaches to achieve data partitioning. For a more complete approach take a look at the createdatapartition function in the. Data partitioning criteria and the. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Data partitioning is the process of dividing a large dataset into smaller,. Partition A Data Set.

From www.researchgate.net

Dataset and partition. a shows the partition details about how the Partition A Data Set Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data partitioning criteria and the. If you. Partition A Data Set.

From www.cockroachlabs.com

What is data partitioning, and how to do it right Partition A Data Set In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data partitioning criteria and the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a. Partition A Data Set.

From www.researchgate.net

Horizontally and vertically partitioned data. Horizontal partitions Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. There are numerous approaches to achieve data partitioning. For a more complete approach take a look at the createdatapartition function in. Partition A Data Set.

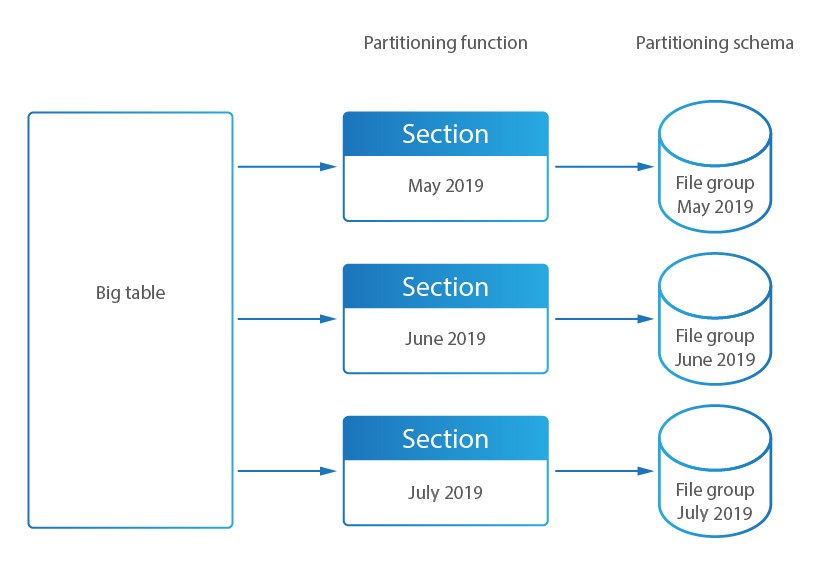

From learn.microsoft.com

Data partitioning strategies Azure Architecture Center Microsoft Learn Partition A Data Set There are numerous approaches to achieve data partitioning. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. In system design, partitioning strategies play a critical role in managing. Partition A Data Set.

From consumer.huawei.com

How to create disk partitions on Windows 10 HUAWEI Global Partition A Data Set For a more complete approach take a look at the createdatapartition function in the. Data partitioning criteria and the. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you. Partition A Data Set.

From fake-database.blogspot.com

Database Partitioning Types Fake Database Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. For a more complete approach take a look at the createdatapartition function in the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. In system. Partition A Data Set.

From knowledge.dataiku.com

Concept Partitioning — Dataiku Knowledge Base Partition A Data Set There are numerous approaches to achieve data partitioning. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. For a more complete approach take a look at the createdatapartition function in the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need. Partition A Data Set.

From www.partitionwizard.com

How to Set Partition as Logical MiniTool Partition Wizard Tutorial Partition A Data Set If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called. Partition A Data Set.

From www.slideserve.com

PPT Set Theory PowerPoint Presentation, free download ID1821887 Partition A Data Set Data partitioning criteria and the. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. There are numerous approaches to achieve data partitioning. For a more complete approach take a look at the createdatapartition function in the. Data partitioning is the process of dividing a large dataset into smaller,. Partition A Data Set.

From questdb.io

What Is Database Partitioning? Partition A Data Set Data partitioning criteria and the. For a more complete approach take a look at the createdatapartition function in the. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. If you. Partition A Data Set.

From www.slideserve.com

PPT CS201 Data Structures and Discrete Mathematics I PowerPoint Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and the. There are numerous approaches to achieve data partitioning. If you want to split the data set once. Partition A Data Set.

From recoverit.wondershare.com

What Is Basic Data Partition & Its Difference From Primary Partition Partition A Data Set In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. For a more complete approach take a look at the createdatapartition function in the. Data splitting is a crucial. Partition A Data Set.

From www.slideserve.com

PPT Data Partitioning SQL Server Satya PowerPoint Presentation Partition A Data Set Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. For a more complete approach take a look at the createdatapartition function in the. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. If you want to split the data set once in two. Partition A Data Set.

From www.slideserve.com

PPT Sets PowerPoint Presentation, free download ID7164 Partition A Data Set There are numerous approaches to achieve data partitioning. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. For a more complete approach take a look. Partition A Data Set.

From github.com

[DOC] How to partition a dataset with nvt.Dataset & workflow so that Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data partitioning criteria and the. There are numerous approaches to achieve data partitioning. Data partitioning is. Partition A Data Set.

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark Partition A Data Set There are numerous approaches to achieve data partitioning. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data partitioning criteria and the. Data partitioning is the process of dividing a. Partition A Data Set.

From www.youtube.com

Partitions of a set YouTube Partition A Data Set Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and the. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as. Partition A Data Set.

From www.chegg.com

For Analytic Solver, partition the data sets into 50 Partition A Data Set Data partitioning criteria and the. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. For a more complete approach take a look at the createdatapartition function in the. If you want to split the. Partition A Data Set.

From www.youtube.com

PARTITION SET (set theory) how to partition a set with example 🔥 Partition A Data Set Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data splitting is a crucial process in. Partition A Data Set.

From www.easeus.com

How to Partition 1TB Hard Disk in Windows 11/10 (2 Methods) EaseUS Partition A Data Set There are numerous approaches to achieve data partitioning. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and the. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. For a more complete approach take a look at the createdatapartition function. Partition A Data Set.

From arpitbhayani.me

Data Partitioning Partition A Data Set For a more complete approach take a look at the createdatapartition function in the. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data splitting is a crucial process. Partition A Data Set.

From www.slideserve.com

PPT Basics of Set Theory PowerPoint Presentation, free download ID Partition A Data Set In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Partitioning a. Partition A Data Set.

From knowledge.dataiku.com

Concept Partitioning — Dataiku Knowledge Base Partition A Data Set If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. There are numerous approaches to achieve data partitioning. Data splitting is a crucial process in machine learning, involving the. Partition A Data Set.

From www.researchgate.net

Ordinal regression data sets partitions Download Table Partition A Data Set If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. For a more complete approach take a look at the createdatapartition function in the. Data partitioning. Partition A Data Set.

From knowledge.dataiku.com

Concept Summary Partitioned Models — Dataiku Knowledge Base Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. There are numerous approaches to achieve data partitioning. Data partitioning criteria and the. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. Data partitioning is the process of dividing a. Partition A Data Set.

From www.youtube.com

How to Partition a Set into subsets of disjoint sets YouTube Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. There are numerous approaches to achieve data partitioning. Data partitioning is the process of dividing a large dataset into smaller, more. Partition A Data Set.

From subscription.packtpub.com

Partitioning Introducing Microsoft SQL Server 2019 Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. For a more complete approach take a look at the createdatapartition function in the. There are numerous approaches to achieve data. Partition A Data Set.

From www.youtube.com

Partitions of a Set Set Theory YouTube Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. There are numerous approaches to achieve data partitioning. In system design, partitioning strategies play a critical role in managing. Partition A Data Set.

From www.slideserve.com

PPT Sets, Functions and Relations PowerPoint Presentation, free Partition A Data Set Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called. Partition A Data Set.

From www.researchgate.net

The dataset partition process of 5‐fold cross‐validation. Download Partition A Data Set If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. In system design, partitioning strategies play a critical role in managing and scaling large datasets across. Partition A Data Set.

From www.sqlshack.com

How to automate Table Partitioning in SQL Server Partition A Data Set In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such. Partition A Data Set.

From docs.griddb.net

Database function GridDB Docs Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data partitioning criteria and the. For a more complete approach take a look at the createdatapartition function in the.. Partition A Data Set.

From www.youtube.com

Combinatorics of Set Partitions [Discrete Mathematics] YouTube Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. In system design, partitioning strategies play a critical role in managing and scaling large datasets across distributed systems. If you want to split the data set once in two parts, you can use numpy.random.shuffle, or numpy.random.permutation if you need to keep. Data. Partition A Data Set.

From www.slideserve.com

PPT CS1022 Computer Programming & Principles PowerPoint Presentation Partition A Data Set Partitioning a large dataframe can significantly improve performance by allowing for more efficient processing of subsets of data. Data splitting is a crucial process in machine learning, involving the partitioning of a dataset into different subsets, such as training,. Data partitioning is the process of dividing a large dataset into smaller, more manageable subsets called partitions. Data partitioning criteria and. Partition A Data Set.