Airflow Dag Multiple Files

Get to know the best ways to dynamically generate DAGs in Apache Airflow and to split DAG code across multiple files. Airflow loads DAGs from Python source files, which it looks for inside its configured DAG_FOLDER. It takes each file, executes it, and then loads any DAG objects from that file. The trick to breaking up a large DAG is to keep the DAG itself in one file, for example my_dag.py, and to put the logical chunks of tasks or TaskGroups in separate files. You can also create a DAG template with subtasks by writing a DAG factory. For dynamic generation, one approach stores the configuration in a structured flat file and loads it as a variable to drive a dynamic workflow: save the Python code in the dags_folder and use globals() to bind each generated DAG object to a module-level name so that Airflow can find it. When iterating over the collection of things to generate DAGs for, you can use the parsing context to determine whether you need to generate all DAG objects or only a single one. A related topic covered by the linked tutorials is using the Airflow SFTP operator to transfer multiple files from a local directory to a remote SFTP server.
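The flat-file, factory, and globals() ideas above can be sketched together as follows. This is a minimal illustration under stated assumptions, not the method of any linked article: the `StandInDag` dataclass is a hypothetical stand-in for `airflow.DAG` so that the sketch runs without an Airflow installation, and `CONFIG_TEXT`, `create_dag`, and `register_dags` are names invented for this example.

```python
import json
from dataclasses import dataclass, field


# Hypothetical stand-in for airflow.DAG so the sketch runs without Airflow.
# In a real DAG file you would import DAG from airflow and build real DAGs.
@dataclass
class StandInDag:
    dag_id: str
    schedule: str
    tags: list = field(default_factory=list)


# Assumed flat-file contents. In practice this text could be read from a
# JSON file stored alongside the DAG file.
CONFIG_TEXT = """
[
  {"name": "sales", "schedule": "@daily"},
  {"name": "inventory", "schedule": "@hourly"}
]
"""


def create_dag(entry):
    """DAG factory: build one DAG-like object from one config entry."""
    return StandInDag(
        dag_id=f"load_{entry['name']}",
        schedule=entry["schedule"],
        tags=["generated"],
    )


def register_dags(config_text, namespace):
    """Create one object per config entry and bind it to a name in namespace."""
    created = []
    for entry in json.loads(config_text):
        dag = create_dag(entry)
        # Binding the object to a module-level name is what makes it
        # discoverable when the file is executed by a loader.
        namespace[dag.dag_id] = dag
        created.append(dag.dag_id)
    return created


# Passing globals() registers every generated object at module level.
generated_ids = register_dags(CONFIG_TEXT, globals())
print(generated_ids)  # ['load_sales', 'load_inventory']
```

Passing the namespace in as an argument, rather than calling globals() inside the factory, keeps the factory testable with a plain dictionary while the DAG file itself still registers everything at module level.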

From betterdatascience.com

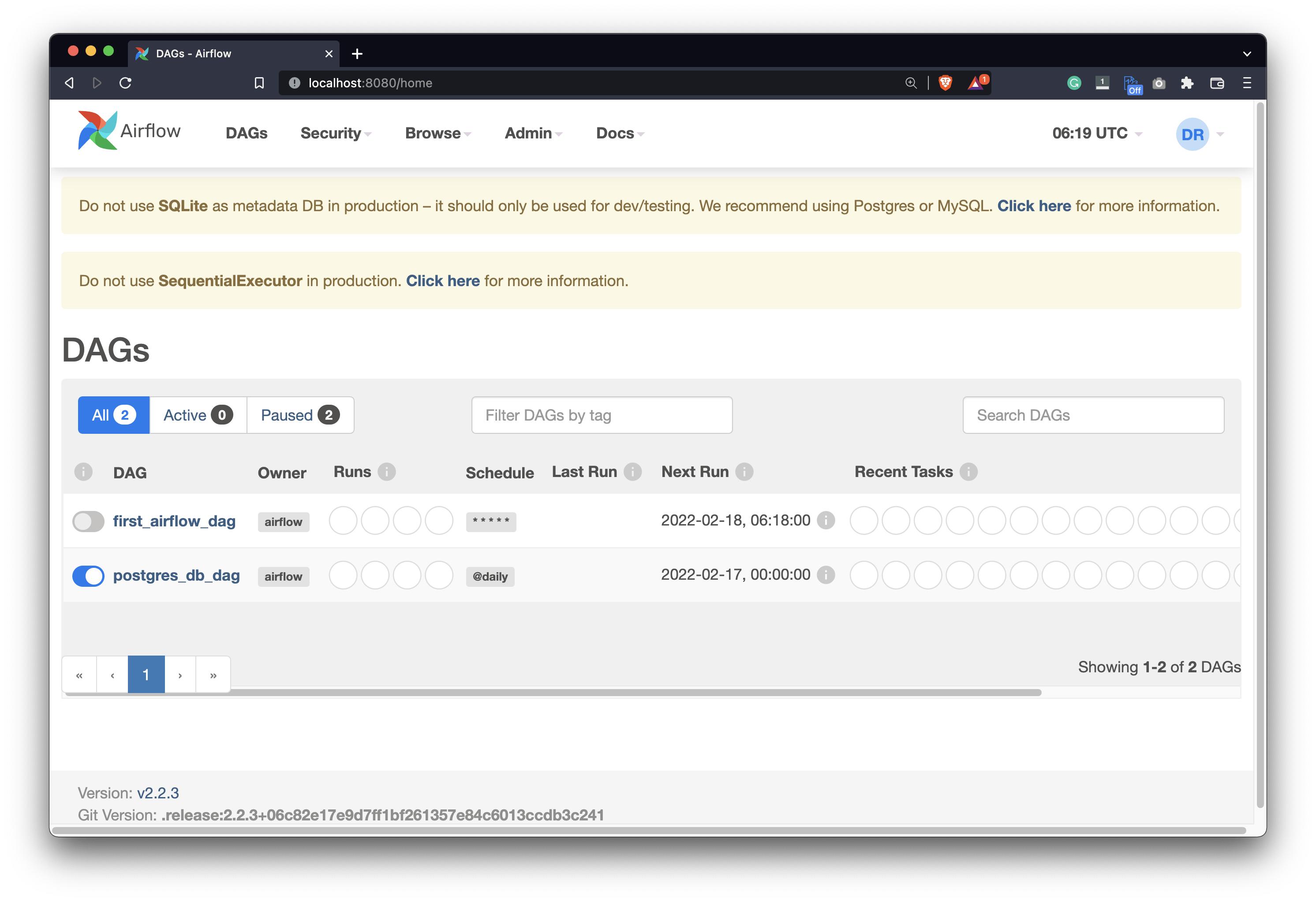

Apache Airflow for Data Science How to Work With Databases (Postgres

From betterdatascience.com

Apache Airflow for Data Science How to Write Your First DAG in 10

From 9to5answer.com

[Solved] External files in Airflow DAG 9to5Answer

From www.youtube.com

How Dags are scheduled along with understanding how states of dags

From www.youtube.com

Basic AIRFLOW DAG Structure How to execute AIRFLOW DAG from ADMIN UI

From blog.usejournal.com

Testing in Airflow Part 1 — DAG Validation Tests, DAG Definition Tests

From www.agari.com

Airflow DAG Scheduling Workflows at Agari

From github.com

GitHub learnbigdata/airflow_dag_example

From copyprogramming.com

Airflow Creating a DAG in Airflow using the UI through Airflow's Airflow

From www.qubole.com

Apache Airflow DAG Tutorial Qubole

From towardsdatascience.com

How to Upload Files to Google Drive using Airflow by Denis Gontcharov

From towardsdatascience.com

Break Up a Big Airflow DAG into Multiple Files by L. D. Nicolas May

From www.astronomer.io

How to View and Manage DAGs in Apache Airflow®

From hevodata.com

All About Airflow server Made Easy 101

From www.youtube.com

2. How to write your first DAG code in Apache Airflow? Airflow

From engineering.autotrader.co.uk

Autogenerating an Airflow DAG using the dbt manifest

From www.youtube.com

Create First DAG/Workflow Apache Airflow Practical Tutorial Part 3

From www.qubole.com

Airflow DAG Tutorial Airflow Operators Qubole

From www.scribd.com

Airflow DAG Best Practices DAG As Configuration File PDF

From www.upsolver.com

Apache Airflow When to Use it & Avoid it

From cwl-airflow.readthedocs.io

How it works — CWLAirflow documentation

From airflow.apache.org

DAG Serialization — Airflow Documentation

From towardsdatascience.com

Getting started with Apache Airflow by Adnan Siddiqi Towards Data

From airflow.apache.org

What is Airflow? — Airflow Documentation

From blog.dagworks.io

Simplify Airflow DAG Creation and Maintenance with Hamilton

From www.youtube.com

How to manage Airflow Dags in Production Dags versioning & Deployment

From blog.duyet.net

Airflow DAG Serialization

From www.blef.fr

Airflow dynamic DAGs

From www.youtube.com

Airflow Splitting DAG definition across multiple files YouTube

From airflow.apache.org

DAG File Processing — Airflow Documentation

From quadexcel.com

How to write your first DAG in Apache Airflow Airflow tutorials

From vaultspeed.com

VaultSpeed delivers automated workflows for Apache Airflow.

From copyprogramming.com

How to branch multiple paths in Airflow DAG using branch operator?

From medium.com

High Performance Airflow Dags. The below write up describes how we can