Standard Deviation Definition in Computing

The standard deviation (SD) is a single number that summarizes the variability, or spread, of a dataset. It measures how widely the data values are dispersed around their mean. Formally, the standard deviation of a dataset is the positive square root of the mean of the squared deviations from the mean, which is the same as the positive square root of the variance. Informally, it represents the typical distance between each data value and the mean.
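Written out, the definition above gives the formulas below for a dataset of N values x_1, ..., x_N with mean μ. The symbols N, x_i, μ and σ are notation chosen here for illustration, and the worked numbers use a small made-up dataset.

```latex
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i,
\qquad
\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2,
\qquad
\sigma = +\sqrt{\sigma^2}

% Worked example: x = (2, 4, 4, 4, 5, 5, 7, 9)
N = 8, \quad \mu = \tfrac{40}{8} = 5
\sigma^2 = \tfrac{1}{8}\,(9 + 1 + 1 + 1 + 0 + 0 + 4 + 16) = \tfrac{32}{8} = 4
\sigma = \sqrt{4} = 2
```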

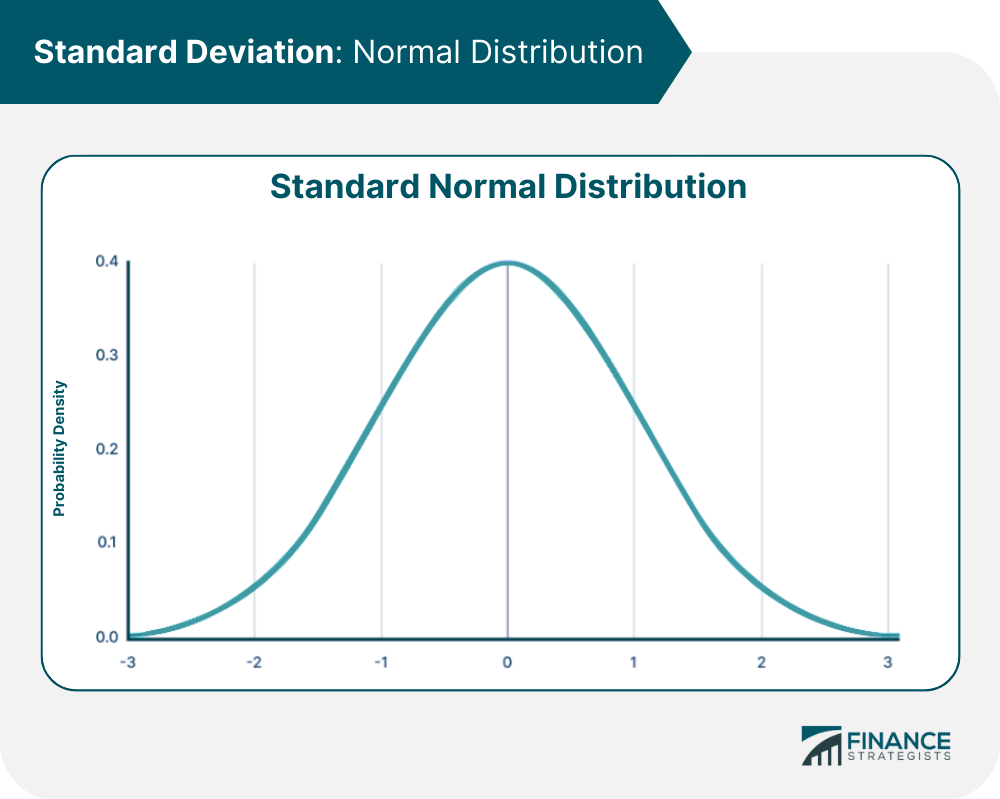

From www.financestrategists.com
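Following the definition directly, a minimal Python sketch computes the standard deviation as the positive square root of the mean squared deviation. The function name `population_sd` is hypothetical; the result is cross-checked against `statistics.pstdev` from Python's standard library, which computes the same population standard deviation.

```python
import math
import statistics


def population_sd(values):
    """Positive square root of the mean of squared deviations from the mean."""
    if not values:
        raise ValueError("standard deviation needs at least one value")
    n = len(values)
    mean = sum(values) / n
    # Squared distance of each value from the mean.
    squared_deviations = [(x - mean) ** 2 for x in values]
    # The variance is the mean of the squared deviations.
    variance = sum(squared_deviations) / n
    # math.sqrt returns the non-negative root, matching the "+ve" in the definition.
    return math.sqrt(variance)


data = [2, 4, 4, 4, 5, 5, 7, 9]
print(population_sd(data))
print(statistics.pstdev(data))
```

Both calls agree on this dataset. Note that `statistics.stdev` divides by n - 1 instead of n (the sample standard deviation) and so returns a somewhat larger value on small datasets; the definition used here corresponds to `pstdev`.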

Because it is the square root of the variance, the standard deviation is measured in the same units as the data and its mean, whereas the variance is measured in squared units (for example, metres versus square metres). The larger the standard deviation, the wider the spread: a histogram of data values with a wide spread corresponds to a large standard deviation, while values clustered tightly around the mean give a small one.
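To illustrate both the units point and the link between spread and the size of the standard deviation, the sketch below uses two made-up datasets of lengths in metres that share the same mean. It relies only on `statistics.mean`, `statistics.pvariance` and `statistics.pstdev` from Python's standard library; the dataset names `narrow` and `wide` are hypothetical.

```python
import math
import statistics

# Two hypothetical sets of lengths in metres, both with a mean of 10 m.
narrow = [9.0, 10.0, 10.0, 11.0]  # values cluster near the mean
wide = [4.0, 8.0, 12.0, 16.0]     # values spread far from the mean

for name, data in (("narrow", narrow), ("wide", wide)):
    var = statistics.pvariance(data)  # squared units: m^2
    sd = statistics.pstdev(data)      # same unit as the data: m
    # The standard deviation is the square root of the variance.
    assert math.isclose(sd, math.sqrt(var))
    print(f"{name}: mean={statistics.mean(data)} m, "
          f"variance={var} m^2, sd={sd:.3f} m")
```

The `wide` dataset has the larger standard deviation even though both means are equal, and only the standard deviation can be read directly in metres alongside the mean.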
