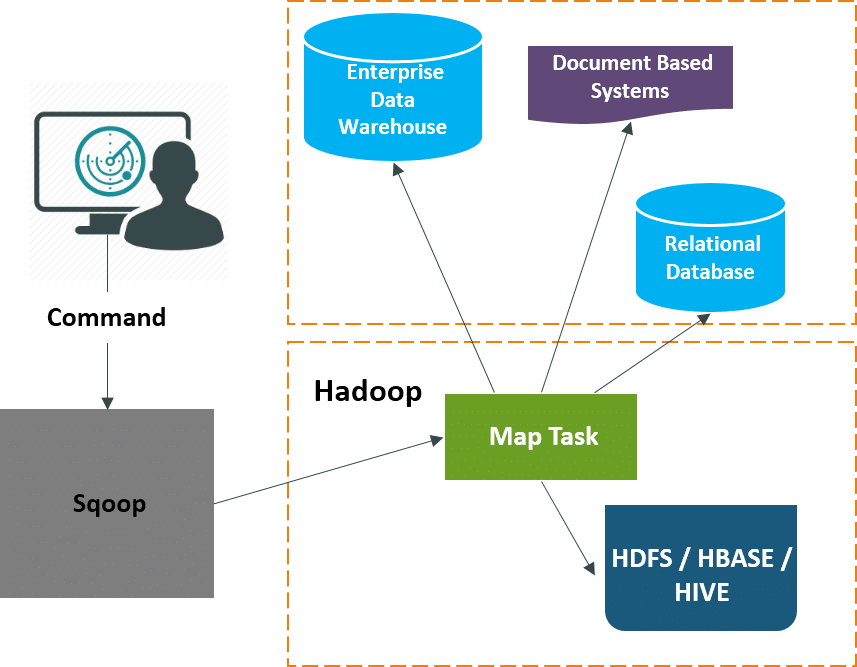

Sqoop Delete Data

Apache Sqoop is a data ingestion tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational databases. It is mainly used to move data from relational databases such as MySQL and Oracle into Hadoop. Sqoop import, as the name implies, transfers data from a relational database into the Hadoop Distributed File System (HDFS). Sqoop export does the opposite, moving data from Hadoop back into a relational database. Both directions run as MapReduce jobs, and Sqoop supports Kerberos authentication on secured clusters. Sqoop can also load data directly into Hive or HBase. One limitation to keep in mind is that a saved Sqoop job cannot be modified in place: you have to delete the job first and then create it again.
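
The import and export directions described above can be sketched in Python by assembling the Sqoop command lines as argument lists. This is a minimal sketch, not an official API: the helper names `sqoop_import`, `sqoop_export`, and `run`, as well as the JDBC URL, user, password-file path, tables, and HDFS paths, are hypothetical placeholders. Only the Sqoop options themselves (`--connect`, `--username`, `--password-file`, `--table`, `--target-dir`, `--num-mappers`, `--export-dir`) come from the Sqoop 1 command line.

```python
import shlex
import subprocess


def sqoop_import(jdbc_url, user, password_file, table, target_dir, mappers=4):
    """Hypothetical helper: argv for importing one relational table into HDFS."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,  # keeps the password off the command line
        "--table", table,
        "--target-dir", target_dir,         # HDFS directory that receives the files
        "--num-mappers", str(mappers),      # number of parallel map tasks
    ]


def sqoop_export(jdbc_url, user, password_file, table, export_dir):
    """Hypothetical helper: argv for exporting HDFS files into an existing table."""
    return [
        "sqoop", "export",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,
        "--table", table,                   # target table must already exist
        "--export-dir", export_dir,         # HDFS directory to read from
    ]


def run(argv, dry_run=True):
    """Print the shell-quoted command; execute it only when dry_run is False."""
    print(shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    url = "jdbc:mysql://db.example.com/shop"  # hypothetical MySQL database
    run(sqoop_import(url, "etl", "/user/etl/.db.password", "orders", "/data/orders"))
    run(sqoop_export(url, "etl", "/user/etl/.db.password", "order_totals", "/data/order_totals"))
```

Keeping `dry_run=True` lets you inspect the exact command before anything touches the cluster, and passing a list to `subprocess.run` avoids shell-quoting problems with JDBC URLs.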

From www.traininghub.io

Apache Sqoop Tutorial TrainingHub.io

From blog.csdn.net

Sqoop Installation and Deployment (CSDN Blog)

From www.projectpro.io

Connect to a database and import data into HBase using Sqoop
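
The introduction notes that Sqoop can load data directly into HBase. A minimal sketch of such an import, assuming Sqoop 1.4.x: the helper name and the database, table, column-family, and row-key values are hypothetical, while `--hbase-table`, `--column-family`, `--hbase-row-key`, and `--hbase-create-table` are Sqoop import options.

```python
import shlex


def sqoop_import_to_hbase(jdbc_url, user, password_file, table,
                          hbase_table, column_family, row_key):
    """Hypothetical helper: argv for importing a relational table into HBase."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,
        "--table", table,
        "--hbase-table", hbase_table,      # destination HBase table
        "--column-family", column_family,  # imported columns land in this family
        "--hbase-row-key", row_key,        # source column used as the HBase row key
        "--hbase-create-table",            # create the HBase table if it is missing
    ]


if __name__ == "__main__":
    argv = sqoop_import_to_hbase(
        "jdbc:mysql://db.example.com/shop",  # hypothetical database
        "etl", "/user/etl/.db.password",
        "customers", "customers", "info", "customer_id",
    )
    print(shlex.join(argv))
```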

From blog.csdn.net

Some Examples of Sqoop Import and Export: Exporting a CSV File from Hive with Sqoop (CSDN Blog)

From blog.csdn.net

Importing a MySQL Table into HDFS with Sqoop: The Import Succeeds but the Data Is Empty, Error: Unknown column '????' in 'field list' (Sqoop job succeeded but there is no data)

From www.youtube.com

Sqoop Job [create, execute, delete, modify at run time] (YouTube)
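
As the introduction notes, a saved job's definition cannot be edited in place, which is why this video covers delete alongside create and execute. The sketch below builds the `sqoop job` command lines for deleting and re-creating a job, and for running it with run-time overrides. The helper names, the job name `daily_orders`, and the import arguments are hypothetical. The `--create`, `--delete`, and `--exec` actions and the bare `--` separator are Sqoop's own.

```python
import shlex


def create_job(name, tool_args):
    """argv that saves `sqoop <tool_args>` as a named job."""
    # A bare "--" separates the job options from the saved tool invocation.
    return ["sqoop", "job", "--create", name, "--", *tool_args]


def delete_job(name):
    return ["sqoop", "job", "--delete", name]


def exec_job(name, overrides=()):
    """argv that runs a saved job; arguments after "--" override saved ones."""
    argv = ["sqoop", "job", "--exec", name]
    if overrides:
        argv += ["--", *overrides]
    return argv


def redefine_job(name, tool_args):
    """Saved jobs are not edited in place: delete the old definition, then re-create it."""
    return [delete_job(name), create_job(name, tool_args)]


if __name__ == "__main__":
    new_definition = [
        "import",
        "--connect", "jdbc:mysql://db.example.com/shop",  # hypothetical database
        "--table", "orders",
        "--incremental", "append",
        "--check-column", "order_id",
        "--last-value", "0",
    ]
    for argv in redefine_job("daily_orders", new_definition):
        print(shlex.join(argv))
    print(shlex.join(exec_job("daily_orders", ["--username", "etl"])))
```

Run the two commands from `redefine_job` in order (for example with `subprocess.run(argv, check=True)`) so the create step never runs after a failed delete. Deleting a job also discards the state Sqoop stores with it, such as the `--last-value` recorded after each incremental run, so carry the latest value over into the new definition.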

From www.zhihu.com

Using Sqoop: Importing into Hive and MySQL

From www.slideserve.com

PPT: Apache Sqoop Tutorial, Sqoop Import & Export Data From MySQL To HDFS, Hadoop Training

From www.cloudduggu.com

Apache Sqoop Architecture Tutorial (CloudDuggu)

From www.educba.com

Sqoop Commands: Complete List of Sqoop Commands with Tips & Tricks

From www.bmabk.com

Installing and Using Sqoop, a Structured-Data Collection Tool (极客之音)

From blog.sqlauthority.com

Big Data: Interacting with Hadoop. What is Sqoop? What is Zookeeper? Day 17 of 21

From www.scaler.com

Import and Export Command in Sqoop (Scaler Topics)

From programmerah.com

Sqoop Error: Can't parse input data '\N' [How to Solve] (ProgrammerAH)
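
The `'\N'` parse error named in this entry typically appears when exporting files written by Hive. Hive's text format stores NULL as the two characters `\N`, which Sqoop export does not treat as NULL unless told to. A commonly cited fix, sketched below under the assumption that the files use Hive's default text layout (Ctrl-A field separators), is to pass `--input-null-string` and `--input-null-non-string` along with `--input-fields-terminated-by`. The helper name, database, table, and warehouse path are hypothetical.

```python
import shlex

# Hive's text format writes NULL as the two characters \N. Shell examples
# usually pass '\\N' (single quotes keep both backslashes), so an argv built
# without a shell carries the same three characters: backslash, backslash, N.
HIVE_NULL = r"\\N"
HIVE_FIELD_DELIMITER = r"\001"  # octal escape for Hive's default Ctrl-A separator


def sqoop_export_hive_files(jdbc_url, user, password_file, table, export_dir):
    """Hypothetical helper: export argv that maps Hive's \\N markers to SQL NULL."""
    return [
        "sqoop", "export",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,
        "--table", table,
        "--export-dir", export_dir,
        "--input-fields-terminated-by", HIVE_FIELD_DELIMITER,
        "--input-null-string", HIVE_NULL,      # \N in string columns -> NULL
        "--input-null-non-string", HIVE_NULL,  # \N in numeric/date columns -> NULL
    ]


if __name__ == "__main__":
    argv = sqoop_export_hive_files(
        "jdbc:mysql://db.example.com/shop",  # hypothetical database
        "etl", "/user/etl/.db.password", "order_totals",
        "/user/hive/warehouse/order_totals",  # hypothetical Hive table location
    )
    print(shlex.join(argv))
```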

From www.projectpro.io

What are the different file formats supported in Sqoop?
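
The file-format question in this entry comes down to one import option per format. This is a minimal sketch, assuming Sqoop 1.4.x (Parquet output needs a recent 1.4.x release). `with_format` and `FORMAT_FLAGS` are hypothetical names. The four `--as-*` options are Sqoop's own, and delimited text is the default when none of them is given.

```python
FORMAT_FLAGS = {
    "text": "--as-textfile",          # delimited text (the default)
    "sequence": "--as-sequencefile",  # Hadoop SequenceFiles
    "avro": "--as-avrodatafile",      # Avro data files
    "parquet": "--as-parquetfile",    # Parquet files
}


def with_format(import_argv, fmt):
    """Return a copy of an import argv with the option for the chosen file format."""
    try:
        flag = FORMAT_FLAGS[fmt]
    except KeyError:
        raise ValueError(
            f"unsupported format {fmt!r}; choose one of {sorted(FORMAT_FLAGS)}"
        ) from None
    return [*import_argv, flag]


if __name__ == "__main__":
    base = ["sqoop", "import", "--table", "orders"]  # hypothetical table
    for fmt in FORMAT_FLAGS:
        print(" ".join(with_format(base, fmt)))
```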

From www.acte.in

Apache Sqoop: A Concise Tutorial in Just an Hour (ACTE)

From www.geeksforgeeks.org

Import and Export Data using SQOOP

From www.progress.com

Scoop Up Some Insider Knowledge on Apache Sqoop

From data-flair.training

Sqoop Validation: Interfaces & Limitations of Sqoop Validate (DataFlair)

From subscription.packtpub.com

Workings of Sqoop (Data Lake for Enterprises)

From blog.csdn.net

Some Basic Sqoop Operations: delete-target-dir (CSDN Blog)
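
This entry's topic is the closest thing Sqoop has to "deleting data". On the Hadoop side, `--delete-target-dir` makes an import remove its HDFS target directory before writing, so a re-run does not fail because the output directory already exists. On the database side, `sqoop eval` runs an SQL statement against the database, and that statement can be a `DELETE`. This is a minimal sketch. The helper names, tables, paths, and the WHERE condition are hypothetical.

```python
import shlex


def reimport_argv(jdbc_url, user, password_file, table, target_dir):
    """Hypothetical helper: import argv that first deletes the existing HDFS target."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,
        "--table", table,
        "--target-dir", target_dir,
        "--delete-target-dir",  # remove target_dir if it exists, then import afresh
    ]


def delete_rows_argv(jdbc_url, user, password_file, table, where_clause):
    """Hypothetical helper: `sqoop eval` argv that runs a DELETE on the database side."""
    # table and where_clause are interpolated verbatim: pass only trusted values.
    query = f"DELETE FROM {table} WHERE {where_clause}"
    return [
        "sqoop", "eval",
        "--connect", jdbc_url,
        "--username", user,
        "--password-file", password_file,
        "--query", query,
    ]


if __name__ == "__main__":
    url = "jdbc:mysql://db.example.com/shop"  # hypothetical database
    print(shlex.join(reimport_argv(url, "etl", "/user/etl/.db.password",
                                   "orders", "/data/orders")))
    print(shlex.join(delete_rows_argv(url, "etl", "/user/etl/.db.password",
                                      "staging_orders", "load_date < '2020-01-01'")))
```

Sqoop cannot undo a `DELETE` run through `sqoop eval`, so it is worth running the same condition in a `SELECT COUNT(*)` first.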

From bigdatansql.com

SQOOP Complete Tutorial Part 10 (Big Data and SQL)

From www.youtube.com

Sqoop Tutorial: Sqoop Architecture, Sqoop Commands, Sqoop Export (COSO IT, YouTube)

From techvidvan.com

Sqoop Validation: How Sqoop Validates Copied Data (TechVidvan)
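
Sqoop's `--validate` option checks a finished copy. According to the Sqoop user guide, the default validator compares row counts in the source and the target (`RowCountValidator`), and the default threshold requires them to match exactly (`AbsoluteValidationThreshold`). What happens on a mismatch depends on the configured failure handler, for example `AbortOnFailureHandler`. The Python sketch below reproduces only that decision logic so it can be reasoned about in isolation. `validate_copy`, `ValidationResult`, and `ValidationFailed` are hypothetical names, not Sqoop classes. In practice you would simply append `--validate` to an import or export command.

```python
import logging
from dataclasses import dataclass

log = logging.getLogger("copy_validation")


@dataclass(frozen=True)
class ValidationResult:
    source_rows: int
    target_rows: int

    @property
    def passed(self) -> bool:
        # Absolute threshold: the two row counts must be exactly equal.
        return self.source_rows == self.target_rows


class ValidationFailed(Exception):
    """Raised when counts differ and the caller asked to abort."""


def validate_copy(source_rows: int, target_rows: int, abort: bool = True) -> ValidationResult:
    """Compare row counts; on a mismatch either raise (abort) or log a warning."""
    result = ValidationResult(source_rows, target_rows)
    if not result.passed:
        message = f"row count mismatch: source={source_rows}, target={target_rows}"
        if abort:
            raise ValidationFailed(message)
        log.warning(message)
    return result


if __name__ == "__main__":
    print(validate_copy(1000, 1000).passed)
    print(validate_copy(1000, 998, abort=False).passed)
```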

From data-flair.training

Apache Sqoop Architecture: How Sqoop Works Internally (DataFlair)
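
Sqoop runs an import as a map-only MapReduce job. By default it splits the work on the table's primary key (or on the column given with `--split-by`), asks the database for that column's minimum and maximum, and gives each of the `--num-mappers` tasks one slice of the range as a WHERE condition. The sketch below shows only that range-division idea for an integer column. The function name `integer_splits` is hypothetical, and Sqoop's own splitter differs in boundary and rounding details.

```python
def integer_splits(lo, hi, num_mappers, column="id"):
    """Divide the inclusive range [lo, hi] of an integer split column into
    at most num_mappers contiguous, non-overlapping WHERE clauses."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    if hi < lo:  # empty range: nothing to split
        return []
    span = hi - lo + 1
    n = min(num_mappers, span)  # never create more splits than distinct values
    size, extra = divmod(span, n)
    clauses, start = [], lo
    for i in range(n):
        # The first `extra` splits each take one additional value.
        end = start + size - 1 + (1 if i < extra else 0)
        clauses.append(f"{column} >= {start} AND {column} <= {end}")
        start = end + 1
    return clauses


if __name__ == "__main__":
    # Pretend SELECT MIN(id), MAX(id) returned 1 and 10 (hypothetical values).
    for clause in integer_splits(1, 10, 4):
        print(clause)
```

With bounds 1 and 10 and four mappers, the slices are 1 to 3, 4 to 6, 7 to 8, and 9 to 10, so every row is read by exactly one map task.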

From www.researchgate.net

Apache SQOOP data import architecture (scientific diagram)

From blog.csdn.net

Installing Sqoop in a VM (CSDN Blog)

From blog.csdn.net

Basic Principles and Common Usage of Sqoop (CSDN Blog)

From www.hadooplessons.info

Hadoop Lessons: Importing data from RDBMS to Hadoop using Apache Sqoop

From www.youtube.com

Sqoop Introduction: SQOOP, Big Data, Data Engineer (YouTube)