Rdd Reducebykey Max . Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Actually you have a pairrdd. It is a wider transformation as. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Callable[[k], int] = ) →.

from sparkbyexamples.com

Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. It is a wider transformation as. Actually you have a pairrdd. Callable[[k], int] = ) →. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey:

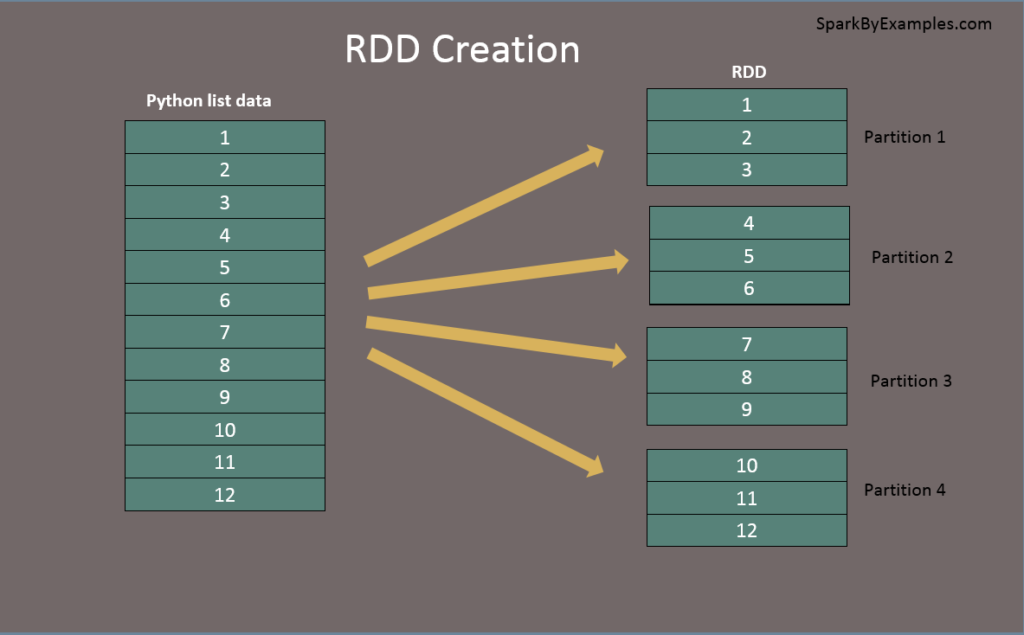

PySpark RDD Tutorial Learn with Examples Spark By {Examples}

Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. One of the best ways to do it is with reducebykey: Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. It is a wider transformation as. Callable[[k], int] = ) →. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Callable[[k], int] = ) →. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Actually you have a pairrdd. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function.

From blog.csdn.net

pyspark RDD reduce、reduceByKey、reduceByKeyLocally用法CSDN博客 Rdd Reducebykey Max One of the best ways to do it is with reducebykey: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ). Rdd Reducebykey Max.

From slidesplayer.com

《Spark编程基础》 教材官网: 第5章 RDD编程 (PPT版本号: 2018年2月) ppt download Rdd Reducebykey Max You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: One of the best ways to do it is with reducebykey: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and. Rdd Reducebykey Max.

From blog.csdn.net

理解RDD的reduceByKey与groupByKeyCSDN博客 Rdd Reducebykey Max Callable[[k], int] = ) →. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Callable[[k], int] = ) →. Actually you have a pairrdd. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key. Rdd Reducebykey Max.

From blog.csdn.net

groupByKey&reduceByKey_groupbykey和reducebykey 示例CSDN博客 Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and. Rdd Reducebykey Max.

From www.youtube.com

How to do Word Count in Spark Sparkshell RDD flatMap Rdd Reducebykey Max It is a wider transformation as. Callable[[k], int] = ) →. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Actually you have a pairrdd. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function. Rdd Reducebykey Max.

From www.youtube.com

大数据IMF传奇行动 第17课:RDD案例(join、cogroup、reduceByKey、groupByKey等) YouTube Rdd Reducebykey Max Actually you have a pairrdd. Callable[[k], int] = ) →. It is a wider transformation as. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) This code creates a. Rdd Reducebykey Max.

From www.youtube.com

Spark reduceByKey Or groupByKey YouTube Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. You can use the reducebykey () function to group the. Rdd Reducebykey Max.

From blog.csdn.net

RDD 中的 reducebyKey 与 groupByKey 哪个性能高?_rdd中reducebykey和groupbykey性能CSDN博客 Rdd Reducebykey Max One of the best ways to do it is with reducebykey: Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Pyspark rdd's reducebykey(~) method aggregates the. Rdd Reducebykey Max.

From blog.csdn.net

Spark RDD的flatMap、mapToPair、reduceByKey三个算子详解CSDN博客 Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: This code creates a pairrdd of (key, value) pairs, then uses. Rdd Reducebykey Max.

From blog.csdn.net

Spark大数据学习之路六 RDD的方法两大类转换和行动 10KVreduceByKeyCSDN博客 Rdd Reducebykey Max Callable[[k], int] = ) →. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Actually you have a pairrdd. You can use the reducebykey () function to group the values by key,. Rdd Reducebykey Max.

From www.youtube.com

Ch.0424 Demo GroupByKey Vs. ReduceByKey YouTube Rdd Reducebykey Max This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Callable[[k], int] = ) →. Pyspark rdd's reducebykey(~) method aggregates the. Rdd Reducebykey Max.

From www.youtube.com

What is reduceByKey and how does it work. YouTube Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: It is a wider transformation as. Actually you have a pairrdd. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Pyspark rdd's reducebykey(~) method aggregates the rdd data. Rdd Reducebykey Max.

From blog.csdn.net

大数据:spark RDD编程,构建,RDD算子,map,flatmap,reduceByKey,mapValues,groupBy Rdd Reducebykey Max Actually you have a pairrdd. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: Callable[[k], int] =. Rdd Reducebykey Max.

From zhuanlan.zhihu.com

RDD(二):RDD算子 知乎 Rdd Reducebykey Max Callable[[k], int] = ) →. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. Actually you have a pairrdd. It is a wider transformation as. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey. Rdd Reducebykey Max.

From blog.csdn.net

理解RDD的reduceByKey与groupByKeyCSDN博客 Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and. Rdd Reducebykey Max.

From proedu.co

Apache Spark RDD reduceByKey transformation Proedu Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Spark rdd reducebykey () transformation is used to merge. Rdd Reducebykey Max.

From blog.csdn.net

groupByKey&reduceByKey_groupbykey和reducebykey 示例CSDN博客 Rdd Reducebykey Max Callable[[k], int] = ) →. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Actually you have a pairrdd. It is a wider transformation as. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and. Rdd Reducebykey Max.

From aitechtogether.com

【Python】PySpark 数据计算 ③ ( RDDreduceByKey 函数概念 RDDreduceByKey 方法工作流程 Rdd Reducebykey Max Callable[[k], int] = ) →. It is a wider transformation as. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key.. Rdd Reducebykey Max.

From www.showmeai.tech

图解大数据 基于RDD大数据处理分析Spark操作 Rdd Reducebykey Max Actually you have a pairrdd. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. It is a wider transformation as. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Pyspark rdd's reducebykey(~) method aggregates the. Rdd Reducebykey Max.

From www.youtube.com

RDD Transformations groupByKey, reduceByKey, sortByKey Using Scala Rdd Reducebykey Max Callable[[k], int] = ) →. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max). Rdd Reducebykey Max.

From blog.csdn.net

Spark RDD/Core 编程 API入门系列 之rdd案例(map、filter、flatMap、groupByKey Rdd Reducebykey Max This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey. Rdd Reducebykey Max.

From www.youtube.com

53 Spark RDD PairRDD ReduceByKey YouTube Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. Callable[[k], int] = ) →. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey () function to group the. Rdd Reducebykey Max.

From www.youtube.com

065 尚硅谷 SparkCore 核心编程 RDD 转换算子 reduceByKey YouTube Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the. Rdd Reducebykey Max.

From sparkbyexamples.com

PySpark RDD Tutorial Learn with Examples Spark By {Examples} Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. Spark rdd reducebykey (). Rdd Reducebykey Max.

From www.analyticsvidhya.com

Spark Transformations and Actions On RDD Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. It is a wider transformation as. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Actually you have a pairrdd. This code creates a. Rdd Reducebykey Max.

From www.youtube.com

Difference between groupByKey() and reduceByKey() in Spark RDD API Rdd Reducebykey Max Actually you have a pairrdd. Callable[[k], int] = ) →. Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Callable[[k], int] = ) →. Minimum =. Rdd Reducebykey Max.

From www.youtube.com

RDD Advance Transformation And Actions groupbykey And reducebykey Rdd Reducebykey Max Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. One of the best ways to do it is with reducebykey: Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) You can use the reducebykey () function to group. Rdd Reducebykey Max.

From blog.csdn.net

spark03:RDD、map算子、flatMap算子、reduceByKey算子、mapValues算子、groupBy算子_map算子和 Rdd Reducebykey Max It is a wider transformation as. Callable[[k], int] = ) →. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and. Rdd Reducebykey Max.

From blog.csdn.net

RDD中groupByKey和reduceByKey区别_groupbykey reducebykey区别CSDN博客 Rdd Reducebykey Max Callable[[k], int] = ) →. One of the best ways to do it is with reducebykey: This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. You can use the reducebykey () function to group the values by key, then. Rdd Reducebykey Max.

From www.youtube.com

reduce vs reducebykey YouTube Rdd Reducebykey Max It is a wider transformation as. One of the best ways to do it is with reducebykey: Actually you have a pairrdd. Callable[[k], int] = ) →. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Minimum = rdd.reducebykey(min). Rdd Reducebykey Max.

From www.linkedin.com

28 reduce VS reduceByKey in Apache Spark RDDs Rdd Reducebykey Max Spark rdd reducebykey () transformation is used to merge the values of each key using an associative reduce function. Callable[[k], int] = ) →. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. It is a wider transformation as. One of the best ways to do it is with reducebykey: Minimum = rdd.reducebykey(min) maximum. Rdd Reducebykey Max.

From www.slideshare.net

Apache Spark KeyValue RDD Big Data Hadoop Spark Tutorial Rdd Reducebykey Max Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) It is a wider transformation as. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Callable[[k], int] = ) →. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for. Rdd Reducebykey Max.

From www.youtube.com

Databricks Spark RDD Difference between the reduceByKey vs Rdd Reducebykey Max Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. Actually you have a pairrdd. Callable[[k], int] = ) →. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. One of the best ways. Rdd Reducebykey Max.

From slideplayer.com

DataIntensive Distributed Computing ppt download Rdd Reducebykey Max Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. This code creates a pairrdd of (key, value) pairs, then uses the reducebykey function to group the values by key and find the minimum and maximum values for each key. Minimum = rdd.reducebykey(min) maximum = rdd.reducebykey(max) Callable[[k], int] = ) →. You can use the. Rdd Reducebykey Max.

From www.youtube.com

067 尚硅谷 SparkCore 核心编程 RDD 转换算子 groupByKey & reduceByKey的区别 YouTube Rdd Reducebykey Max It is a wider transformation as. You can use the reducebykey () function to group the values by key, then use the min and max functions on the resulting rdd: Callable[[k], int] = ) →. Pyspark rdd's reducebykey(~) method aggregates the rdd data by key, and perform a reduction operation. One of the best ways to do it is with. Rdd Reducebykey Max.