Two-Parameter Bayesian Inference

In this chapter, we illustrate Bayesian learning through several two-parameter problems. More generally, we will consider a situation in which the parameter vector \(\theta = (\theta_1, \theta_2)\) is partitioned into two components, each of which may itself be a vector. Often only one component is of direct interest. For example, in a normal model with unknown mean \(\mu\) and unknown variance \(\sigma^2\), when the goal is inference about \(\mu\), the parameter \(\sigma^2\) is called a nuisance parameter. It has to be in the model, but it is integrated out of the joint posterior given data \(y\), \(p(\theta_1 \mid y) = \int p(\theta_1, \theta_2 \mid y)\, d\theta_2\), rather than reported. Suppose we have data \(d_1\) that depend on parameter \(\theta_1\), and independent data \(d_2\) that depend on a …
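To make the nuisance-parameter idea concrete, here is a minimal grid-approximation sketch for a normal model with unknown mean \(\mu\) and standard deviation \(\sigma\). The data and the flat prior over the grid are illustrative assumptions, not taken from this text. The code evaluates the joint posterior on a grid of \((\mu, \sigma)\) pairs, then sums over \(\sigma\) to get the marginal posterior of \(\mu\), which is the discrete analogue of integrating out the nuisance parameter.

```python
import math

def normal_log_pdf(x, mu, sigma):
    """Log density of a Normal(mu, sigma^2) distribution at x."""
    return -0.5 * math.log(2 * math.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)

def grid_posterior(data, mu_grid, sigma_grid):
    """Joint posterior p(mu, sigma | data) on a grid.
    Assumption: a flat prior over the grid points, so posterior is proportional to likelihood."""
    log_post = {}
    for mu in mu_grid:
        for sigma in sigma_grid:
            log_post[(mu, sigma)] = sum(normal_log_pdf(x, mu, sigma) for x in data)
    # Subtract the maximum log value before exponentiating to avoid underflow.
    m = max(log_post.values())
    unnorm = {k: math.exp(v - m) for k, v in log_post.items()}
    total = sum(unnorm.values())
    return {k: v / total for k, v in unnorm.items()}

def marginal_mu(joint, mu_grid, sigma_grid):
    """Sum out the nuisance parameter: p(mu | data) = sum over sigma of p(mu, sigma | data)."""
    return {mu: sum(joint[(mu, s)] for s in sigma_grid) for mu in mu_grid}

data = [4.8, 5.1, 5.4, 4.9, 5.3]                  # hypothetical observations
mu_grid = [4.0 + 0.05 * i for i in range(41)]     # 4.0 .. 6.0
sigma_grid = [0.1 + 0.05 * j for j in range(39)]  # 0.1 .. 2.0

joint = grid_posterior(data, mu_grid, sigma_grid)
post_mu = marginal_mu(joint, mu_grid, sigma_grid)
best_mu = max(post_mu, key=post_mu.get)
print(f"posterior mode of mu on the grid: {best_mu:.2f}")
```

A grid is only practical for two or three parameters; the same marginalization step carries over to sampling methods, where one simply ignores the sampled \(\sigma\) values.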

from www.slideserve.com

We are going to introduce continuous variables and show how to elicit probability distributions, moving from a prior belief to a posterior distribution within the Bayesian framework. For a parameter with a finite set of candidate values, this update can be organized as a Bayes table. To start constructing it, answer two questions: What are the possible values of the parameter? What are the prior probabilities of those values? Each prior probability is then multiplied by the likelihood of the observed data, and the products are normalized to sum to 1, giving the posterior probabilities.
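The Bayes table steps above can be sketched in a few lines. This minimal example assumes a hypothetical coin-flipping problem that is not from the original text: the parameter is the probability of heads, restricted to five candidate values with a uniform prior, and the observed data are 7 heads in 10 flips.

```python
from math import comb

def bayes_table(values, priors, likelihood):
    """Return rows of (value, prior, likelihood, prior * likelihood, posterior)."""
    products = [p * likelihood(v) for v, p in zip(values, priors)]
    total = sum(products)  # marginal probability of the data
    return [
        (v, p, likelihood(v), prod, prod / total)
        for v, p, prod in zip(values, priors, products)
    ]

# Hypothetical data: 7 heads in 10 flips.
heads, flips = 7, 10
values = [0.1, 0.3, 0.5, 0.7, 0.9]        # possible values of the parameter
priors = [1 / len(values)] * len(values)  # uniform prior probabilities

def binomial_likelihood(theta):
    """P(7 heads in 10 flips | probability of heads = theta)."""
    return comb(flips, heads) * theta**heads * (1 - theta) ** (flips - heads)

table = bayes_table(values, priors, binomial_likelihood)
print(f"{'value':>6} {'prior':>6} {'like':>8} {'product':>8} {'post':>6}")
for v, p, lik, prod, post in table:
    print(f"{v:>6.1f} {p:>6.2f} {lik:>8.4f} {prod:>8.4f} {post:>6.3f}")
```

With a uniform prior the posterior simply rescales the likelihood column, so the largest posterior probability lands on 0.7; a non-uniform prior would shift that balance.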

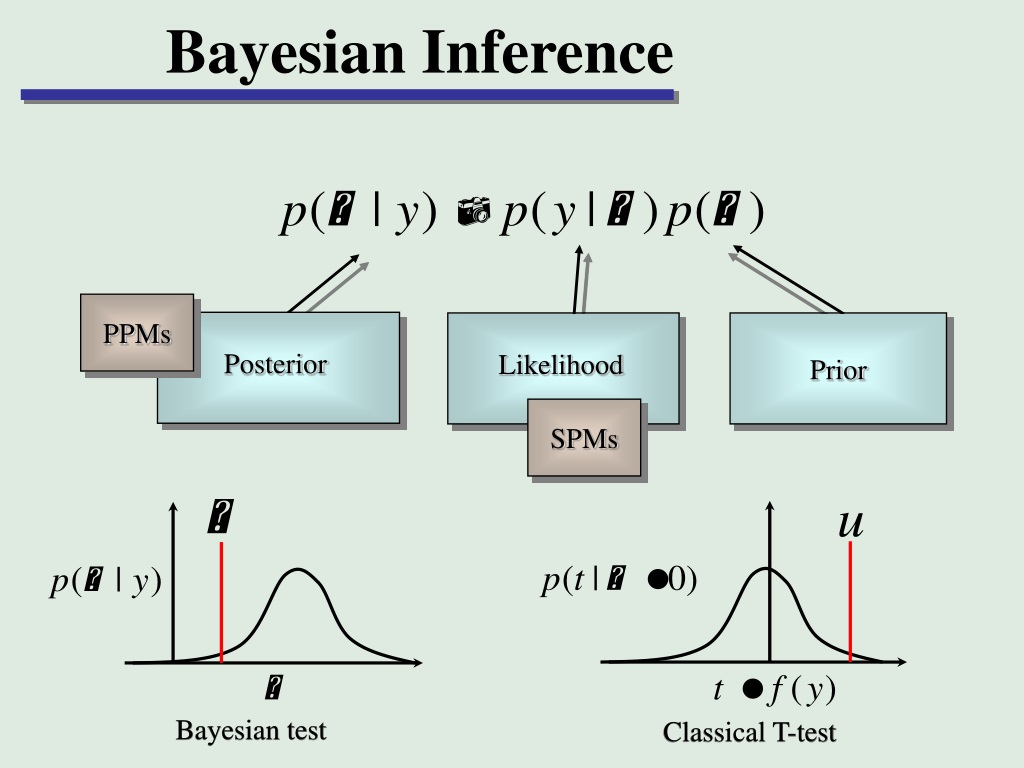

PPT Bayesian Inference and Posterior Probability Maps PowerPoint

This section describes how to set up a multiple linear regression model, how to specify prior distributions for the regression coefficients of multiple predictors, and how to make …

A related problem arises in machine learning. Consider a large, complex neural network that solves a classification problem. Its hyperparameters may be the number of hidden units, the number of hidden layers, and so on, and finding the optimal combination of hyperparameters for the network is itself a search problem.
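A minimal sketch of the regression setup described above, under simplifying assumptions that are ours rather than the text's: the noise variance \(\sigma^2\) is treated as known, and each coefficient gets an independent Normal(0, \(\tau^2\)) prior. With a Gaussian prior and a Gaussian likelihood, the posterior over the coefficient vector \(\beta\) is also Gaussian, with covariance \(\Sigma_n = (X^\top X/\sigma^2 + I/\tau^2)^{-1}\) and mean \(\mu_n = \Sigma_n X^\top y/\sigma^2\). The data are simulated, and the helper functions are plain-Python stand-ins for a linear-algebra library.

```python
import random

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def invert(m):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [v / p for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def posterior(X, y, sigma2, tau2):
    """Posterior mean and covariance of beta for y = X beta + noise.
    Assumptions: noise ~ N(0, sigma2 I) with sigma2 known; prior beta ~ N(0, tau2 I)."""
    Xt = transpose(X)
    k = len(X[0])
    xtx = matmul(Xt, X)
    precision = [[xtx[i][j] / sigma2 + (1.0 / tau2 if i == j else 0.0)
                  for j in range(k)] for i in range(k)]
    cov = invert(precision)
    Xty = matmul(Xt, [[v] for v in y])  # k x 1 column vector
    mean = [row[0] for row in matmul(cov, [[v[0] / sigma2] for v in Xty])]
    return mean, cov

random.seed(0)
true_beta = [1.0, 2.0, -0.5]  # simulated: intercept plus two predictor coefficients
X = [[1.0, random.gauss(0, 1), random.gauss(0, 1)] for _ in range(200)]
y = [sum(b * x for b, x in zip(true_beta, row)) + random.gauss(0, 0.5) for row in X]

mean, cov = posterior(X, y, sigma2=0.25, tau2=10.0)
print("posterior mean:", [round(m, 2) for m in mean])
print("posterior sd:  ", [round(cov[i][i] ** 0.5, 3) for i in range(3)])
```

As \(\tau^2\) grows the prior becomes weaker and the posterior mean approaches the ordinary least-squares estimate; a small \(\tau^2\) shrinks the coefficients toward zero.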

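For the neural-network hyperparameter search described above, the simplest baseline is an exhaustive grid search over candidate combinations. This sketch is illustrative only: `validation_score` is a hypothetical stand-in for "train the network and return validation accuracy", and the candidate values are arbitrary. Real training runs are expensive, which is what motivates smarter strategies such as Bayesian optimization.

```python
from itertools import product

def validation_score(hidden_units, hidden_layers):
    """Hypothetical stand-in for training a network and measuring validation accuracy.
    A smooth toy function that peaks at 64 hidden units and 2 hidden layers."""
    return 0.9 - 0.00002 * (hidden_units - 64) ** 2 - 0.01 * (hidden_layers - 2) ** 2

def grid_search(space, objective):
    """Evaluate every combination in `space`; return (best_params, best_score)."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for combo in product(*(space[n] for n in names)):
        params = dict(zip(names, combo))
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

space = {
    "hidden_units": [16, 32, 64, 128, 256],
    "hidden_layers": [1, 2, 3, 4],
}
best, score = grid_search(space, validation_score)
print(best, round(score, 4))
```

Here the grid needs 5 × 4 = 20 evaluations, and the count multiplies with every hyperparameter added, so grid search quickly becomes impractical when each evaluation means training a large network.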