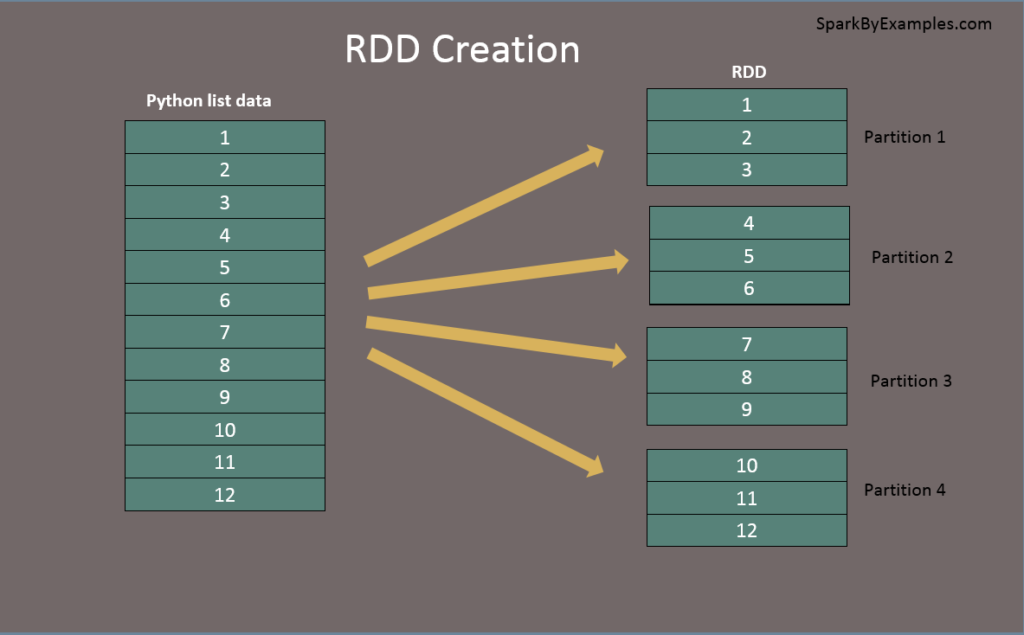

PySpark RDD Reduce Example

`RDD.reduce(f: Callable[[T, T], T]) → T` reduces the elements of this RDD using the specified commutative and associative binary operator. An RDD can be created in two ways: from an existing source or from an external source. Two types of operations can be applied to an RDD: transformations and actions. `reduce()` is an aggregate action, commonly used to calculate the min, max, and total of the elements in a dataset, and it can be used from both Java and Python. Apart from the driver-side processing, `reduce` relies on the same partition-level mechanism (`mapPartitions`) as the basic RDD operations: each partition is reduced on its own, and the partial results are then combined on the driver.
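The two-stage behaviour described above (reduce inside each partition, then combine the partial results on the driver) can be modelled in plain Python. This is a minimal sketch, not Spark's implementation: `split_into_partitions` and `simulated_reduce` are hypothetical helpers invented for illustration, and a Python list of lists stands in for an RDD's partitions. With a real RDD the equivalent call would be along the lines of `rdd.reduce(lambda a, b: a + b)`.

```python
from functools import reduce
from operator import add


def split_into_partitions(data, num_partitions):
    """Split a list into contiguous, roughly equal chunks (hypothetical helper)."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def simulated_reduce(partitions, func):
    """Model of a distributed reduce: fold each partition, then fold the partials."""
    # Stage 1 (executor side in Spark): reduce every non-empty partition on its own.
    partials = [reduce(func, part) for part in partitions if part]
    if not partials:
        raise ValueError("cannot reduce an empty collection")
    # Stage 2 (driver side in Spark): combine the per-partition results.
    return reduce(func, partials)


data = [5, 3, 8, 1, 9, 2]
two_parts = split_into_partitions(data, 2)    # [[5, 3, 8], [1, 9, 2]]
three_parts = split_into_partitions(data, 3)  # [[5, 3], [8, 1], [9, 2]]

# Commutative and associative functions give the same answer
# however the data is partitioned.
total = simulated_reduce(two_parts, add)        # 28
smallest = simulated_reduce(two_parts, min)     # 1
largest = simulated_reduce(three_parts, max)    # 9

# Subtraction is neither commutative nor associative, so the result
# depends on where the partition boundaries fall.
sub_two = simulated_reduce(two_parts, lambda a, b: a - b)      # 4
sub_three = simulated_reduce(three_parts, lambda a, b: a - b)  # -12
```

The last two lines show why the function passed to `reduce` is required to be commutative and associative: with subtraction, the same data yields 4 with two partitions and -12 with three.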

From sparkbyexamples.com

PySpark RDD Tutorial Learn with Examples Spark By {Examples}


From www.youtube.com

Create First RDD (Resilient Distributed Dataset) in PySpark PySpark 101 Part 2 DM

From data-flair.training

PySpark RDD With Operations and Commands DataFlair

From www.youtube.com

Pyspark RDD Operations Actions in Pyspark RDD Fold vs Reduce Glom() Pyspark tutorials

From azurelib.com

How to create an RDD in PySpark Azure Databricks?

From www.dataiku.com

How to use PySpark in Dataiku DSS Dataiku

From stackoverflow.com

PySpark (Python 2.7) How to flatten values after reduce Stack Overflow

From blog.csdn.net

pyspark RDD reduce, reduceByKey, reduceByKeyLocally usage (CSDN blog)

From sparkbyexamples.com

PySpark Convert DataFrame to RDD Spark By {Examples}

From www.analyticsvidhya.com

Spark Transformations and Actions On RDD

From www.youtube.com

Practical RDD action reduce in PySpark using Jupyter PySpark 101 Part 25 DM DataMaking

From www.youtube.com

RDD 2 RDD Operations In PySpark RDD Actions & Transformations YouTube


From sparkbyexamples.com

Convert PySpark RDD to DataFrame Spark By {Examples}

From sparkbyexamples.com

PySpark Random Sample with Example Spark By {Examples}

From blog.csdn.net

Python PySpark hands-on case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter, distinct, and sortBy methods, distributed cluster

From www.projectpro.io

PySpark RDD Cheat Sheet A Comprehensive Guide

From www.javatpoint.com

PySpark RDD javatpoint


From medium.com

Pyspark RDD. Resilient Distributed Datasets (RDDs)… by Muttineni Sai Rohith CodeX Medium

From scales.arabpsychology.com

PySpark Convert RDD To DataFrame (With Example)

From www.analyticsvidhya.com

Create RDD in Apache Spark using Pyspark Analytics Vidhya

From blog.51cto.com

[Python] PySpark data computation ④ (RDD filter method: filtering the elements of an RDD; RDD distinct method: deduplicating the elements of an RDD), 51CTO blog

From www.youtube.com

Pyspark Tutorial 5, RDD Actions, reduce, countbykey, countbyvalue, fold, variance, stats

From stackoverflow.com

pyspark Spark RDD Fault tolerant Stack Overflow

From www.youtube.com

PySpark Tutorial 3 PySpark RDD Tutorial PySpark with Python YouTube

From ittutorial.org

PySpark RDD Example IT Tutorial

From www.youtube.com

What is RDD in Spark? How to create RDD PySpark RDD Tutorial PySpark For Beginners Data

From sparkbyexamples.com

PySpark Create RDD with Examples Spark by {Examples}

From www.youtube.com

Spark DataFrame Intro & vs RDD PySpark Tutorial for Beginners YouTube


From www.youtube.com

Pyspark RDD Tutorial What Is RDD In Pyspark? Pyspark Tutorial For Beginners Simplilearn

From zhuanlan.zhihu.com

PySpark in Action 17: Extending PySpark with Python: RDDs and User-Defined Functions (1), Zhihu

From www.youtube.com

RDD Processing PySpark Big Data YouTube


From www.youtube.com

PySpark RDD Tutorial PySpark Tutorial for Beginners PySpark Online Training Edureka YouTube