Shuffle Partitions In Spark

This article is dedicated to one of the most fundamental processes in Spark: the shuffle. To understand what a shuffle actually is and when it occurs, we will first look at how Spark partitions data.

Partitioning in Spark improves performance by reducing the amount of data that has to be shuffled and by providing fast access to data. Choosing the right partitioning method is crucial and depends on factors such as numeric …

Shuffling is the process of exchanging data between partitions. As a result, data rows can move between worker nodes. Spark automatically triggers a shuffle when we perform aggregation and join operations on RDDs and DataFrames.

From the answer here, `spark.sql.shuffle.partitions` configures the number of partitions used when shuffling data for joins or aggregations in Spark SQL; its default value is 200. It applies to DataFrame and SQL operations, while RDD operations such as `reduceByKey` take their default partition count from `spark.default.parallelism` (if set) or from their parent RDDs, or accept an explicit count. While running Spark jobs, it’s important to monitor performance and adjust the number of shuffle partitions as needed.

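To make the shuffle concrete, here is a toy, pure-Python model (not Spark code) of a sum-by-key aggregation. Rows start out in arbitrary input partitions, and a hash partitioner routes every row to one of `num_partitions` output partitions, which plays the role that `spark.sql.shuffle.partitions` plays for a DataFrame aggregation. The helper names (`partition_for`, `shuffle`, `sum_by_key`) are hypothetical, and hashing with `zlib.crc32` is an assumption chosen for determinism; Spark SQL's hash partitioning actually uses a Murmur3 hash.

```python
"""Toy model of a hash-partitioned shuffle for a sum-by-key aggregation.

Simplifying assumptions (this is NOT Spark's implementation):
- partitions are plain Python lists of (key, value) tuples,
- the partitioner is zlib.crc32 of the key's string form modulo the
  partition count (Spark SQL uses Murmur3 hashing instead),
- worker nodes and network transfer are not modelled.
"""
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    # Deterministic hash, so the same key always maps to the same partition.
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def shuffle(input_partitions, num_partitions):
    # Map side: each row is routed to the output partition chosen by its key,
    # regardless of which input partition it started in.
    output = [[] for _ in range(num_partitions)]
    for part in input_partitions:
        for key, value in part:
            output[partition_for(key, num_partitions)].append((key, value))
    return output


def sum_by_key(input_partitions, num_partitions):
    # Reduce side: after the shuffle, all rows for a key share one partition,
    # so each output partition can be aggregated independently.
    results = []
    for part in shuffle(input_partitions, num_partitions):
        totals = defaultdict(int)
        for key, value in part:
            totals[key] += value
        results.append(dict(totals))
    return results


if __name__ == "__main__":
    data = [
        [("a", 1), ("b", 2)],             # input partition 0
        [("a", 3), ("c", 4)],             # input partition 1
        [("b", 5), ("c", 6), ("a", 7)],   # input partition 2
    ]
    out = sum_by_key(data, num_partitions=4)
    print(len(out))  # 4
    merged = {k: v for part in out for k, v in part.items()}
    print(sorted(merged.items()))  # [('a', 11), ('b', 7), ('c', 10)]
```

With three distinct keys and four output partitions, at least one output partition is necessarily empty, a small-scale version of what happens when the shuffle partition count is much larger than the data needs.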

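The claim that partitioning reduces shuffling can be illustrated with the same kind of toy model. The sketch below counts how many rows would have to move for a by-key aggregation: data spread round-robin has to move rows, while data already laid out by the same hash of the key moves none. All names here are hypothetical and the model ignores Spark's query planner; in Spark, the analogous saving comes from the planner reusing an existing hash partitioning on the same key (for example, a bucketed table) instead of adding another exchange.

```python
"""Toy comparison: rows moved by a by-key shuffle, unpartitioned vs pre-partitioned.

Assumption: a crc32-based hash partitioner stands in for Spark's, and the
input and output partition counts are equal.
"""
import zlib


def partition_for(key, num_partitions):
    # Stable hash of the key modulo the partition count.
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def round_robin(rows, num_partitions):
    # Spread rows evenly with no regard to their keys.
    parts = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        parts[i % num_partitions].append(row)
    return parts


def prepartition(rows, num_partitions):
    # Lay rows out by key up front, using the same partitioner as the shuffle.
    parts = [[] for _ in range(num_partitions)]
    for key, value in rows:
        parts[partition_for(key, num_partitions)].append((key, value))
    return parts


def rows_moved(input_partitions):
    # Count rows whose target partition differs from the one they sit in now.
    n = len(input_partitions)
    moved = 0
    for index, part in enumerate(input_partitions):
        for key, _ in part:
            if partition_for(key, n) != index:
                moved += 1
    return moved


if __name__ == "__main__":
    rows = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5), ("c", 6), ("a", 7)]
    print(rows_moved(round_robin(rows, 3)))   # at least 1; exact value depends on the hash
    print(rows_moved(prepartition(rows, 3)))  # 0
```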

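Adjusting the shuffle partition count is often done with a size-based rule of thumb. The helper below is a hypothetical sketch, assuming you have read a stage's total shuffle size from the Spark UI (its Shuffle Read or Shuffle Write column) and that roughly 128 MiB per partition is a reasonable target; both that target and the rounding to a multiple of the core count are assumptions to tune, not Spark defaults. The resulting value can be applied at runtime in PySpark with `spark.conf.set("spark.sql.shuffle.partitions", n)`. On Spark 3.2 and later, Adaptive Query Execution is enabled by default and can coalesce small shuffle partitions at runtime, so the configured value then acts mainly as the initial partition count.

```python
import math


def suggest_shuffle_partitions(shuffle_bytes, target_partition_bytes=128 * 1024**2,
                               total_cores=None):
    """Hypothetical rule-of-thumb estimate, not a formula used by Spark itself.

    shuffle_bytes: total shuffle size observed for the stage (assumed input).
    target_partition_bytes: desired data per shuffle partition (assumed 128 MiB).
    total_cores: optional executor core count to round up to.
    """
    if shuffle_bytes < 0 or target_partition_bytes <= 0:
        raise ValueError("sizes must be non-negative and the target positive")
    # Enough partitions that none exceeds the target size, and at least one.
    count = max(1, math.ceil(shuffle_bytes / target_partition_bytes))
    if total_cores:
        # Round up to a multiple of the cores so every wave of tasks fills the cluster.
        count = math.ceil(count / total_cores) * total_cores
    return count


if __name__ == "__main__":
    print(suggest_shuffle_partitions(50 * 1024**3))                  # 400
    print(suggest_shuffle_partitions(50 * 1024**3, total_cores=64))  # 448
```

For example, a 50 GiB shuffle with the 128 MiB target gives 400 partitions, and rounding up to a multiple of 64 cores gives 448.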
Spark tip Disable Coalescing Post Shuffle Partitions for compute

Shuffle Partitions In Spark Shuffling is the process of exchanging data between partitions. Shuffling is the process of exchanging data between partitions. As a result, data rows can move between worker nodes. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Choosing the right partitioning method is crucial and depends on factors such as numeric. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. This article is dedicated to one of the most fundamental processes in spark — the shuffle. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed.

From duanmeng.github.io

Spark Shuffle Nonamateur First Look Shuffle Partitions In Spark From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. As a result, data rows can move between worker nodes. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as. Shuffle Partitions In Spark.

From www.openkb.info

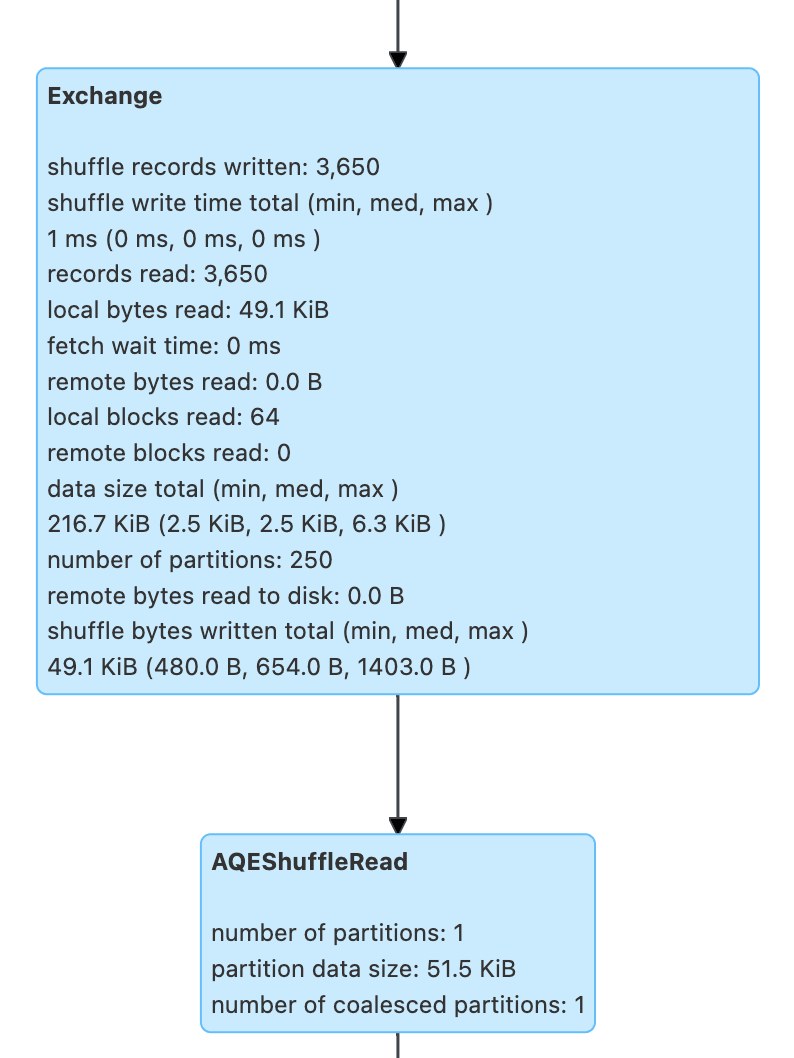

Spark Tuning Adaptive Query Execution(1) Dynamically coalescing Shuffle Partitions In Spark To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. This article is dedicated to one of the most fundamental processes in spark — the shuffle. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling. Shuffle Partitions In Spark.

From anhcodes.dev

Spark working internals, and why should you care? Shuffle Partitions In Spark To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. As a result, data rows can move between worker nodes. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. Choosing. Shuffle Partitions In Spark.

From dev.to

Spark tip Disable Coalescing Post Shuffle Partitions for compute Shuffle Partitions In Spark As a result, data rows can move between worker nodes. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. This article is dedicated to one of the most fundamental processes in spark — the shuffle. Spark.sql.shuffle.partitions determines. Shuffle Partitions In Spark.

From techvidvan.com

Apache Spark Partitioning and Spark Partition TechVidvan Shuffle Partitions In Spark From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. This article is dedicated to one of the most fundamental processes in spark. Shuffle Partitions In Spark.

From 0x0fff.com

Spark Architecture Shuffle Distributed Systems Architecture Shuffle Partitions In Spark Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. As a result, data rows can move between worker nodes. Shuffling is the process of exchanging data between partitions. This article is dedicated to one of the most fundamental processes in spark — the shuffle. To understand what a shuffle actually is. Shuffle Partitions In Spark.

From www.linuxeden.com

Adaptive Execution 让 Spark SQL 更高效更好用Linuxeden开源社区 Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Choosing the right partitioning method is crucial and depends on factors such as numeric. Shuffling is the process of exchanging data between partitions. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. Spark automatically. Shuffle Partitions In Spark.

From kyuubi.readthedocs.io

How To Use Spark Adaptive Query Execution (AQE) in Kyuubi — Apache Kyuubi Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. Choosing the right partitioning method is crucial and depends on factors such as numeric. Spark automatically triggers the shuffle when we perform aggregation and join. Shuffle Partitions In Spark.

From www.confessionsofadataguy.com

"Don't mess with the dials," they said. Spark (PySpark) Shuffle Shuffle Partitions In Spark Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. As a result, data rows can move between worker nodes. To understand what a shuffle actually is and when it occurs, we will firstly look at the. Shuffle Partitions In Spark.

From zhuanlan.zhihu.com

Spark提高shuffle操作的并行度 知乎 Shuffle Partitions In Spark As a result, data rows can move between worker nodes. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Choosing the right partitioning method is crucial and depends on factors such as numeric. Shuffling is the process of exchanging data between partitions. Partitioning in spark improves performance by reducing data shuffle and providing. Shuffle Partitions In Spark.

From anhcodes.dev

Spark working internals, and why should you care? Shuffle Partitions In Spark Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Spark automatically triggers the shuffle when we. Shuffle Partitions In Spark.

From spark-internals.books.yourtion.com

Shuffle过程 Apache Spark 的设计与实现 Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. To understand what a shuffle actually is and when it occurs,. Shuffle Partitions In Spark.

From slideplayer.com

Building Data Processing Pipelines with Spark at Scale ppt download Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. To understand what a shuffle actually is and when it occurs, we. Shuffle Partitions In Spark.

From anhcodes.dev

Spark working internals, and why should you care? Shuffle Partitions In Spark Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. To understand what a shuffle actually is and when it occurs, we. Shuffle Partitions In Spark.

From slideplayer.com

Spark Programming By J. H. Wang May 9, ppt download Shuffle Partitions In Spark This article is dedicated to one of the most fundamental processes in spark — the shuffle. Choosing the right partitioning method is crucial and depends on factors such as numeric. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. While running spark jobs, it’s important to monitor the performance and adjust. Shuffle Partitions In Spark.

From www.youtube.com

Basics of Apache Spark Shuffle Partition [200] learntospark YouTube Shuffle Partitions In Spark As a result, data rows can move between worker nodes. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Shuffling is the process of exchanging data between partitions. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. While running spark jobs, it’s important to. Shuffle Partitions In Spark.

From sivaprasad-mandapati.medium.com

Spark Joins Tuning Part2(Shuffle Partitions,AQE) by Sivaprasad Shuffle Partitions In Spark Shuffling is the process of exchanging data between partitions. This article is dedicated to one of the most fundamental processes in spark — the shuffle. As a result, data rows can move between worker nodes. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Choosing the right partitioning method is crucial and. Shuffle Partitions In Spark.

From sharkdtu.github.io

Spark Shuffle原理及相关调优 守护之鲨 Shuffle Partitions In Spark As a result, data rows can move between worker nodes. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. Choosing the right partitioning method is crucial and depends on factors such as numeric. This article is dedicated to one of the most fundamental processes in spark — the shuffle. From the. Shuffle Partitions In Spark.

From www.reddit.com

Are Spark shuffle partitions differed in 3.2 and 3.1 spark version? r Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. This article is dedicated to one of the most fundamental processes in spark — the shuffle. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. To understand what a shuffle actually is and when it occurs,. Shuffle Partitions In Spark.

From www.coursera.org

Shuffle Partitions Spark Core Concepts Coursera Shuffle Partitions In Spark As a result, data rows can move between worker nodes. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. To understand what a shuffle actually is and when it occurs, we will firstly look at the. Shuffle Partitions In Spark.

From www.dezyre.com

How Data Partitioning in Spark helps achieve more parallelism? Shuffle Partitions In Spark This article is dedicated to one of the most fundamental processes in spark — the shuffle. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. As a result, data rows can move between worker nodes. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in. Shuffle Partitions In Spark.

From www.pinterest.com

a diagram that shows how to use the shuffle Shuffle Partitions In Spark Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Shuffling is the process of exchanging data between partitions. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. To understand. Shuffle Partitions In Spark.

From 0x0fff.com

Spark Architecture Shuffle Distributed Systems Architecture Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Shuffling is the process of exchanging data between partitions. Spark.sql.shuffle.partitions determines. Shuffle Partitions In Spark.

From www.youtube.com

TechAway 24 Spark Deep Dive Partition & Shuffle (by Romain) YouTube Shuffle Partitions In Spark This article is dedicated to one of the most fundamental processes in spark — the shuffle. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that. Shuffle Partitions In Spark.

From anhcodes.dev

Debug long running Spark job Shuffle Partitions In Spark As a result, data rows can move between worker nodes. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Choosing the right partitioning method is crucial and depends on factors such as numeric. Spark.sql.shuffle.partitions determines the. Shuffle Partitions In Spark.

From www.upscpdf.in

spark.sql.shuffle.partitions UPSCPDF Shuffle Partitions In Spark To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Shuffling is the process of exchanging data between. Shuffle Partitions In Spark.

From datacook.tistory.com

Spark Partitions Tuning / Part 02 DataCook Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Choosing the right partitioning method is crucial and depends on factors such as numeric. Shuffling is the process of exchanging data between partitions. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. This article is dedicated. Shuffle Partitions In Spark.

From www.waitingforcode.com

What's new in Apache Spark 3.0 shuffle partitions coalesce on Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. Choosing the right partitioning method is crucial and depends on factors such as numeric. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. This article is dedicated to one of the most fundamental processes in spark. Shuffle Partitions In Spark.

From www.youtube.com

Shuffle Partition Spark Optimization 10x Faster! YouTube Shuffle Partitions In Spark Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. Choosing the right partitioning method is crucial and depends on factors. Shuffle Partitions In Spark.

From toge510.com

【Apache Spark】Shuffle Partitionシャッフルパーティションの最適設定値とは? と〜げのブログ Shuffle Partitions In Spark While running spark jobs, it’s important to monitor the performance and adjust the shuffle partitions as needed. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. This article is dedicated to one of the most fundamental processes in spark — the shuffle. Shuffling is the process of exchanging data between partitions. To understand. Shuffle Partitions In Spark.

From www.confessionsofadataguy.com

"Don't mess with the dials," they said. Spark (PySpark) Shuffle Shuffle Partitions In Spark Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark sql. As a result, data rows can move between worker nodes. To understand what a shuffle actually is and when it occurs, we will firstly look at. Shuffle Partitions In Spark.

From sparkbyexamples.com

Spark SQL Shuffle Partitions Spark By {Examples} Shuffle Partitions In Spark This article is dedicated to one of the most fundamental processes in spark — the shuffle. From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. Shuffling is the process of exchanging data between partitions. Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. Spark.sql.shuffle.partitions determines the. Shuffle Partitions In Spark.

From sparkbyexamples.com

Difference between spark.sql.shuffle.partitions vs spark.default Shuffle Partitions In Spark From the answer here, spark.sql.shuffle.partitions configures the number of partitions that are used when shuffling data. This article is dedicated to one of the most fundamental processes in spark — the shuffle. As a result, data rows can move between worker nodes. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark.. Shuffle Partitions In Spark.

From www.gangofcoders.net

How does Spark partition(ing) work on files in HDFS? Gang of Coders Shuffle Partitions In Spark Shuffling is the process of exchanging data between partitions. As a result, data rows can move between worker nodes. This article is dedicated to one of the most fundamental processes in spark — the shuffle. Partitioning in spark improves performance by reducing data shuffle and providing fast access to data. From the answer here, spark.sql.shuffle.partitions configures the number of partitions. Shuffle Partitions In Spark.

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna Shuffle Partitions In Spark Spark automatically triggers the shuffle when we perform aggregation and join operations on rdd and dataframe. To understand what a shuffle actually is and when it occurs, we will firstly look at the spark. Shuffling is the process of exchanging data between partitions. Spark.sql.shuffle.partitions determines the number of partitions to use when shuffling data for joins or aggregations in spark. Shuffle Partitions In Spark.