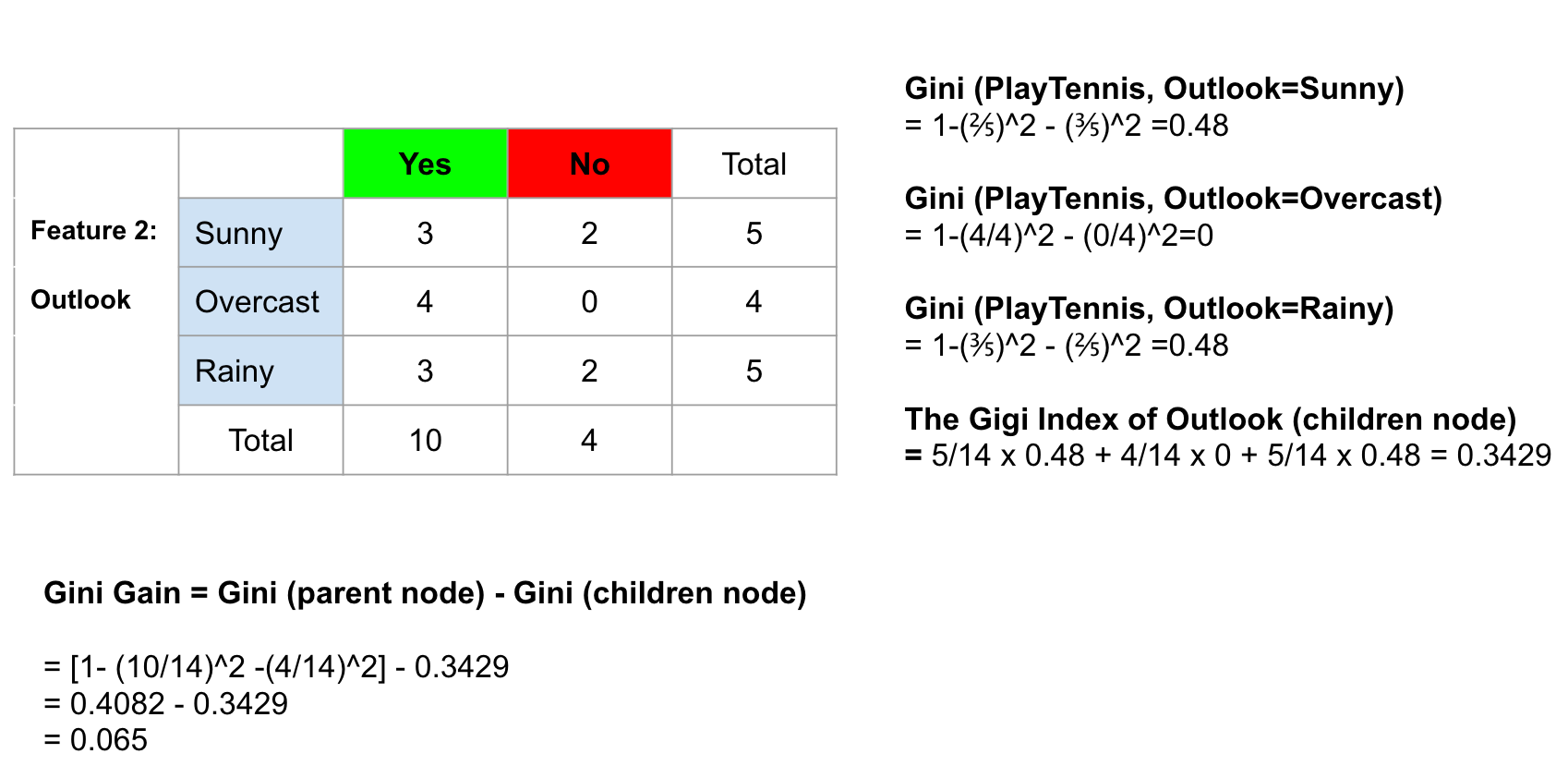

Gini Index Sklearn. The Gini index, also known as Gini impurity, is a metric that measures how often a randomly chosen element would be incorrectly identified, that is, the probability of a randomly chosen element being incorrectly classified. An attribute with a lower Gini index should therefore be preferred when choosing a split. In scikit-learn, the `criterion` parameter ({"gini", "entropy", "log_loss"}, default="gini") is the function used to measure the quality of a split. The importance of a feature is computed as the (normalized) total reduction of the criterion brought by that feature; it is also known as the Gini importance. Read more in the scikit-learn user guide. Gini impurity can be used to determine the best feature for splitting in a decision tree with scikit-learn. In this tutorial, you covered a lot of details about decision trees: how they work and attribute selection measures such as information gain, gain ratio, and Gini index.
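To make the "lower Gini index is preferred" rule concrete, here is a minimal sketch in plain Python. It assumes the usual definition of Gini impurity, 1 minus the sum of squared class proportions, and scores each candidate feature by the size-weighted impurity of the groups it produces. The helper names (`gini_impurity`, `weighted_gini`) and the toy weather data are hypothetical, made up for illustration. The sketch splits a categorical feature into one group per value, whereas scikit-learn itself builds binary splits on numeric thresholds.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity 1 - sum(p_k ** 2) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def weighted_gini(rows, labels, feature):
    """Size-weighted Gini impurity of the groups obtained by splitting on `feature`."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    n = len(labels)
    return sum(len(group) / n * gini_impurity(group) for group in groups.values())


# Hypothetical toy data: two categorical features and a binary target.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "stay", "stay"]

# Score every feature; the one with the lowest weighted impurity is preferred.
scores = {feature: weighted_gini(rows, labels, feature) for feature in ("outlook", "windy")}
best_feature = min(scores, key=scores.get)

print(scores)
print("best feature:", best_feature)
```

In this toy data `outlook` separates the two classes perfectly, so its weighted impurity is lower than that of `windy` and it is the preferred split.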

from www.kdnuggets.com

Decision Tree Intuition From Concept to Application KDnuggets

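The `criterion` parameter and the feature importance described above can be tried on a small example. The sketch below assumes scikit-learn and NumPy are installed; the arrays `X` and `y` are made-up toy data, constructed so that the first feature separates the classes perfectly and the second does not. It uses `DecisionTreeClassifier` with `criterion="gini"` and reads the fitted `feature_importances_` and `tree_` attributes.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy data: column 0 separates the classes, column 1 is noise.
X = np.array([[0, 5], [0, 3], [0, 8], [1, 4], [1, 7], [1, 2]])
y = np.array([0, 0, 0, 1, 1, 1])

# criterion="gini" is the default; it is spelled out here for clarity.
clf = DecisionTreeClassifier(criterion="gini", random_state=0)
clf.fit(X, y)

# Gini impurity of the root node (three samples per class, so 0.5 is expected).
root_impurity = clf.tree_.impurity[0]

# Index of the feature chosen for the root split.
root_feature = clf.tree_.feature[0]

# Normalized total impurity reduction per feature (the "Gini importance").
importances = clf.feature_importances_

print("root impurity:", root_impurity)
print("root split feature:", root_feature)
print("feature importances:", importances)
```

Because the importances are normalized, they sum to 1. Here the whole impurity reduction comes from the first feature.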

From www.datacamp.com

Python Decision Tree Classification Tutorial ScikitLearn

From www.studyiq.com

Gini Coefficient, Definition, Formula, Importance, Calculation

From blog.binomoidr.com

Understanding Gini Index and its Relevance Across the World

From www.kdnuggets.com

Decision Tree Intuition From Concept to Application KDnuggets

From urbanage.lsecities.net

Sources and notes

From altax.al

Change of tax rates and GINI index (inequality in national

From pintu.co.id

Income Distribution: Definition, Types, and Measurement (Pintu Blog)

From www.researchgate.net

Feature importance based on Gini index Download Scientific Diagram

From hxechwvhx.blob.core.windows.net

Gini Index Python Sklearn at Stacie Fox blog

From savingking.com.tw

Python Machine Learning: Decision Tree (DecisionTreeClassifier) ; from sklearn.tree import

From ourworldindata.org

Measuring inequality what is the Gini coefficient? Our World in Data

From energyeducation.ca

Gini coefficient Energy Education

From www.researchgate.net

The Lorenz curve and the Gini index. Download Scientific Diagram

From codistwa.com

What's the Gini index for machine learning?

From atelier-yuwa.ciao.jp

Gini Index Calculation Example atelieryuwa.ciao.jp

From sketchplanations.com

The Gini coefficient Sketchplanations

From investguiding.com

Gini Index Explained and Gini Coefficients Around the World (2024)

From www.researchgate.net

The Gini Index equals this formula with areas A and B. Gini Index = A

From medium.com

Calculating Gini Coefficient in BigQuery with SQL by Evgeny Medvedev

From www.researchgate.net

Gini indexes. If equal distribution 0.610. If equal distribution

From www.studocu.com

Gini Coefficient Definition: The Gini ratio was [...] by the Italian

From invatatiafaceri.ro

Gini Index Explained and Gini Coefficients Around the World

From www.malaymail.com

Putting the Gini back in the bottle — Rais Hussin Malay Mail

From www.researchgate.net

Box plots of the two Gini indexes employed in the study (LHS Gini_Disp

From ocw.tudelft.nl

2.2.1 Measuring inequality with the Gini index TU Delft OCW

From www.learndatasci.com

Gini Impurity LearnDataSci

From www.researchgate.net

Lorenz curve and Gini index for local oligarchy’s Source

From ourworldindata.org

inequality Gini coefficient Our World in Data

From www.slideserve.com

PPT The Gini Index PowerPoint Presentation, free download ID355591

From www.researchgate.net

Interpretation of the Gini Index Download Scientific Diagram

From marketbusinessnews.com

What is the Gini Index? What does it measure? Market Business News

From camera.edu.vn

Update 133+ gini and jony logo best camera.edu.vn

From hyperskill.org

Gini index · Hyperskill