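Markov Chain Matrix Example

What is a Markov chain? A Markov chain is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. A Markov process is a random process for which the future (the next step) depends only on the present state, not on how that state was reached.

The Markov chain is the process $X_0, X_1, X_2, \ldots$. The state of a Markov chain at time $t$ is the value of $X_t$; for example, if $X_t = i$, the chain is in state $i$ at time $t$. A standard example is the simple random walk on $\mathbb{Z}^d$, whose transition probabilities are $P(x, x \pm e_i) = \frac{1}{2d}$ for all $x \in \mathbb{Z}^d$, where $e_i$ is the $i$-th unit vector.

For a Markov chain with $k$ states, the state vector for an observation period $n$ is a column vector $x^{(n)} = (x_1, x_2, \ldots, x_k)^T$, where $x_i$ is the probability that the system is in state $i$ at the time of that observation. Two tasks recur in Markov chain problems: writing the transition matrix, and using the transition matrix together with the initial state vector to find the state vector at a later observation period.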
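To make the last task concrete, here is a minimal sketch of how a state vector can be advanced with a transition matrix. The two-state matrix `P` and the initial vector `x0` are made-up values for illustration, and the helper names `step` and `state_after` are hypothetical. Because the text treats the state vector as a column vector, the sketch assumes a column-stochastic matrix (each column sums to 1) and the update $x^{(n+1)} = P\,x^{(n)}$; many texts use the transposed, row-vector convention instead.

```python
def step(P, x):
    """Multiply matrix P (a list of rows) by column vector x."""
    return [sum(P[i][j] * x[j] for j in range(len(x))) for i in range(len(P))]


def state_after(P, x0, n):
    """Apply the transition matrix n times to the initial state vector x0."""
    x = list(x0)
    for _ in range(n):
        x = step(P, x)
    return x


# Hypothetical 2-state chain (assumed values, not taken from the text).
# Column j lists the probabilities of moving from state j to each state,
# so every column sums to 1.
P = [[0.9, 0.5],
     [0.1, 0.5]]

# Start in state 0 with certainty.
x0 = [1.0, 0.0]

x1 = state_after(P, x0, 1)  # distribution after one transition
x2 = state_after(P, x0, 2)  # distribution after two transitions
print(x1, x2)
```

Each resulting vector is again a probability distribution over the states, so its entries should sum to 1; checking that sum is a quick way to catch a matrix written in the wrong orientation.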

From www.chegg.com

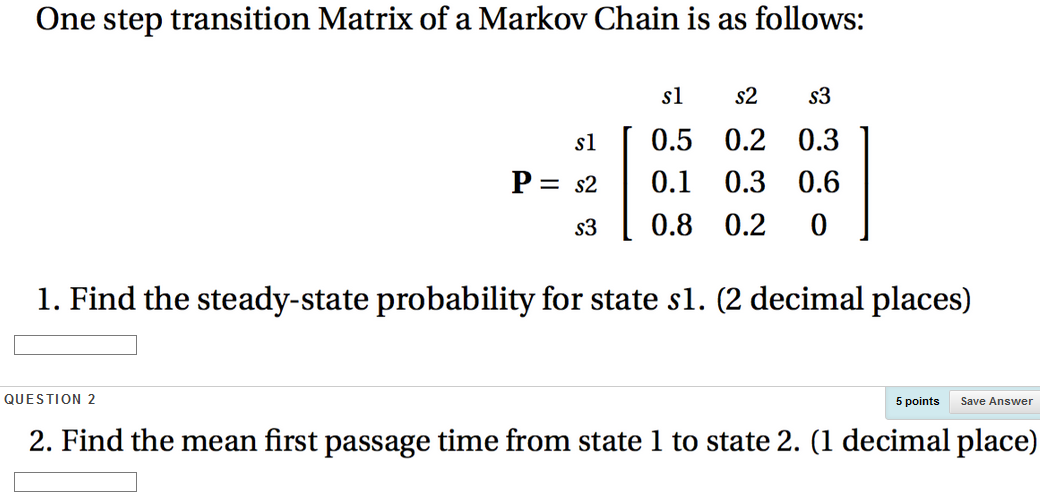

Solved One step transition Matrix of a Markov Chain is as

From www.chegg.com

Solved Let {X_n} Be A Markov Chain With The Following Tra...

From www.pdfprof.com

Chaîne de Markov, matrice de transition (Markov chain, transition matrix)

From www.researchgate.net

(PDF) Markov chain model for multimodal biometric rank fusion

From www.chegg.com

Solved Consider The Markov Chain With Transition Matrix A...

From www.researchgate.net

Transition matrix of the 2D Markov chain shown in Fig. 3. Download Scientific Diagram

From www.analyticsvidhya.com

A Comprehensive Guide on Markov Chain Analytics Vidhya

From www.gaussianwaves.com

Implementing Markov Chain in Python GaussianWaves

From www.youtube.com

Markov Chains nstep Transition Matrix Part 3 YouTube

From www.geeksforgeeks.org

Finding the probability of a state at a given time in a Markov chain Set 2

From kim-hjun.medium.com

Markov Chain & Stationary Distribution by Kim Hyungjun Medium

From www.thoughtco.com

Definition and Example of a Markov Transition Matrix

From www.chegg.com

Solved Determine The Limiting Distribution For The Markov...

From medium.com

Demystifying Markov Clustering. Introduction to markov clustering… by Anurag Kumar Mishra

From www.coursehero.com

[Solved] Chain irreplaceable samples Let P be the transition matrix of a... Course Hero

From www.slideserve.com

PPT A Revealing Introduction to Hidden Markov Models PowerPoint Presentation ID2118545

From www.slideserve.com

PPT a tutorial on Markov Chain Monte Carlo (MCMC) PowerPoint Presentation ID437800

From www.youtube.com

Matrix Limits and Markov Chains YouTube

From www.youtube.com

Steadystate probability of Markov chain YouTube

From brilliant.org

Markov Chains Brilliant Math & Science Wiki

From andrewjmoodie.com

Markov Chain stratigraphic model Andrew J. Moodie

From www.slideserve.com

PPT Bayesian Methods with Monte Carlo Markov Chains II PowerPoint Presentation ID6581146

From www.gaussianwaves.com

Markov Chains Simplified !! GaussianWaves

From www.shiksha.com

Markov Chain Types, Properties and Applications Shiksha Online

From www.slideserve.com

PPT Markov Chain Models PowerPoint Presentation, free download ID6262293

From www.youtube.com

Finite Math Markov Transition Diagram to Matrix Practice YouTube

From www.slideserve.com

PPT Markov Chains PowerPoint Presentation, free download ID6008214

From www.slideserve.com

PPT Day 3 Markov Chains PowerPoint Presentation, free download ID5692743

From www.researchgate.net

Network Markov Chain Representation denoted as N k . This graph... Download Scientific Diagram

From towardsdatascience.com

Markov models and Markov chains explained in real life probabilistic workout routine by

From www.chegg.com

Solved One step transition Matrix of a Markov Chain is as

From www.youtube.com

(ML 18.5) Examples of Markov chains with various properties (part 2) YouTube

From www.researchgate.net

Markov chains a, Markov chain for L = 1. States are represented by... Download Scientific Diagram

From www.chegg.com

Solved Consider The Markov Chain With Transition Matrix W...

From www.youtube.com

MARKOV CHAINS Equilibrium Probabilities YouTube

From youtube.com

Finite Math Markov Chain SteadyState Calculation YouTube