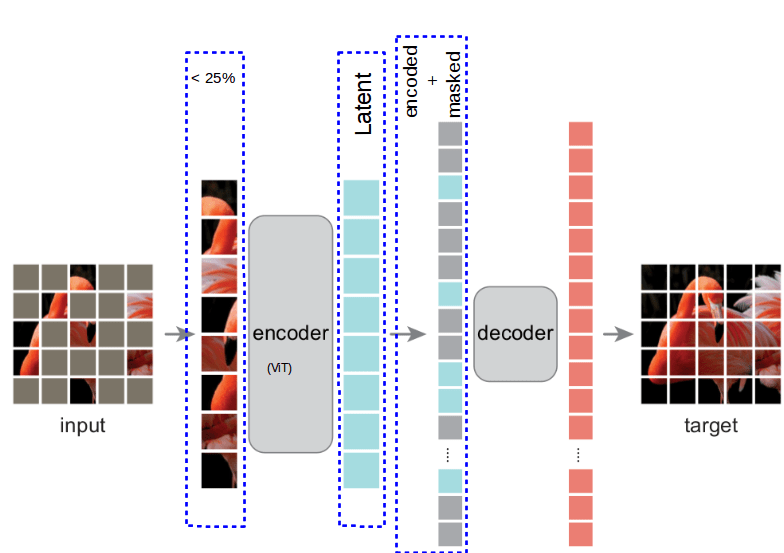

Masked Autoencoder (MAE), Facebook. Our MAE approach is simple: we mask random patches of the input image and reconstruct the missing pixels. It is based on two core designs. This repo hosts the code and models of Masked Autoencoders that Listen [NeurIPS 2022]. This paper studies a conceptually simple extension of Masked Autoencoders (MAE) to spatiotemporal representation learning.
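The idea of masking random patches and reconstructing the missing pixels can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the code from any of the repositories mentioned here: the helper names (`patchify`, `random_mask`, `masked_mse`), the 8-pixel patch size, the 0.75 mask ratio, and the choice to score reconstruction only on masked patches are all assumptions made for the example, and the all-zeros "prediction" stands in for a real model.

```python
import numpy as np


def patchify(image, patch_size):
    """Split an (H, W, C) image into non-overlapping, flattened patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (gh, gw, p, p, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)


def random_mask(num_patches, mask_ratio, rng):
    """Return a boolean array that is True for the randomly masked patches."""
    num_masked = int(round(num_patches * mask_ratio))
    order = rng.permutation(num_patches)
    mask = np.zeros(num_patches, dtype=bool)
    mask[order[:num_masked]] = True
    return mask


def masked_mse(pred, target, mask):
    """Mean squared pixel error, computed over masked patches only (assumption)."""
    return float(np.mean((pred[mask] - target[mask]) ** 2))


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))            # toy input image
patches = patchify(image, patch_size=8)    # 16 patches of 8*8*3 values
mask = random_mask(len(patches), mask_ratio=0.75, rng=rng)  # ratio is an assumption
visible = patches[~mask]                   # what an encoder would be given
pred = np.zeros_like(patches)              # placeholder for a model's reconstruction
loss = masked_mse(pred, patches, mask)
```

In this sketch only `visible` would be passed to an encoder, and a decoder would be asked to fill in the rows of `patches` where `mask` is True.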

From mchromiak.github.io

Masked autoencoder (MAE) for visual representation learning. Form the

From www.catalyzex.com

3D Masked Autoencoders with Application to Anomaly Detection in Non

From paperswithcode.com

Global Contrast Masked Autoencoders Are Powerful Pathological

From www.catalyzex.com

Improving Masked Autoencoders by Learning Where to Mask Paper and Code

From www.catalyzex.com

Advancing Volumetric Medical Image Segmentation via Global-Local Masked

From paperswithcode.com

A simple, efficient and scalable contrastive masked autoencoder for

From hub.baai.ac.cn

Daily Frontier AI Term: Masked Image Modeling (BAAI Community)

From laptrinhx.com

Masked Autoencoders Are Scalable Vision Learners LaptrinhX

From velog.io

CAVMAE Contrastive Audio-Visual Masked AutoEncoder (2022.08)

From www.catalyzex.com

Efficient Masked Autoencoders with Self-Consistency Paper and Code

From paperswithcode.com

Masked Autoencoders are Robust Data Augmentors Papers With Code

From www.catalyzex.com

SMAUG Sparse Masked Autoencoder for Efficient Video-Language Pre

From www.catalyzex.com

UniM^2AE Multimodal Masked Autoencoders with Unified 3D

From analyticsindiamag.com

All you need to know about masked autoencoders

From www.catalyzex.com

Joint-Embedding Masked Autoencoder for Self-supervised Learning of

From paperswithcode.com

MultiMAE Multi-modal Multi-task Masked Autoencoders Papers With Code

From www.catalyzex.com

FMAE Frequency-masked Multimodal Autoencoder for Zinc Electrolysis

From medium.com

A Leap Forward in Computer Vision Facebook AI Says Masked Autoencoders

From www.catalyzex.com

Masked Autoencoder for Unsupervised Video Summarization Paper and Code

From zhuanlan.zhihu.com

Masked Autoencoders Are Scalable Vision Learners (Zhihu)

From www.catalyzex.com

Emotic Masked Autoencoder with Attention Fusion for Facial Expression

From www.mdpi.com

Applied Sciences Free Full-Text Multi-View Masked Autoencoder for

From syncedreview.com

A Leap Forward in Computer Vision Facebook AI Says Masked Autoencoders

From www.catalyzex.com

AMAE Adaptation of Pre-Trained Masked Autoencoder for Dual

From paperswithcode.com

Test-Time Training with Masked Autoencoders Papers With Code

From www.catalyzex.com

SocialMAE Social Masked Autoencoder for Multi-person Motion

From www.catalyzex.com

Surface Masked AutoEncoder Self-Supervision for Cortical Imaging Data

From www.catalyzex.com

Unsupervised Anomaly Detection in Medical Images with a Memory

From crossmae.github.io

CrossMAE Rethinking Patch Dependence for Masked Autoencoders

From www.catalyzex.com

Attention-Guided Masked Autoencoders For Learning Image Representations

From www.youtube.com

Masked Autoencoders (MAE) Paper Explained YouTube

From www.catalyzex.com

MAEDFER Efficient Masked Autoencoder for Self-supervised Dynamic

From www.catalyzex.com

RARE Robust Masked Graph Autoencoder Paper and Code CatalyzeX

From www.catalyzex.com

Rethinking Vision Transformer and Masked Autoencoder in Multimodal Face