Java HashMap Max Size

The default initial capacity of a HashMap is 16, and its default load factor is 0.75f. The initial capacity is the capacity (number of buckets) at the time the map is created. To keep a HashMap working well, it changes size when it gets too full. So how does it decide when to increase the capacity? After each insertion, it checks whether the current load (number of entries divided by number of buckets) is greater than the load factor. Let us take an example. Since the default initial capacity is 16, consider that we have 16 buckets right now. When we insert the first element, the current load is 1/16 = 0.0625. Is 0.0625 > 0.75? No, so the map is not resized. The check first succeeds on the 13th insertion, because 13/16 = 0.8125 > 0.75. Equivalently, the size has exceeded the threshold 16 * 0.75 = 12.
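The threshold arithmetic above can be checked with a small standalone model. This is a sketch, not HashMap's source code: `LoadFactorDemo` and `resizePoints` are hypothetical names, and the model assumes the defaults above and that the capacity doubles on every resize.

```java
import java.util.ArrayList;
import java.util.List;

// A simplified model of HashMap's grow-on-threshold rule. It does not read
// java.util.HashMap internals; it only reproduces the arithmetic.
public class LoadFactorDemo {

    // Returns the entry counts at which a resize would be triggered when
    // `entries` keys are inserted one by one into an initially empty map.
    static List<Integer> resizePoints(int initialCapacity, float loadFactor, int entries) {
        List<Integer> points = new ArrayList<>();
        int capacity = initialCapacity;
        int threshold = (int) (capacity * loadFactor); // 16 * 0.75 = 12
        for (int size = 1; size <= entries; size++) {
            // size > threshold is equivalent to size / capacity > loadFactor here
            if (size > threshold) {
                points.add(size);
                capacity *= 2; // assumed doubling: 16 -> 32 -> 64 ...
                threshold = (int) (capacity * loadFactor);
            }
        }
        return points;
    }

    public static void main(String[] args) {
        int capacity = 16;
        float loadFactor = 0.75f;
        float afterFirstInsert = 1f / capacity; // 1/16
        System.out.printf("load after first insert: %.4f (> %.2f? %b)%n",
                afterFirstInsert, loadFactor, afterFirstInsert > loadFactor);
        System.out.println("resizes at entries: " + resizePoints(capacity, loadFactor, 25));
    }
}
```

Running `main` reports a load of 0.0625 after the first insert and resizes at entries 13 and 25 (thresholds 12 and 24).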

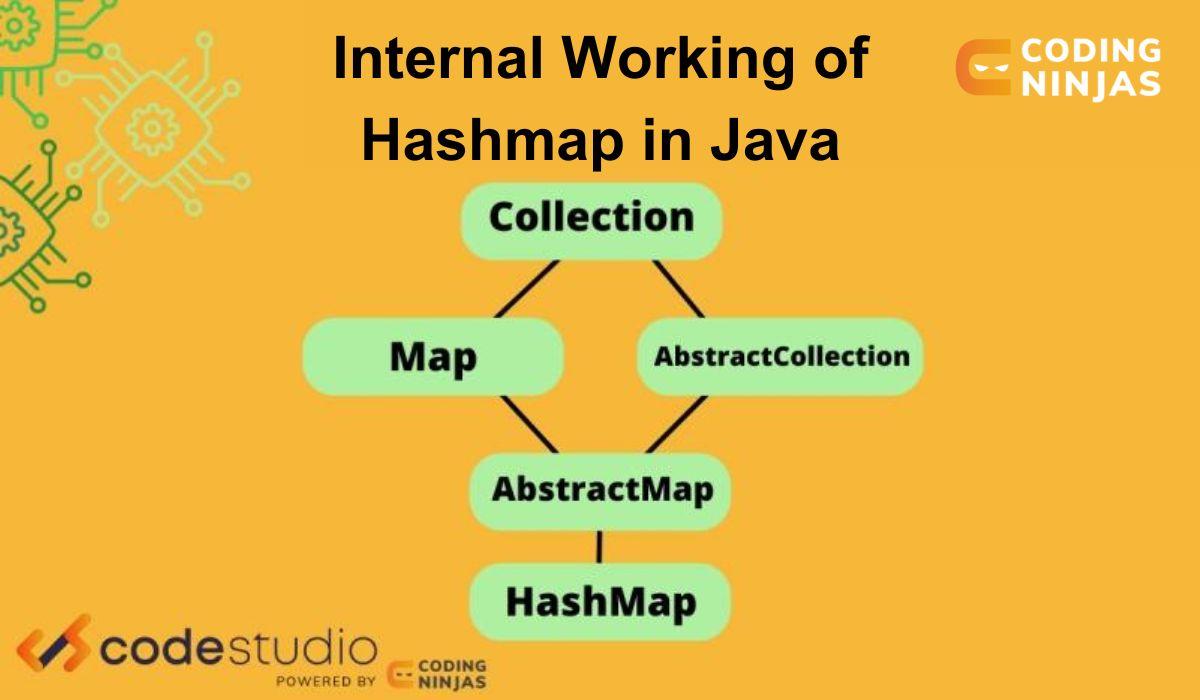

from www.codingninjas.com

When the threshold is crossed, the HashMap doubles its capacity (16 to 32, then 64, and so on) and moves every existing entry into the new table. This means it recalculates the bucket index of every entry, because an entry's index depends on both its hash and the number of buckets, so the single insert that triggers a resize costs time proportional to the whole map. Capacity matters for iteration as well: iteration over the collection views (keySet(), values(), entrySet()) requires time proportional to the capacity of the HashMap instance (the number of buckets) plus its size (the number of key-value mappings). Setting the initial capacity very high, or the load factor very low, therefore makes iteration slower.
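To see why a resize has to move entries, the sketch below computes bucket indexes the way OpenJDK's HashMap does for its power-of-two tables: it mixes the key's `hashCode()` with its own upper 16 bits, then masks with `capacity - 1`. `RehashDemo`, `spread` and `indexFor` are hypothetical names; the sketch assumes non-null keys and reproduces only the index arithmetic, not the real table.

```java
// Shows how the same key can land in a different bucket after the capacity doubles.
public class RehashDemo {

    // Mirrors the hash-spreading step used by OpenJDK's HashMap (non-null keys only).
    static int spread(Object key) {
        int h = key.hashCode();
        return h ^ (h >>> 16);
    }

    // For a power-of-two capacity, masking keeps the low bits of the hash.
    static int indexFor(int hash, int capacity) {
        return hash & (capacity - 1);
    }

    public static void main(String[] args) {
        String[] keys = {"apple", "banana", "cherry", "date", "elderberry"};
        for (String key : keys) {
            int h = spread(key);
            int oldIndex = indexFor(h, 16); // before the resize
            int newIndex = indexFor(h, 32); // after doubling to 32 buckets
            System.out.printf("%-10s bucket %2d -> %2d%n", key, oldIndex, newIndex);
        }
    }
}
```

Because a 32-bucket mask exposes one more hash bit than a 16-bucket mask, each entry either keeps its old index or moves to the old index + 16. OpenJDK's resize uses this to split each old bucket in two from the stored hash, without calling `hashCode()` again.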

Internal Working of HashMap in Java Coding Ninjas

The java.util.HashMap.size() method returns the size of the map, which refers to the number of key-value mappings it contains, not the number of buckets. HashMap offers no setting for a maximum number of entries. In OpenJDK the bucket table stops growing at 2^30 buckets; after that the map keeps accepting entries but stops resizing, so the buckets simply become more crowded. A typical question runs: "I want to limit the maximum size of a HashMap to take metrics on a variety of hashing algorithms that I'm implementing." HashMap will not do this for you; however, you can implement your own limit on top of the standard collections.
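One way to implement such a limit is `LinkedHashMap`, whose protected `removeEldestEntry(Map.Entry)` hook is called after each insertion; returning `true` evicts the oldest entry. This is a sketch under an assumption: it evicts old entries rather than rejecting new ones once the cap is reached, which may not suit every measurement. `BoundedMapDemo` and `boundedMap` are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Caps the number of entries by evicting the oldest insertion once the limit is exceeded.
public class BoundedMapDemo {

    static <K, V> Map<K, V> boundedMap(int maxEntries) {
        return new LinkedHashMap<K, V>() {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                // Called after each put; size() already includes the new entry.
                return size() > maxEntries;
            }
        };
    }

    public static void main(String[] args) {
        Map<String, Integer> ages = boundedMap(3);
        ages.put("Ann", 31);
        ages.put("Bob", 42);
        ages.put("Cid", 27);
        System.out.println(ages.size());   // 3
        ages.put("Dee", 19);               // evicts "Ann", the oldest insertion
        System.out.println(ages.size());   // still 3
        System.out.println(ages.keySet()); // [Bob, Cid, Dee]
    }
}
```

If new keys should be rejected instead, the size check would go in a wrapper around `put()` rather than in `removeEldestEntry`.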
