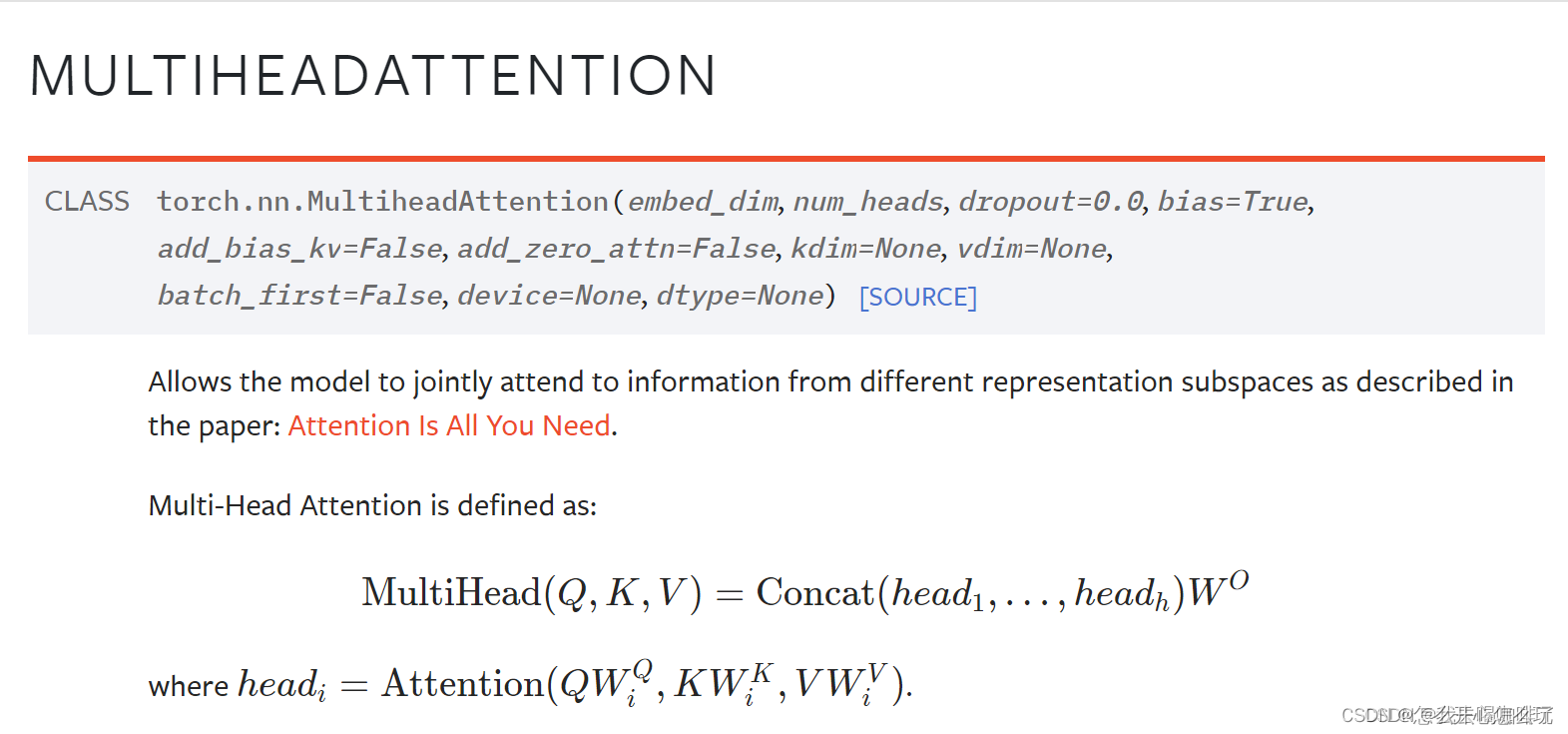

torch.nn.MultiheadAttention on GitHub. The class signature begins `torch.nn.MultiheadAttention(embed_dim, num_heads, dropout=0.0, bias=True, ...)`. For transformers, gradient clipping can help to further stabilize training during the first few iterations, and also afterward. A recurring user report reads: "I want to use PyTorch's nn.MultiheadAttention but it doesn't work."
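A minimal sketch of the two points above: calling `nn.MultiheadAttention` for self-attention, then clipping gradients. The tensor sizes, the toy loss, and `max_norm = 1.0` are illustrative assumptions, not values from any of the linked pages; `batch_first=True` is also a choice made here for readability.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Illustrative sizes (assumptions); embed_dim must be divisible by num_heads.
embed_dim, num_heads = 16, 4
mha = nn.MultiheadAttention(embed_dim, num_heads, dropout=0.0, bias=True,
                            batch_first=True)

# Input laid out as (batch, sequence, embedding) because batch_first=True.
x = torch.randn(2, 5, embed_dim)

# Self-attention: query, key and value are the same tensor.
attn_output, attn_weights = mha(x, x, x)

# Toy loss, used only to produce gradients for the clipping step.
loss = attn_output.pow(2).mean()
loss.backward()

# Rescale gradients in place so their global L2 norm is at most max_norm.
max_norm = 1.0  # hypothetical threshold
norm_before = torch.nn.utils.clip_grad_norm_(mha.parameters(), max_norm=max_norm)

# Recompute the global norm to confirm the clipping took effect.
norm_after = torch.sqrt(sum(p.grad.pow(2).sum() for p in mha.parameters()))
```

By default the returned attention weights are averaged over heads, so `attn_weights` here has shape `(batch, target_len, source_len)`. In a training loop the clipping call would sit between `loss.backward()` and `optimizer.step()`.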

From blog.csdn.net

Usage and parameter analysis of torch.nn.MultiheadAttention (CSDN blog)

From github.com

Runtime Error raised by `torch.nn.modules.activation.MultiheadAttention`

From github.com

🐛 [Bug] Softmax in nn.MultiheadAttention layer not fused with torch

From github.com

module 'torch.nn' has no attribute 'MultiheadAttention' · Issue 13

From github.com

About nn.Multiheadattention Implementation · Issue 49296 · pytorch

From github.com

torch.nn.MultiheadAttention module support in Smoothquant · Issue 960

From github.com

torch.nn.MultiheadAttention key_padding_mask and is_causal breaks

From blog.csdn.net

Torch paper reproduction: Vision Transformer (ViT) (CSDN blog)

From github.com

format issue in document of torch.nn.MultiheadAttention · Issue 50919

From github.com

torch.jit.script failed to compile nn.MultiheadAttention when

From github.com

[Feature request] Query padding mask for nn.MultiheadAttention · Issue

From github.com

Can't convert nn.multiheadAttetion(q,k,v) to Onnx when key isn't equal

From www.youtube.com

visualizing nn.MultiheadAttention computation graph through torchviz

From github.com

Trying to understand nn.MultiheadAttention coming from Keras · Issue

From github.com

Add rotary embeddings to MultiHeadAttention. · Issue 97899 · pytorch

From github.com

Typesetting in torch.nn.MultiheadAttention · Issue 74147 · pytorch

From github.com

How to get nn.MultiheadAttention mid layer output · Issue 100293

From blog.csdn.net

[PyTorch] nn.MultiheadAttention explained in detail (CSDN blog)

From github.com

nn.MultiHeadAttention should be able to return attention weights for

From github.com

SharedQK transformer for the transformer (nn.activation

From github.com

C++ API `torch::nn::MultiheadAttention` Crashes by division by zero

From github.com

nn.MultiheadAttention causes gradients to NaN under some use

From blog.csdn.net

[Transform (3)] [Practice] Using PyTorch's torch.nn.MultiheadAttention to implement self

From www.youtube.com

Self Attention with torch.nn.MultiheadAttention Module YouTube

From github.com

Functional version of `MultiheadAttention`, `torch.nn.functional.multi

From blog.csdn.net

Build your own Transformer from scratch with PyTorch: building a Transformer model step by step in PyTorch (tutorial with source code

From github.com

GitHub KyanChen/MakeMultiHeadNaive Use naive MultiheadAttention

From github.com

Pruning `torch.nn.MultiheadAttention` causes RuntimeError · Issue

From github.com

`attn_mask` in nn.MultiheadAttention is additive · Issue 21518

From github.com

Why do not use 'torch.nn.MultiheadAttention' to substitude 'Class

From yololife-sy.medium.com

[NLP: Transformer] Multihead Attention 2 by Sooyeon, Lee (Medium)

From github.com

Checking the dimensions of query, key, value in nn.MultiheadAttention

From github.com

Using replicating nn.MultiHeadAttention with multiple performer
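
One of the issue titles listed on this page notes that `attn_mask` in `nn.MultiheadAttention` is additive. The sketch below illustrates what that means in practice, under illustrative sizes chosen here: a float mask is added to the attention scores, so a mask holding `-inf` at blocked positions should behave like the equivalent boolean mask (where `True` marks a position that may not be attended to). This is a small demonstration, not a reproduction of the linked issue.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Illustrative sizes (assumptions, not taken from the linked issue).
embed_dim, num_heads, seq_len = 8, 2, 4
mha = nn.MultiheadAttention(embed_dim, num_heads, batch_first=True)

x = torch.randn(1, seq_len, embed_dim)

# Boolean causal mask: True above the diagonal blocks attention to future positions.
bool_mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)

# Additive float mask: 0 where attention is allowed, -inf where it is blocked.
float_mask = torch.zeros(seq_len, seq_len).masked_fill(bool_mask, float("-inf"))

with torch.no_grad():
    out_bool, w_bool = mha(x, x, x, attn_mask=bool_mask)
    out_float, w_float = mha(x, x, x, attn_mask=float_mask)

# Both mask forms should yield the same output and zero weight on blocked positions.
same_output = torch.allclose(out_bool, out_float, atol=1e-6)
blocked_weight = w_float[0][bool_mask].abs().max().item()
```

A float mask with finite values would instead bias the scores rather than block positions outright, which is the practical consequence of the additive behaviour.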