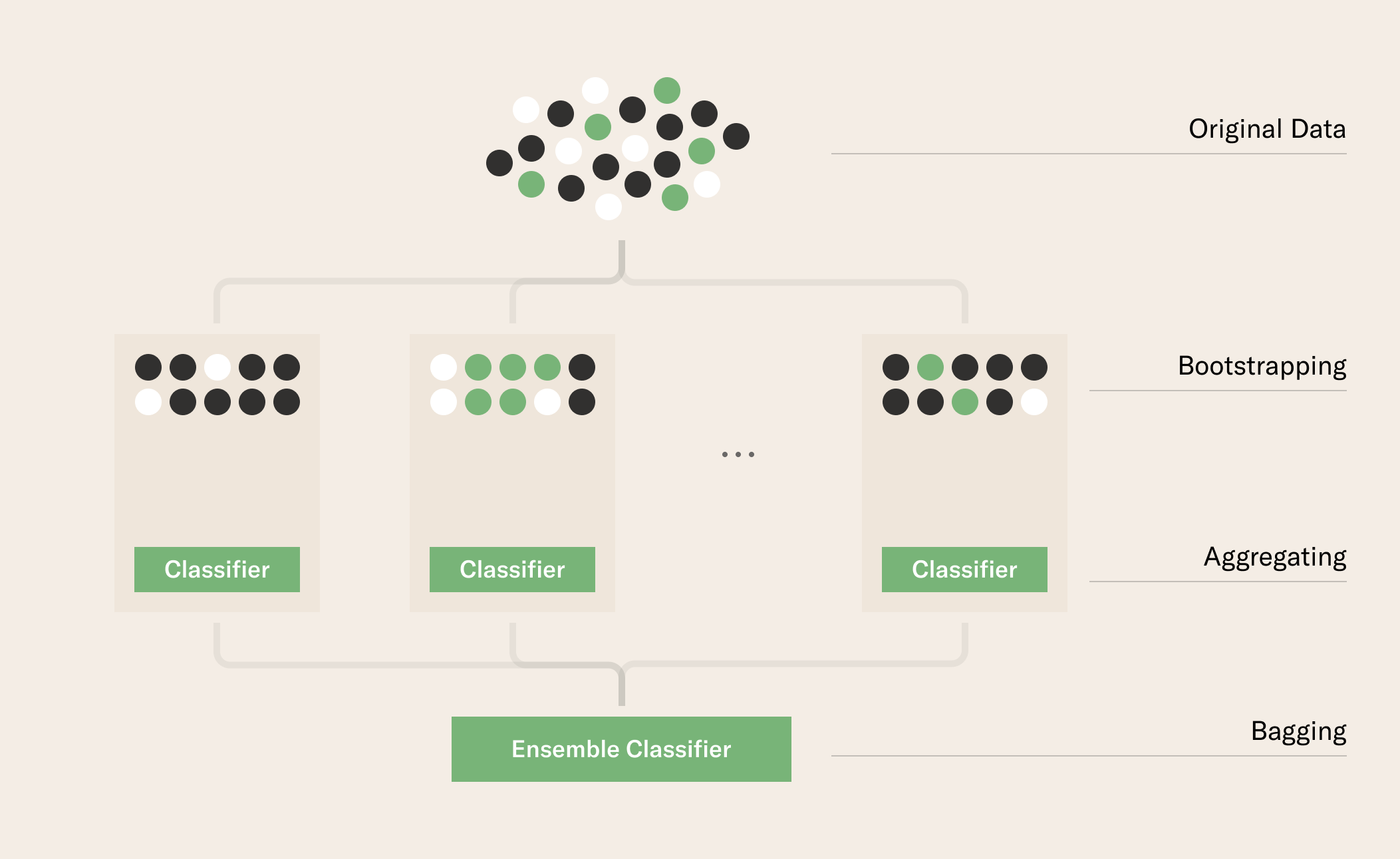

Bootstrapping vs. Bagging. Bootstrapping methods are used to gain an understanding of the probability distribution of a statistic rather than taking a single estimate at face value. The underlying principle of bootstrapping is resampling a dataset with replacement. An ensemble method is a technique that combines the predictions from multiple machine learning models to make predictions that are typically more accurate than those of any individual model. Bootstrap aggregating (also called bootstrap aggregation, and better known as bagging) is a simple, powerful, and widely implemented ensemble method: each model is trained on its own bootstrap sample of the training data, and the models' predictions are then combined. It decreases variance and helps to avoid overfitting, and it is usually applied to decision tree methods.
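As a rough illustration of how the two ideas relate, the sketch below first bootstraps the mean of a small dataset to see how much it varies, then reuses the same resampling step to bag a set of one-split decision trees ("stumps"). It is a minimal sketch using only the Python standard library. The function names, the toy data, the stump learner, and the percentile interval are all assumptions made for illustration, not taken from any of the sources listed on this page.

```python
import random
import statistics


def bootstrap_sample(data, rng):
    """Draw len(data) items from data, sampling with replacement."""
    return rng.choices(data, k=len(data))


def bootstrap_distribution(data, statistic, n_resamples, rng):
    """Bootstrapping: recompute a statistic on many resampled datasets."""
    return [statistic(bootstrap_sample(data, rng)) for _ in range(n_resamples)]


def percentile_interval(values, lower=0.025, upper=0.975):
    """Simple percentile interval read off the sorted bootstrap statistics."""
    ordered = sorted(values)
    last = len(ordered) - 1
    return ordered[int(lower * last)], ordered[int(upper * last)]


def fit_stump(points):
    """Fit a one-split decision tree on (x, label) pairs with labels 0 or 1."""
    best = None
    for threshold, _ in points:
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != y for x, y in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def bagging_fit(points, n_models, rng):
    """Bagging: train one model per bootstrap sample of the training set."""
    return [fit_stump(bootstrap_sample(points, rng)) for _ in range(n_models)]


def bagging_predict(models, x):
    """Aggregate the individual models by majority vote."""
    votes = sum(model(x) for model in models)
    return 1 if votes * 2 > len(models) else 0


rng = random.Random(0)  # fixed seed so the run is repeatable

# Bootstrapping: how uncertain is the sample mean of this toy dataset?
data = [2.1, 2.5, 3.0, 3.2, 3.8, 4.1, 4.4, 5.0, 5.3, 6.7]
means = bootstrap_distribution(data, statistics.mean, 1000, rng)
low, high = percentile_interval(means)
print(f"sample mean: {statistics.mean(data):.2f}")
print(f"approximate 95% interval for the mean: [{low:.2f}, {high:.2f}]")

# Bagging: the same resampling step, now feeding an ensemble of stumps.
points = [(x, 0) for x in (1.0, 1.5, 2.0, 2.5, 3.0)]
points += [(x, 1) for x in (6.0, 6.5, 7.0, 7.5, 8.0)]
models = bagging_fit(points, 25, rng)
print(bagging_predict(models, 0.5), bagging_predict(models, 7.8))
```

In this sketch, bootstrapping ends with a distribution of a statistic, while bagging ends with an aggregated prediction. The resampling function is shared between them, which is why bagging is described as bootstrap aggregation.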

From 3tdesign.edu.vn

Update more than 110 difference between bagging and bootstrapping

From www.youtube.com

40 Bagging(Bootstrap AGGregating) Ensemble Learning Machine

From www.youtube.com

Bagging BootStrap aggreggation machine learning شرح عربي YouTube

From kidsdream.edu.vn

Top more than 121 bootstrapping and bagging best kidsdream.edu.vn

From www.youtube.com

Bootstrapping, Bagging and Random Forests YouTube

From www.youtube.com

Ensemble Learning, Bootstrap Aggregating (Bagging) and Boosting YouTube

From aiml.com

What is Bagging? How do you perform bagging and what are its advantages

From www.youtube.com

Bagging/Bootstrap Aggregating in Machine Learning with examples YouTube

From www.simplilearn.com

What is Bagging in Machine Learning And How to Perform Bagging

From pianalytix.com

Bootstrapping And Bagging Pianalytix Build RealWorld Tech Projects

From dataaspirant.com

Bagging ensemble method

From towardsdatascience.com

Ensemble Learning Bagging & Boosting by Fernando López Towards

From shandrabarrows.blogspot.com

bagging predictors. machine learning Shandra Barrows

From pub.towardsai.net

Bagging vs. Boosting The Power of Ensemble Methods in Machine Learning

From www.youtube.com

14_10 Bootstrap aggregating or bagging YouTube

From www.youtube.com

Blending and Bagging Bagging (Bootstrap Aggregation) Machine

From www.scribd.com

What Is Ensemble Method? Bagging, or Bootstrap Aggregating. Bagging

From www.youtube.com

Bagging Bootstrap Aggregation Random Forest Ensemble Lesson 97

From thecontentauthority.com

Bootstrapping vs Bagging Differences And Uses For Each One

From www.researchgate.net

Bootstrap aggregation, or the Bagging technique (Lan 2017) Download

From medium.com

Bagging Machine Learning through visuals. 1 What is “Bagging

From www.pluralsight.com

Ensemble Methods in Machine Learning Bagging Versus Boosting Pluralsight

From gaussian37.github.io

Overview Bagging gaussian37

From www.youtube.com

Tutorial 42 Ensemble What is Bagging (Bootstrap Aggregation)? YouTube

From medium.com

Bootstrapped Aggregation(Bagging) by Hema Anusha Medium

From blog.knoldus.com

Introduction to Ensemble Learning Knoldus Blogs

From hudsonthames.org

Bagging in Financial Machine Learning Sequential Bootstrapping. Python

From medium.com

Bootstrap, Bagging and Boosting. Bootstrap A resampling method for

From wealthfit.com

How to Successfully Bootstrap Your Startup [Entrepreneurship] WealthFit

From dataaspirant.com

Ensemble Methods Bagging Vs Boosting Difference

From www.analyticsvidhya.com

Ensemble Learning Methods Bagging, Boosting and Stacking

From www.youtube.com

Ensemble Learning Techniques in Machine Learning Bootstrap