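Robots.txt Example: Disallow All

How do you disallow all crawling using robots.txt, and when would you want to? Blocking every crawler is useful for several reasons. The most common is that the site is not ready yet. In this chapter we'll cover a range of robots.txt file examples:

- Disallow all robots access to everything;
- Allow all robots access to everything;
- Block a specific web crawler from a specific folder or page.

The first template stops all bots from crawling your site. If you want to instruct all robots to stay away from your site, put a `User-agent: *` line in robots.txt followed by `Disallow: /`. The `*` user agent addresses every crawler, and because rules match URL paths by prefix, the `/` path covers every URL on the host, including the homepage.

The second template does the opposite. A `User-agent: *` line followed by an empty `Disallow:` line tells web crawlers to crawl all pages on www.example.com, including the homepage. You can use this as your default robots.txt.

To block a specific web crawler from a specific folder, name that crawler in the `User-agent` line and put the folder path in the `Disallow` line. Paths can also contain wildcards: in a rule such as `/second_url/*`, the star matches any sequence of characters, so the rule applies to everything under `/second_url/`.

Keep in mind that robots.txt is a public document and that compliance with its directives is voluntary: well-behaved crawlers respect it, but nothing forces a bot to. So if you have files or directories that you want to keep hidden from the public, never simply list them all in robots.txt. Anyone can open the file, and listing them there only advertises where they are.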
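To check how these templates behave before deploying one, you can feed them to a robots.txt parser. The sketch below uses Python's standard-library `urllib.robotparser`. The crawler name `AnyBot`, the folder `/example-subfolder/` and the page URL are illustrative assumptions, not values any site requires. One caveat: this parser does plain prefix matching and treats `*` inside a path literally, so it is not suitable for testing wildcard rules such as `/second_url/*`.

```python
from urllib.robotparser import RobotFileParser

# Template 1: disallow all robots access to everything.
DISALLOW_ALL = """\
User-agent: *
Disallow: /
"""

# Template 2: allow all robots access to everything.
# An empty Disallow value means "nothing is disallowed".
ALLOW_ALL = """\
User-agent: *
Disallow:
"""

# Template 3: block one crawler from one folder.
# "Googlebot" and "/example-subfolder/" are illustrative; use your own values.
BLOCK_ONE_CRAWLER = """\
User-agent: Googlebot
Disallow: /example-subfolder/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Hypothetical URLs on the placeholder domain www.example.com.
HOME = "https://www.example.com/"
PAGE = "https://www.example.com/example-subfolder/page.html"

# "AnyBot" is a made-up user agent standing in for any crawler.
print(build_parser(DISALLOW_ALL).can_fetch("AnyBot", HOME))          # False
print(build_parser(ALLOW_ALL).can_fetch("AnyBot", HOME))             # True
print(build_parser(BLOCK_ONE_CRAWLER).can_fetch("Googlebot", PAGE))  # False
print(build_parser(BLOCK_ONE_CRAWLER).can_fetch("Bingbot", PAGE))    # True
```

Each call parses the text in memory, so nothing is fetched over the network. Against a live site you would call `set_url()` and `read()` on the parser instead. The last line shows that a group naming only `Googlebot` leaves other crawlers, such as Bingbot, unrestricted.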


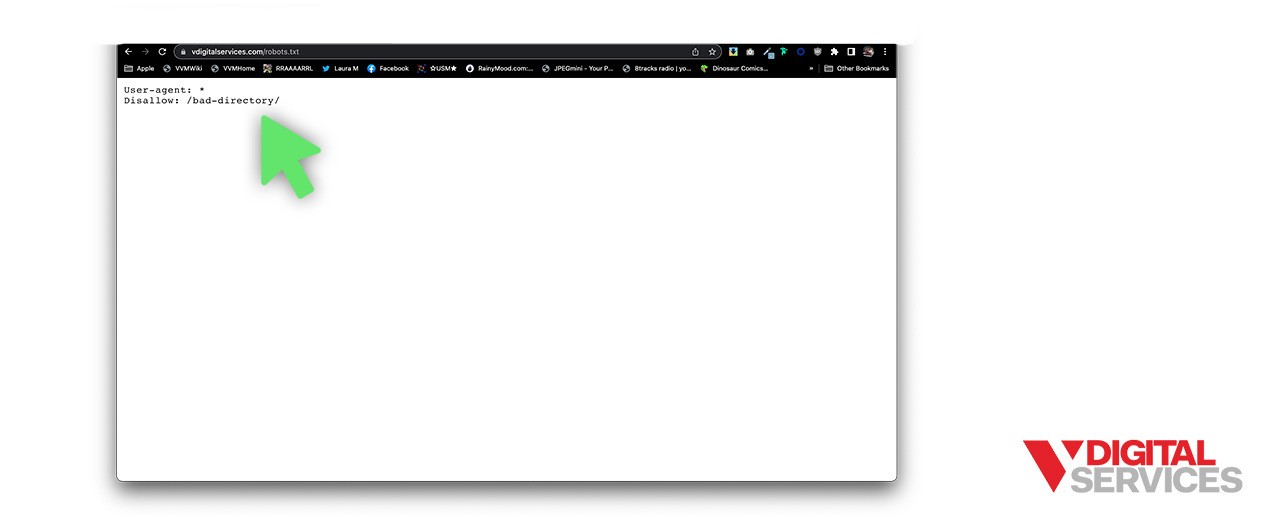

Image: Using Robots.txt to Disallow All or Allow All, How to Guide (www.vdigitalservices.com)
