Python Logging In Databricks.

What is the best practice for logging in Databricks notebooks? I have a repo that has Python files that use the built-in logging module. Additionally, in some of the notebooks of the repo I want to use… I have a bunch of notebooks that run in parallel through a… I want to add custom logs that are redirected to the Spark driver logs. Can I use the existing logger classes to have my application logs or progress messages appear in the Spark driver logs?

Let's try the standard use of Python's logging module. Well, we can take a look at the root logger we returned. By default it is returned with the level set to…

The Databricks SDK for Python seamlessly integrates with the standard logging facility for Python. This allows developers to easily enable… The Databricks SQL Connector for Python allows you to use Python code to run SQL commands on Databricks resources.
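As a rough illustration of the "standard use of Python's logging module" mentioned above, here is a minimal sketch using only the standard library. The logger name `my_app` and the helper `get_notebook_logger` are hypothetical, chosen for illustration. The sketch assumes that text written to stderr on the driver ends up in the driver's standard error log, and that the Databricks SDK logs under the `databricks.sdk` logger name; both are worth verifying in your own workspace.

```python
import logging
import sys


def get_notebook_logger(name: str = "my_app", level: int = logging.INFO) -> logging.Logger:
    """Return a named logger that writes to stderr, adding its handler only once."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    # Notebook cells are often re-run; guard against stacking duplicate handlers.
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stderr)
        handler.setFormatter(
            logging.Formatter("%(asctime)s [%(name)s][%(levelname)s] %(message)s")
        )
        logger.addHandler(handler)
    # Avoid emitting each record a second time through handlers on the root logger.
    logger.propagate = False
    return logger


logger = get_notebook_logger()
logger.info("Starting job")

# Inspect the root logger; its level depends on how the environment configured it.
root = logging.getLogger()
print(logging.getLevelName(root.level))

# Assumption: the Databricks SDK emits its records under the "databricks.sdk" logger.
logging.getLogger("databricks.sdk").setLevel(logging.DEBUG)
```

Using a named logger rather than the root logger keeps application messages separate from whatever the platform has already attached to the root, and the handler guard makes the cell safe to run repeatedly.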

from datainsights.de


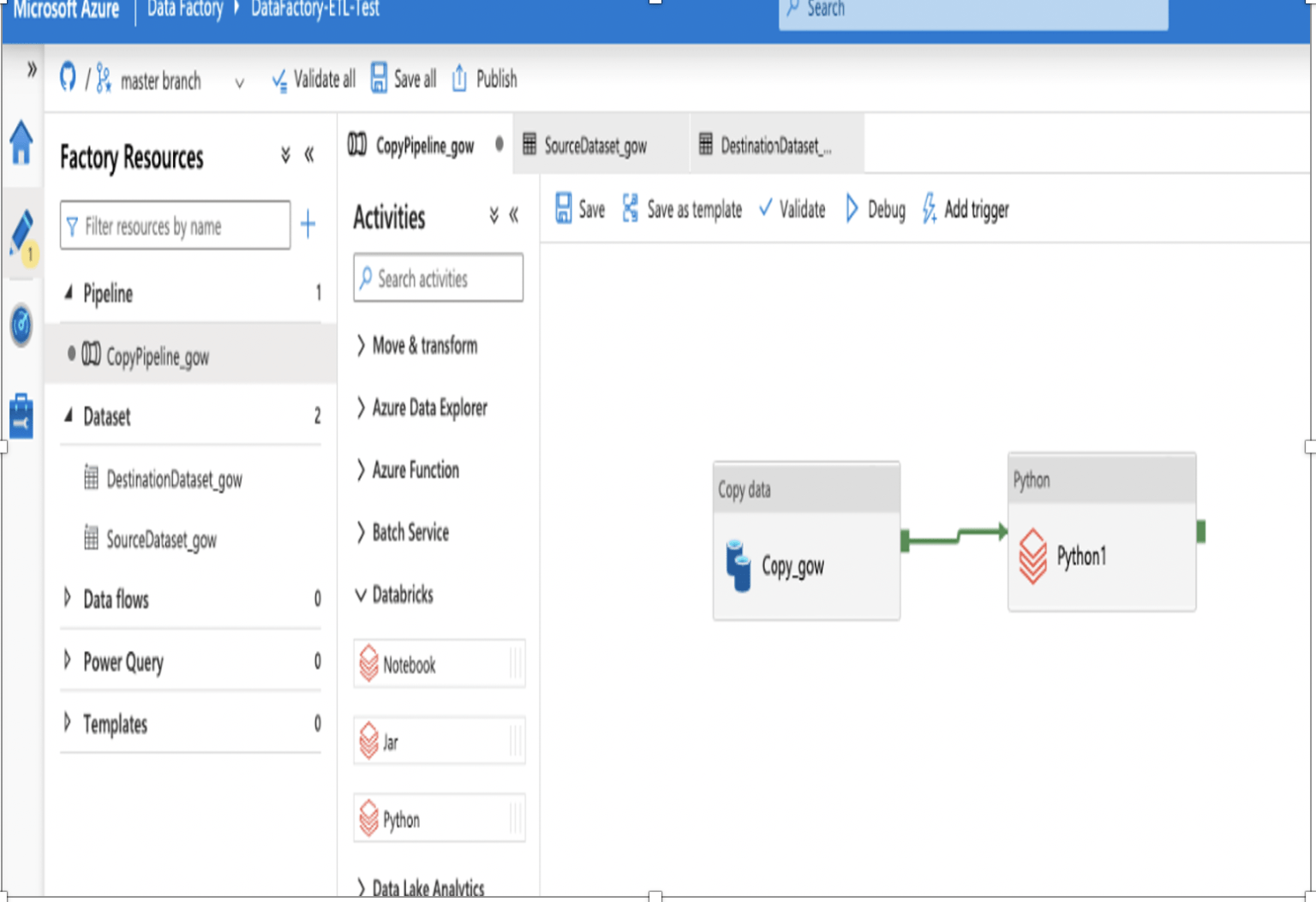

ETL using Databricks Python Activity in Azure Data Factory synvert


From sematext.com

Python Logging Basics HowTo Tutorial, Examples & More Sematext

From docs.databricks.com

Databricks SDK for Python Databricks on AWS

From blog.sentry.io

Logging in Python A Developer’s Guide Product Blog • Sentry

From pythongeeks.org

Logging in Python Python Geeks

From thepythoncode.com

Logging in Python The Python Code

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From www.youtube.com

COLOURED logging in Python YouTube

From hevodata.com

Databricks Python The Ultimate Guide Simplified 101

From www.youtube.com

Databricks Notebook Development Overview YouTube

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From datagy.io

Python Natural Log Calculate ln in Python • datagy

From menziess.github.io

Install Python Packages on Databricks menziess blog

From grabngoinfo.com

Databricks Widgets in Python Notebook Grab N Go Info

From quadexcel.com

Azure Databricks using Python with PySpark

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From github.com

GitHub srtamrakar/pythonlogger Convenient wrapper for logging

From learn.microsoft.com

Run a Databricks Notebook with the activity Azure Data Factory

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From stackoverflow.com

pyspark Spark Error when running python script on databricks Stack

From stackoverflow.com

Logging in Databricks Python Notebooks Stack Overflow

From stackoverflow.com

sql Databricks Python Optimization Stack Overflow

From medium.com

Better Logging in Databricks. (if you just want the code… by David

From www.youtube.com

How to do logging in Databricks [Python] YouTube

From www.arnondora.in.th

How to Do Logging Like a Pro in Python Arnondora

From www.youtube.com

logging in python with log rotation and compression of rotated logs

From techvidvan.com

Logging in Python TechVidvan

From twitter.com

Daily Databricks on Twitter "⚠Want to log the exact notebook cell that

From community.databricks.com

Solved How do I use the Python Logging Module in a Repo? Databricks

From www.pythonpip.com

How to Use Logging in Python

From www.toptal.com

Python Logging InDepth Tutorial Toptal®

From grabngoinfo.com

Databricks Widgets in Python Notebook Grab N Go Info

From betterstack.com

Logging in Python A Comparison of the Top 6 Libraries Better Stack

From datainsights.de

ETL using Databricks Python Activity in Azure Data Factory synvert

From learn.microsoft.com

Connect to Azure Databricks from Python or R Microsoft Learn