Increase Learning Rate With Batch Size

Anyone learning gradient descent soon finds that learning rate (lr) and batch size both matter. Batch, stochastic, and minibatch gradient descent are the three main flavors of the learning algorithm, and batch size controls the accuracy of the estimate of the error gradient when training neural networks. There is a tension between batch size and the speed and stability of the learning process. The relationship between the two is like a dance: finding the right rhythm and balance is key to a harmonious performance.

The linear scaling rule posits that the learning rate should be adjusted in direct proportion to the batch size. One common intuition is that a larger batch gives a less noisy average gradient, so the learning rate can either stay the same or ... A large batch size works well but the magnitude is typically ... To find the maximum usable learning rate, perform a learning rate range test. An alternative to decaying the learning rate is to increase the batch size during training.
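As a rough illustration of two ideas mentioned above (the linear scaling rule, and growing the batch size instead of decaying the learning rate), here is a minimal Python sketch. The function names, the doubling factor, the 30-epoch step, and the 4096 cap are all assumptions chosen for the example; none of them come from the sources quoted on this page.

```python
def scale_learning_rate(base_lr: float, base_batch_size: int, batch_size: int) -> float:
    """Linear scaling rule: change the learning rate in direct proportion
    to the batch size, relative to a reference (base) configuration."""
    if base_batch_size <= 0 or batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * batch_size / base_batch_size


def batch_size_schedule(initial_batch_size: int, epoch: int,
                        step_epochs: int = 30, factor: int = 2,
                        max_batch_size: int = 4096) -> int:
    """Hypothetical step schedule: multiply the batch size by `factor`
    every `step_epochs` epochs, capped at `max_batch_size`.
    This stands in for a step decay of the learning rate."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    growth = factor ** (epoch // step_epochs)
    return min(initial_batch_size * growth, max_batch_size)


if __name__ == "__main__":
    # Going from batch size 256 to 1024 multiplies the learning rate by 4.
    print(scale_learning_rate(0.1, 256, 1024))
    # Batch size grows at epochs 30, 60, 90 while the learning rate stays fixed.
    for epoch in (0, 30, 60, 90):
        print(epoch, batch_size_schedule(128, epoch))
```

In practice the scaled learning rate is a starting point to validate, for instance with a learning rate range test, rather than a guaranteed optimum.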

from www.pdfprof.com

control batch size and learning rate to generalize well

From machinelearningmastery.com

How to Control the Stability of Training Neural Networks With the Batch

From www.researchgate.net

Training loss reduction curves for different combinations of batch size

From ithelp.ithome.com.tw

[5] Hyperparameters: an experiment on the relationship between batch size and learning rate (iT 邦幫忙)

From www.researchgate.net

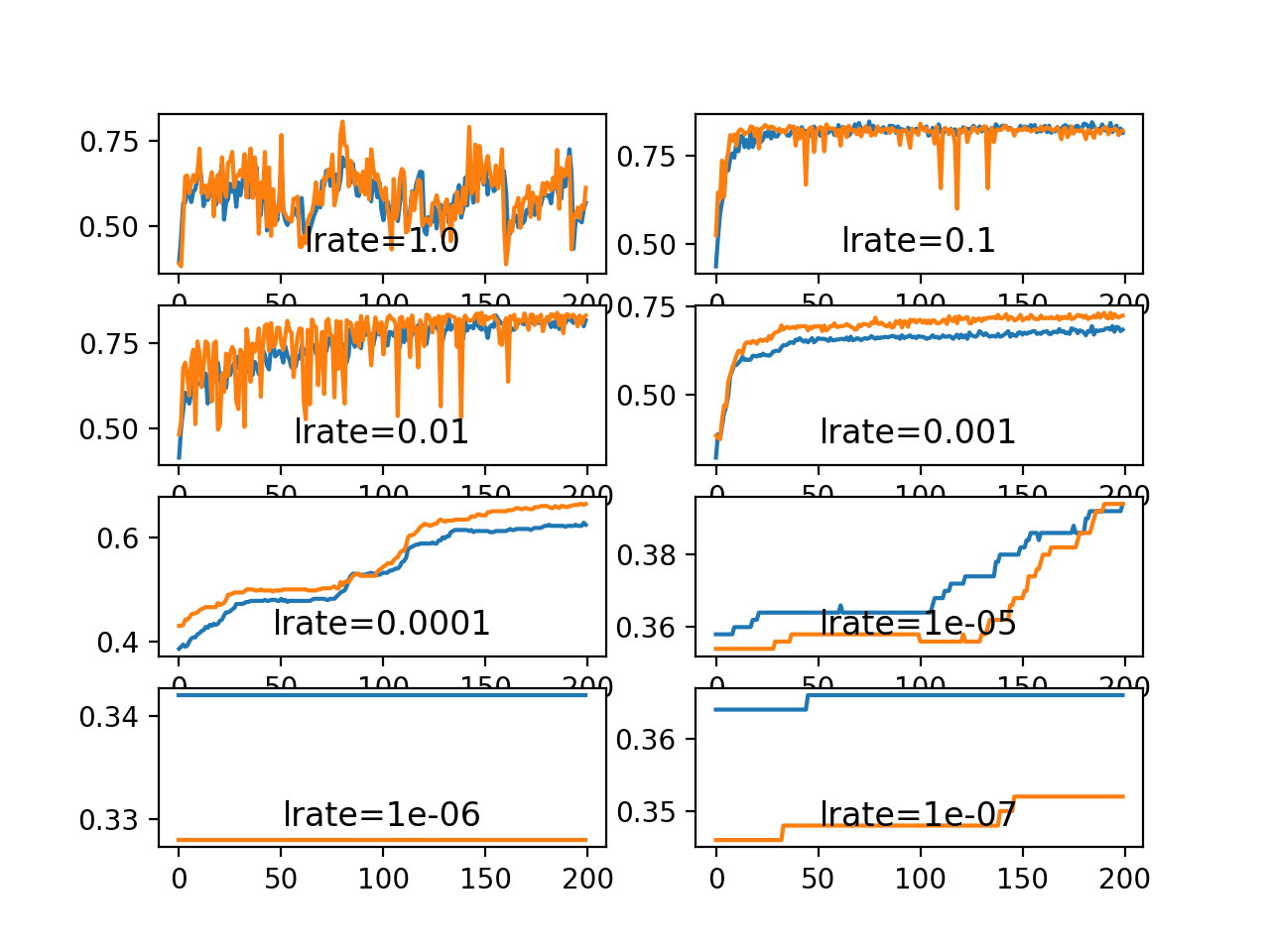

Accuracy according to learning rate, batch size, and epoch

From www.researchgate.net

Comparison of learning rate size and batch size experiment.

From wordpress.cs.vt.edu

Don’t Decay the Learning Rate, Increase the Batch Size (Optimization)

From stats.stackexchange.com

python: What is batch size in neural network? (Cross Validated)

From pdfprof.com

keras batch size and learning rate

From www.pdfprof.com

batch size epoch learning rate

From www.researchgate.net

mAP according to learning rate, batch size, and epoch (Yolov5s model

From yuiga.dev

[Paper notes] Don't Decay the Learning Rate, Increase the Batch Size (行李の底に収め)

From deepai.org

Coupling Adaptive Batch Sizes with Learning Rates (DeepAI)

From www.semanticscholar.org

[PDF] A disciplined approach to neural network hyperparameters Part 1

From www.researchgate.net

Influence of batch size and learning rate.

From www.researchgate.net

Learning rate and batch size used during training.

From www.pdfprof.com

increase batch size instead of learning rate

From zhuanlan.zhihu.com

How to choose the batch size and learning rate for model training (Zhihu)

From deepai.org

Automated Learning Rate Scheduler for Large-batch Training (DeepAI)

From www.youtube.com

AI Basics: Accuracy, Epochs, Learning Rate, Batch Size and Loss (YouTube)

From www.baeldung.com

Relation Between Learning Rate and Batch Size (Baeldung on Computer

From www.pdfprof.com

adam learning rate batch size (PDF)

From onexception.dev

Optimizing Batch Size and Learning Rate for LLM Training: Best Practices

From forums.fast.ai

Relationship between learning rate, batch size, and shuffling (Deep

From www.researchgate.net

Learning Rate curve at Batch size = 32, Optimizer = SGD (a

From ps.is.mpg.de

Coupling Adaptive Batch Sizes with Learning Rates (Perceiving Systems)

From keepcoding.io

Relationship between learning rate and batch size

From www.researchgate.net

RESULTS WITH DIFFERENT BATCH SIZES AND LEARNING RATES. THE BEST

From jihoonerd.github.io

Don't Decay the Learning Rate, Increase the Batch Size (pult)