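Method Stacking Erf

Unlike bagging, stacking trains different models on the same training dataset. Unlike boosting, it uses a single model (called the meta model) to learn how to combine the base models' predictions. In model stacking, we use predictions made on the training data itself to train the meta model. The proper way to obtain these predictions is to divide the training data into folds: each base model is fitted on all but one fold and predicts the held-out fold, so every training example receives a prediction from a model that never saw it. Feeding the meta model in-sample predictions instead would give it overly optimistic inputs.

The error function is defined as $\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\,dt$. While support for it was added first to ISO C99 and subsequently to C++ in the form of the functions erf(), erff(), …, it was until recently […]. One of the simplest ways to compute it numerically is the composite trapezoidal rule: take equally spaced points $0=x_0, x_1, \cdots, x_n = x$ with spacing $h$, and obtain the approximation by summing the areas of the trapezoids over the subintervals.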
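Written out, the composite trapezoidal rule applied to the definition of erf gives

$$\operatorname{erf}(x) \approx \frac{2}{\sqrt{\pi}}\, h \left[ \tfrac{1}{2} e^{-x_0^2} + \sum_{k=1}^{n-1} e^{-x_k^2} + \tfrac{1}{2} e^{-x_n^2} \right], \qquad x_k = k h,\quad h = \frac{x}{n}.$$

A minimal Python sketch of this formula follows. The function name `erf_trapezoid` and the default of `n = 1000` subintervals are illustrative assumptions, not taken from the original text; the result is compared against Python's built-in `math.erf`.

```python
import math


def erf_trapezoid(x, n=1000):
    """Approximate erf(x) = 2/sqrt(pi) * integral from 0 to x of exp(-t^2) dt
    using the composite trapezoidal rule on n equal subintervals.
    (Hypothetical helper for illustration.)"""
    if n < 1:
        raise ValueError("n must be at least 1")
    h = x / n  # spacing between x_0 = 0 and x_n = x (negative when x < 0)

    def f(t):
        return math.exp(-t * t)

    # Endpoints x_0 and x_n get weight 1/2; interior points x_k = k*h get weight 1.
    total = 0.5 * (f(0.0) + f(x))
    for k in range(1, n):
        total += f(k * h)
    return 2.0 / math.sqrt(math.pi) * h * total


# Compare with the library implementation (math.erf, available since Python 3.2).
for x in (0.5, 1.0, 2.0):
    print(f"x={x}: trapezoid={erf_trapezoid(x):.8f}  math.erf={math.erf(x):.8f}")
```

Because the composite trapezoidal rule has an error of order $h^2$, increasing `n` tenfold should shrink the error roughly a hundredfold. Library implementations such as the C99 `erf()` typically rely on carefully tuned approximations rather than plain quadrature.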


From www.researchgate.net

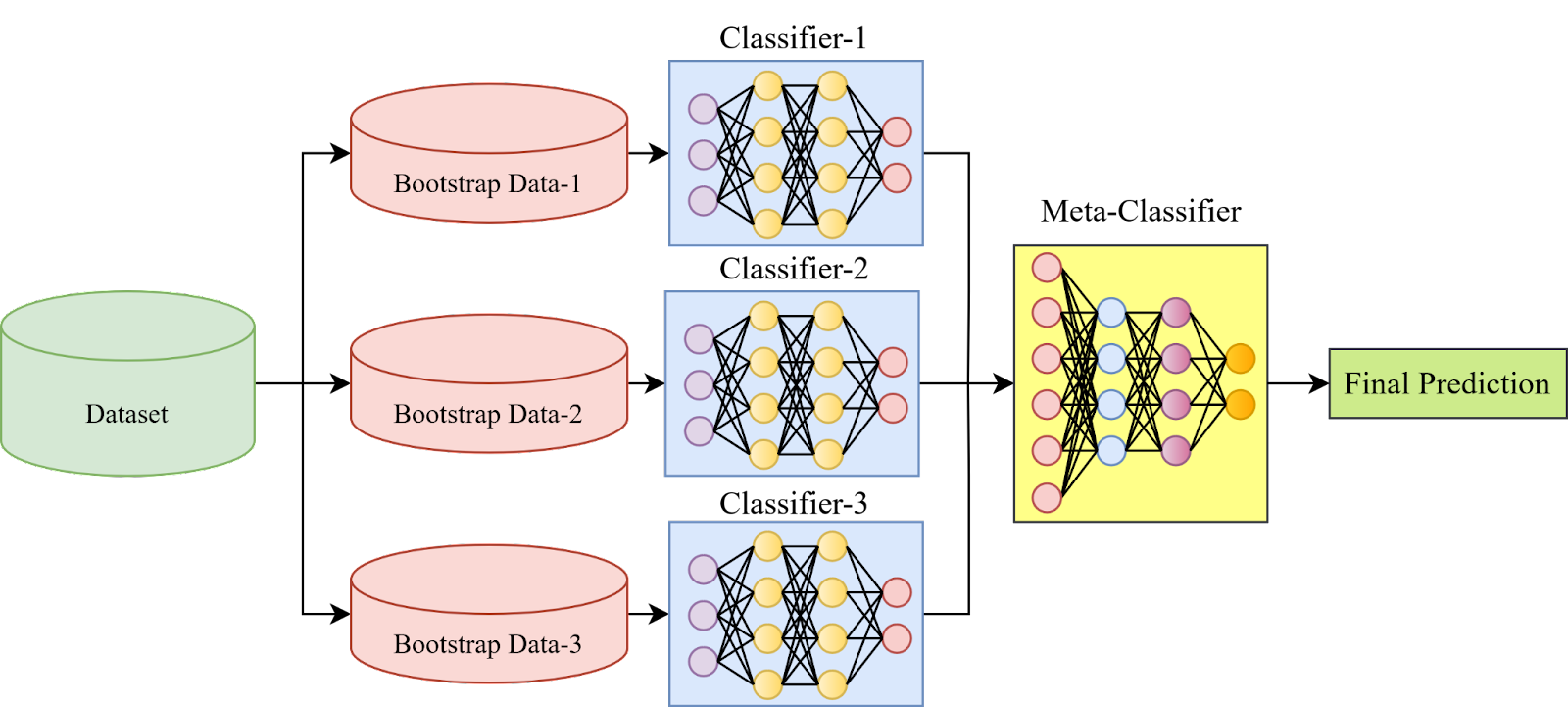

Schematic diagram of stacking model development Download Scientific Method Stacking Erf Unlike boosting, a single model (called. The way to properly include these predictions is by dividing our train data. One of the simplest methods would be the composed trapezoidal rule, where you take equally spaced points $ 0=x_0, x_1, \cdots, x_n = x$, with spacing $h$, and obtain the. While support for it was added first to iso c99 and. Method Stacking Erf.
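The stacking procedure described earlier (different base models trained on the same data, out-of-fold predictions on the training folds feeding a single meta model) can be sketched in plain Python. This is a minimal, hypothetical example, not the method of any source cited on this page: the two base learners (an ordinary least-squares line and a k-nearest-neighbour average), the 5-fold split, the ridge-regularised linear meta model, and all helper names (`fit_line`, `fit_knn`, `out_of_fold_predictions`, `fit_stack`) are illustrative assumptions.

```python
import random

# Hypothetical base learners for 1-D regression; each fit_* returns a predict(x) function.


def fit_line(xs, ys):
    """Base model 1: ordinary least-squares line y = a + b*x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def fit_knn(xs, ys, k=3):
    """Base model 2: average target of the k nearest training points."""
    pts = list(zip(xs, ys))

    def predict(x):
        nearest = sorted(pts, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)

    return predict


BASE_LEARNERS = [fit_line, fit_knn]


def kfold_indices(n, k, seed=0):
    """Shuffle 0..n-1 and split the indices into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def out_of_fold_predictions(xs, ys, k=5):
    """Row i holds each base model's prediction for example i, made by a
    model fitted on the other folds only (never on example i itself)."""
    n = len(xs)
    oof = [[0.0] * len(BASE_LEARNERS) for _ in range(n)]
    for fold in kfold_indices(n, k):
        held_out = set(fold)
        train = [i for i in range(n) if i not in held_out]
        txs = [xs[i] for i in train]
        tys = [ys[i] for i in train]
        for j, fit in enumerate(BASE_LEARNERS):
            model = fit(txs, tys)
            for i in fold:
                oof[i][j] = model(xs[i])
    return oof


def solve(a, b):
    """Solve the small linear system a @ w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_stack(xs, ys, k=5, lam=1e-6):
    """Train the meta model (ridge-regularised linear regression on
    [1, base predictions]) on out-of-fold predictions, then refit the
    base models on all of the training data."""
    feats = [[1.0] + row for row in out_of_fold_predictions(xs, ys, k)]
    d = len(feats[0])
    # Normal equations (F^T F + lam*I) w = F^T y; for simplicity lam also penalises the intercept.
    ftf = [[sum(f[p] * f[q] for f in feats) + (lam if p == q else 0.0)
            for q in range(d)] for p in range(d)]
    fty = [sum(f[p] * y for f, y in zip(feats, ys)) for p in range(d)]
    w = solve(ftf, fty)
    bases = [fit(xs, ys) for fit in BASE_LEARNERS]

    def predict(x):
        return w[0] + sum(wj * model(x) for wj, model in zip(w[1:], bases))

    return predict


# Usage on a toy, noise-free dataset y = 3x + 2.
xs = [float(i) for i in range(20)]
ys = [3.0 * x + 2.0 for x in xs]
predict = fit_stack(xs, ys)
print(predict(10.0))
```

After the meta weights are learned from out-of-fold predictions, this sketch refits the base models on the full training set before prediction; an alternative is to keep the k per-fold models and average their predictions.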