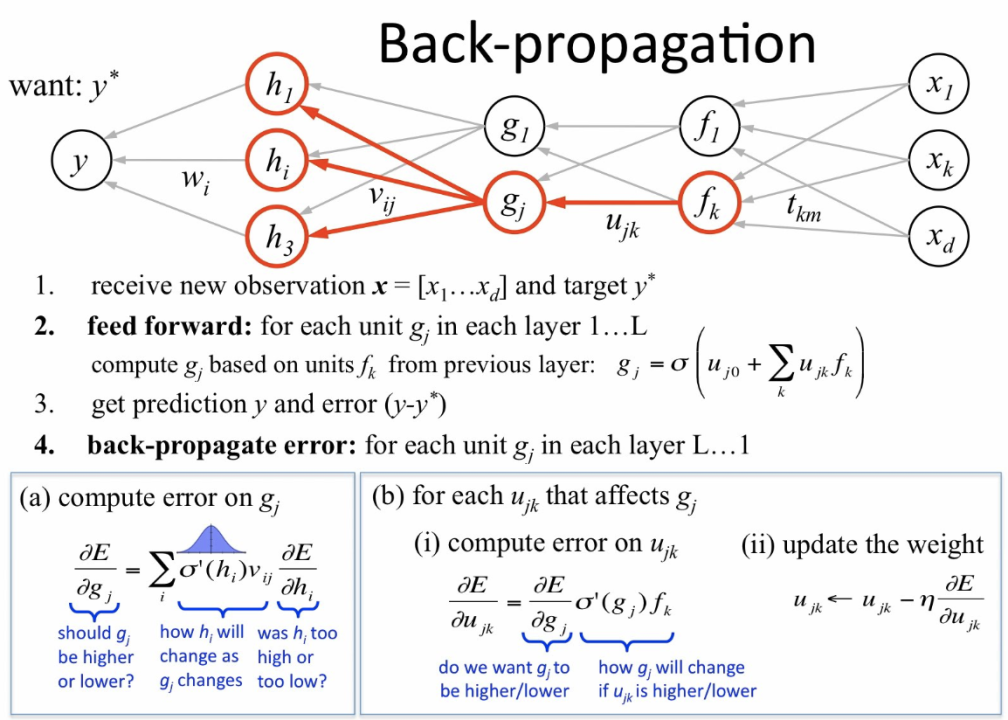

Back Propagation Neural Network Chain Rule. In machine learning, backpropagation ("backprop" for short) is a method for computing gradients that is commonly used to train neural networks: it is a way of computing the partial derivatives of a loss function with respect to the network's parameters. The algorithm trains a network efficiently by applying the chain rule, which determines how a change in each parameter changes the loss. The motivation is that linear classifiers can only draw linear decision boundaries, while the neural nets that go beyond them will be very large, so it is impractical to write down the gradient formula by hand for all parameters. Backpropagation assumes that the structure of the computational graph is known beforehand. The gradient it computes is then used to minimize the cost function.
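To make the chain-rule idea concrete, here is a minimal sketch in plain Python. It assumes a hypothetical network with one input, one tanh hidden unit, one linear output, and a squared-error loss; the function names, parameter values, and the finite-difference check are illustrative choices, not taken from any of the sources listed on this page.

```python
import math

def forward(x, w1, b1, w2, b2):
    """Forward pass of a tiny 1-1-1 network; returns the intermediates."""
    z = w1 * x + b1        # hidden pre-activation
    h = math.tanh(z)       # hidden activation
    y = w2 * h + b2        # network output
    return z, h, y

def loss(x, t, w1, b1, w2, b2):
    """Squared-error loss L = 0.5 * (y - t)^2 for target t."""
    _, _, y = forward(x, w1, b1, w2, b2)
    return 0.5 * (y - t) ** 2

def backward(x, t, w1, b1, w2, b2):
    """Backward pass: apply the chain rule from the loss back to each parameter."""
    z, h, y = forward(x, w1, b1, w2, b2)
    dL_dy = y - t                     # dL/dy
    dL_dw2 = dL_dy * h                # dy/dw2 = h
    dL_db2 = dL_dy                    # dy/db2 = 1
    dL_dh = dL_dy * w2                # dy/dh = w2
    dL_dz = dL_dh * (1.0 - h ** 2)    # dh/dz = 1 - tanh(z)^2
    dL_dw1 = dL_dz * x                # dz/dw1 = x
    dL_db1 = dL_dz                    # dz/db1 = 1
    return dL_dw1, dL_db1, dL_dw2, dL_db2

def numerical_grads(x, t, params, eps=1e-6):
    """Central finite differences, used only to check the chain-rule results."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grads.append((loss(x, t, *up) - loss(x, t, *down)) / (2 * eps))
    return grads

# Arbitrary illustrative values for w1, b1, w2, b2 and one training pair (x, t).
params = [0.5, -0.1, 1.5, 0.2]
x, t = 0.8, 1.0

analytic = backward(x, t, *params)
numeric = numerical_grads(x, t, params)
max_gap = max(abs(a - n) for a, n in zip(analytic, numeric))
print("largest gap between chain-rule and numerical gradients:", max_gap)
```

Each line of `backward` reuses the derivative computed just before it, which is the point of propagating backwards through the graph: the shared factor `dL_dz` is computed once and reused for both `w1` and `b1`. A hand-written backward pass like this does not scale to large networks, which is why the structure of the computational graph is normally used to derive it automatically.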

From www.linkedin.com

Neural network Back propagation

From towardsdatascience.com

Understanding Backpropagation Algorithm by Simeon Kostadinov

From www.hashpi.com

Backpropagation equations applying the chain rule to a neural network

From www.researchgate.net

Multivariable Chain Rule Back Propagation Artificial Neural Network a

From medium.com

BackPropagation is very simple. Who made it Complicated

From stats.stackexchange.com

backpropagation Is my Neural Network chain rule correct? Cross

From www.researchgate.net

Back propagation neural network topology diagram.

From www.datasciencecentral.com

Neural Networks The Backpropagation algorithm in a picture

From rushiblogs.weebly.com

The Journey of Back Propagation in Neural Networks Rushi blogs.

From www.qwertee.io

An introduction to backpropagation

From www.researchgate.net

Structure of back propagation neural network.

From www.researchgate.net

Back propagation neural network configuration

From studyglance.in

Back Propagation NN Tutorial Study Glance

From www.marktechpost.com

Backpropagation in Neural Networks MarkTechPost

From towardsdatascience.com

How Does BackPropagation Work in Neural Networks? by Kiprono Elijah

From www.youtube.com

Back Propagation Neural Network Basic Concepts Neural Networks

From userengineethel.z13.web.core.windows.net

Chain Rule Circuit Diagram Neural Network

From www.jeremyjordan.me

Neural networks training with backpropagation.

From www.researchgate.net

Structural model of the backpropagation neural network [30

From www.youtube.com

Chain rule of differential with backpropagation Deep Learning

From www.geeksforgeeks.org

Backpropagation in Neural Network

From math.stackexchange.com

partial derivative NN Backpropagation Computing \frac{{\rm d}E

From www.researchgate.net

Forward propagation versus backward propagation.

From medium.com

Andrew Ng Coursera Deep Learning Back Propagation explained simply Medium

From www.researchgate.net

The structure of back propagation neural network.

From www.researchgate.net

Back propagation neural network topology structural diagram.

From kevintham.github.io

The Backpropagation Algorithm Kevin Tham

From www.researchgate.net

The architecture of back propagation function neural network diagram

From medium.com

Backpropagation — Algorithm that tells “How A Neural Network Learns

From www.researchgate.net

Feedforward Backpropagation Neural Network architecture.

From www.mql5.com

the chain rule in back propagation from a coder's perspective Neural

From www.researchgate.net

Basic structure of backpropagation neural network.