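Xgb.train Weight

Before running XGBoost, we must set three types of parameters: general parameters, booster parameters and task parameters. Weighting means increasing the contribution of an example to the loss function. In XGBoost, each training instance's gradient and Hessian are multiplied by its weight. When training with `xgboost.train`, per-instance weights are attached to the data through the `weight` argument of `DMatrix(data, label=None, missing=None, weight=None, silent=False, feature_names=None, feature_types=None, ...)`. In the scikit-learn wrapper, the `sample_weight` parameter of `fit` allows you to specify a different weight for each training example. Methods including `update` and `boost` from `xgboost.Booster` are designed for internal usage only. The wrapper function `xgboost.train` does some pre-configuration, including setting up caches and some other parameters. Where the data lives also matters: if the input is a NumPy array on the CPU but CUDA is used for training, the data is first processed on the CPU and then transferred to the GPU.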

From www.modb.pro

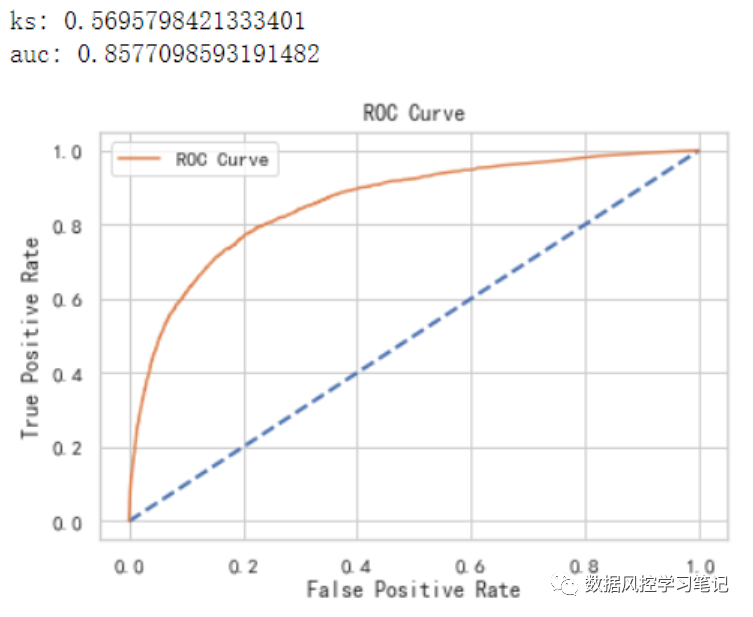

Simple XGBoost modeling: hands-on with the Kaggle project GiveMeSomeCredit (墨天轮, Motianlun)

From www.uber.com

Productionizing Distributed XGBoost to Train Deep Tree Models with …

From codeantenna.com

Plotting learning curves for a model trained with XGBoost's train method (CodeAntenna)

From dzone.com

XGBoost: A Deep Dive Into Boosting (DZone)

From dalex.drwhy.ai

dalex: xgboost

From github.com

train_xgb_boost: TypeError: Expected sequence or array-like, got …

From blog.csdn.net

XGBoost: Principles and Practice (CSDN blog)

From www.anyscale.com

How to Speed Up XGBoost Model Training (Anyscale)

From www.researchgate.net

Prediction result of train data from XGBoost model. (a) Results compared …

From wandb.ai

xgboostwaterconsumption Workspace (Weights & Biases)

From blog.csdn.net

[Machine Learning] Using weights when training XGBoost, and a summary of how the AUC metric is computed (CSDN blog)

From www.shiksha.com

XGBoost Algorithm in Machine Learning (Shiksha Online)

From mljar.com

How to use early stopping in Xgboost training? (MLJAR)

From www.researchgate.net

10 XGB predictions of SWASH test data (grey markers) and observed …

From blog.csdn.net

ML with XGBoost: binary classification on the Pima Indians Diabetes dataset with an XGBoost model (predicting whether diabetes develops within 5 years)

From blog.csdn.net

Decision tree visualization: visualizing xgb and lgb models (CSDN blog)

From zhuanlan.zhihu.com

[Mini Tutorial] Are there three ways to compute XGBoost feature importance? (Zhihu)

From docs.wandb.ai

XGBoost (Weights & Biases Documentation)

From stackoverflow.com

python: The difference between feature importance and feature weights …

From www.researchgate.net

Mean feature importance given by XGBoost using 5 different seeds to …

From dmlc.github.io

An Introduction to XGBoost R package

From docs.primihub.com

Vertical federated XGBoost (PrimiHub)

From www.researchgate.net

Schematic illustration of the XGBoost model (scientific diagram)

From devopedia.org

XGBoost

From machinelearningmastery.com

XGBoost With Python

From www.youtube.com

R: how to specify train and test indices for xgb.cv in R package …

From aws.amazon.com

Utilizing XGBoost training reports to improve your models (AWS Machine …)

From towardsdatascience.com

Selecting Optimal Parameters for XGBoost Model Training, by Andrej …

From zhuanlan.zhihu.com

Hands-on XGBoost: one article is all you need (Zhihu)

From www.analyticsvidhya.com

XGBoost Parameters: XGBoost Parameter Tuning

From discuss.xgboost.ai

Results inconsistent when using weights and gpu_hist (R) (XGBoost discussion forum)

From neptune.ai

XGBoost: Everything You Need to Know

From arctansin.github.io

Chapter 2: Model Building (Machine Learning Project)