Multi Scale Training PyTorch

The code runs with recent PyTorch. Distributed training is a model training paradigm that spreads the training workload across multiple worker nodes, therefore significantly … The recordings of our invited talks are now available on YouTube. This tutorial goes over how to set up a …
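The page never shows what multi-scale training looks like in code. One common form resizes each mini-batch to a randomly chosen resolution so the network sees objects at several scales. The following is a minimal sketch under stated assumptions: a toy fully convolutional classifier (`TinyFCN`, hypothetical) whose adaptive pooling layer accepts any input size, a hypothetical `SCALES` list of multiples of 32, and synthetic tensors in place of a real `DataLoader`.

```python
import random

import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical candidate resolutions (multiples of 32).
SCALES = [224, 256, 288, 320]


class TinyFCN(nn.Module):
    """Toy fully convolutional classifier; adaptive pooling accepts any input size."""

    def __init__(self, num_classes: int = 10) -> None:
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
            nn.ReLU(inplace=True),
        )
        self.pool = nn.AdaptiveAvgPool2d(1)  # (N, 32, h, w) -> (N, 32, 1, 1)
        self.head = nn.Linear(32, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(self.pool(self.features(x)).flatten(1))


def resize_batch(images: torch.Tensor, size: int) -> torch.Tensor:
    """Bilinearly resize an (N, C, H, W) batch to (N, C, size, size)."""
    return F.interpolate(images, size=(size, size), mode="bilinear", align_corners=False)


def train_multiscale(model, batches, optimizer, scales=SCALES):
    """One pass over `batches`, drawing a new random resolution for every batch."""
    model.train()
    used = []
    for images, labels in batches:
        size = random.choice(scales)
        loss = F.cross_entropy(model(resize_batch(images, size)), labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        used.append(size)
    return used


if __name__ == "__main__":
    random.seed(0)
    torch.manual_seed(0)
    model = TinyFCN()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # Synthetic batches stand in for a real DataLoader.
    batches = [(torch.randn(4, 3, 256, 256), torch.randint(0, 10, (4,))) for _ in range(3)]
    print(train_multiscale(model, batches, optimizer))  # sizes used per batch
```

Resizing after batching leaves the data pipeline unchanged; the trade-off is that every batch is first loaded at its original size.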

From www.scaler.com

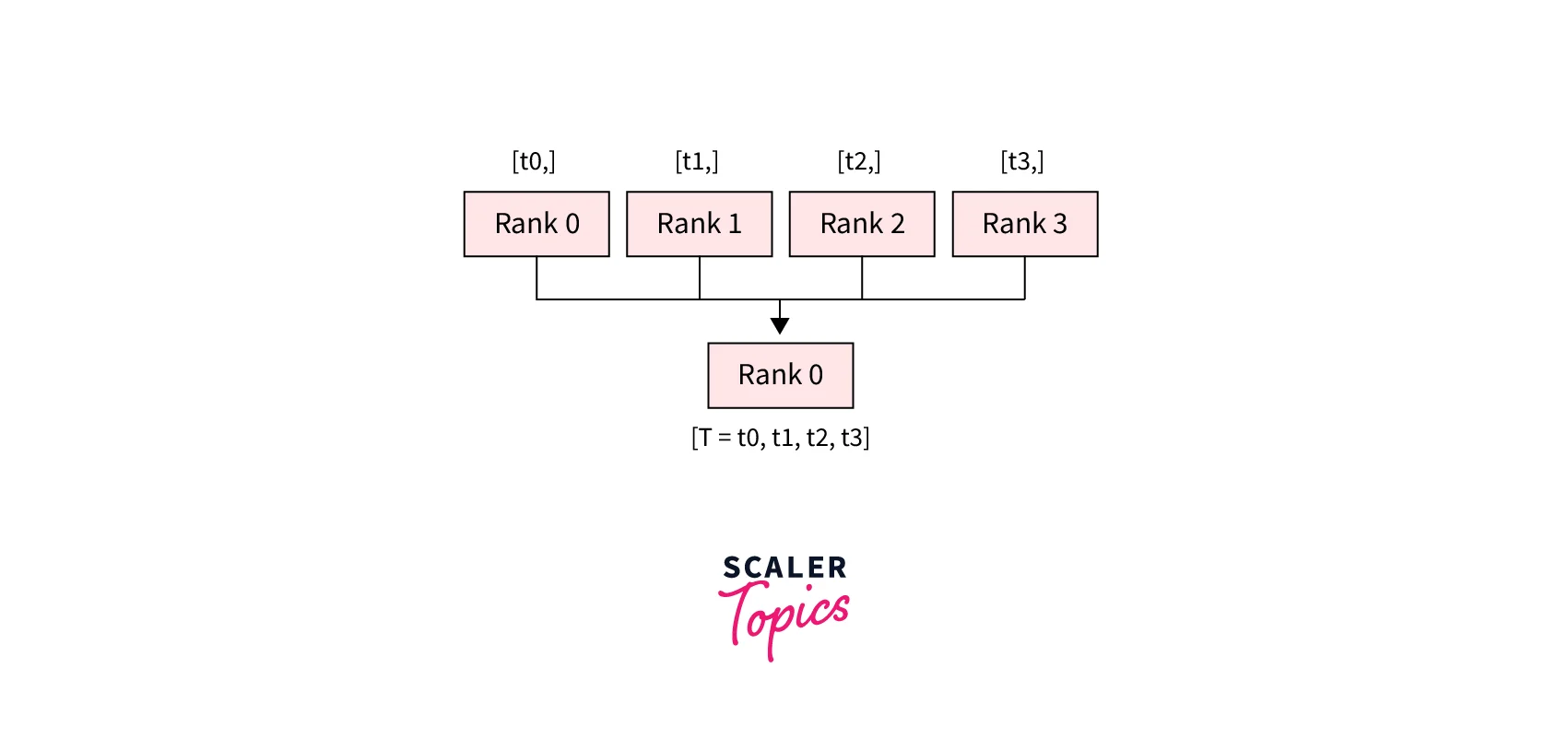

PyTorch API for Distributed Training | Scaler Topics

From www.telesens.co

Distributed data parallel training using Pytorch on AWS | Telesens
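The Telesens article covers distributed data parallel training. As a rough sketch of the moving parts in PyTorch's `DistributedDataParallel` (DDP), not the article's own code: each process joins a process group, a `DistributedSampler` hands it a disjoint shard of the data, and DDP all-reduces gradients during `backward()`. The example assumes the CPU-only `gloo` backend, a file-based rendezvous, `world_size=1` so it runs in a single process, and a toy linear model; `run_ddp` is a hypothetical helper name.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler


def run_ddp(rank: int, world_size: int, init_file: str) -> float:
    """Train a toy model for one epoch inside one DDP process (hypothetical helper)."""
    # Assumption: file-based rendezvous avoids needing a free TCP port; real
    # jobs usually rely on env:// with variables exported by torchrun.
    dist.init_process_group(
        backend="gloo",  # CPU-friendly; "nccl" is the usual choice on GPUs
        init_method=f"file://{init_file}",
        rank=rank,
        world_size=world_size,
    )
    try:
        torch.manual_seed(0)
        dataset = TensorDataset(torch.randn(64, 8), torch.randn(64, 1))
        # Each rank iterates over its own disjoint shard of the dataset.
        sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank, shuffle=True)
        loader = DataLoader(dataset, batch_size=16, sampler=sampler)

        model = DDP(nn.Linear(8, 1))  # gradients are averaged across ranks
        optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

        sampler.set_epoch(0)  # call with the epoch number so shuffling differs per epoch
        last_loss = 0.0
        for x, y in loader:
            loss = nn.functional.mse_loss(model(x), y)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            last_loss = loss.item()
        return last_loss
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    # world_size=1 keeps the sketch runnable on one CPU; real runs start
    # one process per GPU, each with its own rank.
    path = os.path.join(tempfile.mkdtemp(), "ddp_init")
    print(run_ddp(rank=0, world_size=1, init_file=path))
```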

From imagetou.com

Pytorch Multi Class Tutorial | Image to u

From www.tutorialexample.com

Implement Mixed Precision Training with GradScaler in PyTorch | PyTorch Tutorial

From thevatsalsaglani.medium.com

Training and Deploying a Multi-Label Image Classifier using PyTorch, Flask, ReactJS and Firebase
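In a multi-label classifier like the one in the Medium article, an image may carry several labels at once, so softmax cross-entropy is usually replaced by an independent sigmoid per label. A minimal sketch of the output head, loss, and thresholding, assuming 5 hypothetical labels, a 16-dimensional feature vector from some backbone, and a 0.5 decision threshold:

```python
import torch
import torch.nn as nn

NUM_LABELS = 5    # hypothetical number of possible tags
FEATURE_DIM = 16  # hypothetical size of the backbone's feature vector

head = nn.Linear(FEATURE_DIM, NUM_LABELS)  # one logit per label, no softmax
criterion = nn.BCEWithLogitsLoss()          # sigmoid + binary cross-entropy, per label


def predict_labels(logits: torch.Tensor, threshold: float = 0.5) -> torch.Tensor:
    """Turn logits into a multi-hot 0/1 matrix; each label is decided independently."""
    return (torch.sigmoid(logits) > threshold).int()


# One training step on fake features; targets are multi-hot floats.
features = torch.randn(3, FEATURE_DIM)
targets = torch.tensor([[1., 0., 1., 0., 0.],
                        [0., 1., 0., 0., 1.],
                        [1., 1., 0., 0., 0.]])
optimizer = torch.optim.SGD(head.parameters(), lr=0.1)
loss = criterion(head(features), targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(predict_labels(head(features)))
```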

From github.com

pytorchmultiGPUtrainingtutorial/singlemachineandmultiGPUDistributedDataParallelslurm

From imagetou.com

Pytorch Training Multi Gpu | Image to u

From debuggercafe.com

Multi-Class Semantic Segmentation Training using PyTorch
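In multi-class semantic segmentation every pixel gets a class index, and combining it with multi-scale training adds one pitfall: the label mask must be resized with nearest-neighbour interpolation so it never contains blended, invalid class values. The sketch is illustrative only and is not taken from the DebuggerCafe article; the toy two-layer model, `NUM_CLASSES = 3`, the scale list, and treating 255 as an ignored "void" label are all assumptions.

```python
import random

import torch
import torch.nn as nn
import torch.nn.functional as F

NUM_CLASSES = 3  # hypothetical


def resize_pair(image: torch.Tensor, mask: torch.Tensor, size: int):
    """Resize images (N, C, H, W) and integer masks (N, H, W) to size x size."""
    image = F.interpolate(image, size=(size, size), mode="bilinear", align_corners=False)
    # Nearest-neighbour copies existing label values instead of averaging them.
    mask = F.interpolate(mask.unsqueeze(1).float(), size=(size, size), mode="nearest")
    return image, mask.squeeze(1).long()


# Toy per-pixel classifier: (N, 3, H, W) -> (N, NUM_CLASSES, H, W) logits.
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, NUM_CLASSES, kernel_size=1),
)
# Assumption: 255 marks "void" pixels that should not contribute to the loss.
criterion = nn.CrossEntropyLoss(ignore_index=255)


def seg_step(image, mask, optimizer, scales=(256, 320, 384)):
    """One multi-scale step; cross-entropy is averaged over all non-ignored pixels."""
    image, mask = resize_pair(image, mask, random.choice(scales))
    loss = criterion(model(image), mask)  # logits (N, K, H, W) vs targets (N, H, W)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item(), tuple(mask.shape)


optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
image = torch.randn(2, 3, 300, 300)
mask = torch.randint(0, NUM_CLASSES, (2, 300, 300))
print(seg_step(image, mask, optimizer))
```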

From lightning.ai

How to Speed Up PyTorch Model Training | Lightning AI

From magazine.sebastianraschka.com

Accelerating PyTorch Model Training

From www.anyscale.com

Introducing Ray Lightning: Multi-node PyTorch Lightning training made easy | Anyscale

From www.anyscale.com

Fast and Scalable Model Training with PyTorch and Ray

From github.com

GitHub | CaoWGG/multiscaletraining: multiscale training in pytorch
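The CaoWGG repository is listed as multi-scale training in PyTorch; the sketch here does not reproduce its code. One common pattern moves resizing into the data pipeline: a custom batch sampler chooses a single size per batch and passes `(index, size)` pairs to the dataset, so every sample in a batch can still be stacked into one tensor. `MultiScaleBatchSampler` and `ResizingDataset` are hypothetical names, and the in-memory tensors stand in for images loaded from disk.

```python
import random
from typing import Iterator, List, Sequence, Tuple

import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, Dataset, Sampler


class MultiScaleBatchSampler(Sampler[List[Tuple[int, int]]]):
    """Yields batches of (index, size) pairs; every pair in a batch shares one size."""

    def __init__(self, num_samples: int, batch_size: int, sizes: Sequence[int], shuffle: bool = True):
        self.num_samples = num_samples
        self.batch_size = batch_size
        self.sizes = list(sizes)
        self.shuffle = shuffle

    def __iter__(self) -> Iterator[List[Tuple[int, int]]]:
        order = list(range(self.num_samples))
        if self.shuffle:
            random.shuffle(order)
        for start in range(0, self.num_samples, self.batch_size):
            size = random.choice(self.sizes)  # one resolution per batch
            yield [(i, size) for i in order[start:start + self.batch_size]]

    def __len__(self) -> int:
        return (self.num_samples + self.batch_size - 1) // self.batch_size


class ResizingDataset(Dataset):
    """Toy dataset whose __getitem__ receives (index, size) from the sampler."""

    def __init__(self, images: torch.Tensor, labels: torch.Tensor):
        self.images, self.labels = images, labels

    def __len__(self) -> int:
        return len(self.images)

    def __getitem__(self, key: Tuple[int, int]):
        idx, size = key
        img = F.interpolate(self.images[idx].unsqueeze(0), size=(size, size),
                            mode="bilinear", align_corners=False).squeeze(0)
        return img, self.labels[idx]


if __name__ == "__main__":
    dataset = ResizingDataset(torch.randn(10, 3, 64, 64), torch.arange(10))
    sampler = MultiScaleBatchSampler(len(dataset), batch_size=4, sizes=[32, 48, 64])
    loader = DataLoader(dataset, batch_sampler=sampler)
    for images, labels in loader:
        print(images.shape, labels.tolist())
```

Because `__getitem__` does the resizing, the work runs inside `DataLoader` worker processes when `num_workers > 0`.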

From aws.amazon.com

Build high-performance ML models using PyTorch 2.0 on AWS, Part 1 | AWS Machine Learning Blog

From twitter.com

PyTorch on Twitter: "📢 Announcing TorchMultimodal Beta, a PyTorch domain library for training …"

From www.youtube.com

54 Quantization in PyTorch Mixed Precision Training Deep Learning Neural Network | YouTube

From devblog.pytorchlightning.ai

Accessible Multi-Billion Parameter Model Training with PyTorch Lightning + DeepSpeed by …

From www.vrogue.co

How To Add Additional Layers In A Pre-Trained Model Using Pytorch | Vrogue
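A common way to add layers to a pretrained model is to freeze the existing weights and append a new trainable head. The sketch assumes a tiny stand-in `backbone` (hypothetical) in place of a real pretrained network and a hypothetical 4-class task; with a torchvision ResNet, the same idea is usually applied by replacing its `fc` attribute.

```python
import torch
import torch.nn as nn

# Stand-in for a pretrained feature extractor (hypothetical): outputs (N, 8) features.
backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)

# Freeze the pretrained weights so only the new layers are trained.
for p in backbone.parameters():
    p.requires_grad = False

# Append the additional layers after the backbone.
model = nn.Sequential(
    backbone,
    nn.Linear(8, 32),
    nn.ReLU(),
    nn.Dropout(0.2),
    nn.Linear(32, 4),  # hypothetical 4-class output
)

# Hand only the trainable (new) parameters to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-3)
print(model(torch.randn(2, 3, 16, 16)).shape)
```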

From laptrinhx.com

Efficient PyTorch training with Vertex AI | LaptrinhX / News

From www.scaler.com

Distributed Training with PyTorch | Scaler Topics

From github.com

GitHub | qianlimalab/Scaleteaching: This is an official pytorch implementation for paper …

From www.youtube.com

PyTorch Lightning 10 Multi GPU Training | YouTube

From www.youtube.com

Multivariate Time Series Classification Tutorial with LSTM in PyTorch, PyTorch Lightning and …
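For multivariate time-series classification with an LSTM, the input is a `(batch, time, features)` tensor and the classifier typically reads the final hidden state. A minimal sketch, assuming illustrative sizes (64 hidden units, 2 layers, dropout 0.2) and not the tutorial's exact model; `SequenceClassifier` is a hypothetical name.

```python
import torch
import torch.nn as nn


class SequenceClassifier(nn.Module):
    """LSTM over (batch, time, features); classifies from the last hidden state."""

    def __init__(self, n_features: int, n_classes: int, hidden_size: int = 64, num_layers: int = 2):
        super().__init__()
        self.lstm = nn.LSTM(
            n_features, hidden_size, num_layers=num_layers,
            batch_first=True,  # inputs are (batch, time, features)
            dropout=0.2,       # applied between stacked LSTM layers
        )
        self.classifier = nn.Linear(hidden_size, n_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        _, (h_n, _) = self.lstm(x)        # h_n: (num_layers, batch, hidden_size)
        return self.classifier(h_n[-1])   # final hidden state of the top layer


model = SequenceClassifier(n_features=6, n_classes=3)
logits = model(torch.randn(4, 50, 6))  # 4 sequences, 50 time steps, 6 sensors
print(logits.shape)
```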

From opensourcebiology.eu

PyTorch/XLA SPMD: Scale Up Model Training and Serving with Automatic Parallelization | Open …

From blog.csdn.net

(Advanced PyTorch series) NormalizingFlow (normalizing flows) | CSDN Blog

From reinforz.co.jp

What Is PyTorch? Its Features, Training, and Evaluation Explained | Reinforz Insight
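Training and evaluation in PyTorch follow the same basic loop regardless of the model. A minimal sketch, assuming a classification task and a `DataLoader` that yields `(inputs, labels)` pairs; `train_one_epoch` and `evaluate` are hypothetical helper names. Note the switch between `model.train()` and `model.eval()` and the use of `torch.no_grad()` during evaluation.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, optimizer, loss_fn) -> float:
    model.train()  # enable dropout and batch-norm statistic updates
    total = 0.0
    for x, y in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        total += loss.item() * x.size(0)
    return total / len(loader.dataset)  # mean loss per sample


@torch.no_grad()  # no gradient tracking during evaluation
def evaluate(model, loader) -> float:
    model.eval()  # disable dropout, use running batch-norm statistics
    correct = 0
    for x, y in loader:
        correct += (model(x).argmax(dim=1) == y).sum().item()
    return correct / len(loader.dataset)  # accuracy


if __name__ == "__main__":
    torch.manual_seed(0)
    X = torch.randn(200, 2)
    Y = (X[:, 0] > 0).long()  # synthetic, linearly separable labels
    loader = DataLoader(TensorDataset(X, Y), batch_size=32, shuffle=True)
    model = nn.Linear(2, 2)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.5)
    for epoch in range(5):
        print(epoch, train_one_epoch(model, loader, optimizer, nn.CrossEntropyLoss()))
    print("accuracy:", evaluate(model, loader))
```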

From www.youtube.com

Training on multiple GPUs and multinode training with PyTorch DistributedDataParallel | YouTube

From github.com

multiscale training and single scale training · Issue 21 · nmhkahn/CARNpytorch · GitHub

From lambdalabs.com

Multi-node PyTorch Distributed Training Guide For People In A Hurry
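Multi-node launches with `torchrun` start one process per GPU on every node and export `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` (plus `MASTER_ADDR` and `MASTER_PORT`) to each process. The helpers below are a hypothetical sketch of reading those variables and pinning each process to its GPU; the host name, port, and node/GPU counts in the comment are placeholders.

```python
import os

import torch


def distributed_env() -> dict:
    """Read the per-process settings torchrun exports (defaults = single process)."""
    return {
        "rank": int(os.environ.get("RANK", 0)),              # global rank across all nodes
        "local_rank": int(os.environ.get("LOCAL_RANK", 0)),  # rank within this node
        "world_size": int(os.environ.get("WORLD_SIZE", 1)),  # total number of processes
    }


def select_device(local_rank: int) -> torch.device:
    """One process per GPU: the process with local_rank k uses cuda:k."""
    if torch.cuda.is_available():
        torch.cuda.set_device(local_rank)
        return torch.device("cuda", local_rank)
    return torch.device("cpu")


# Placeholder launch, run once per node (node_rank 0 and 1) on 2 nodes x 8 GPUs:
#   torchrun --nnodes=2 --nproc_per_node=8 --node_rank=0 \
#            --master_addr=<node0-host> --master_port=29500 train.py
# Inside train.py, dist.init_process_group("nccl") reads these variables (env://).
env = distributed_env()
print(env, select_device(env["local_rank"]))
```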

From www.vrogue.co

Pytorch Training Model | vrogue.co

From engineering.fb.com

How PyTorch powers AI training and inference | Engineering at Meta

From www.vrogue.co

Yolov3 Training And Inference In Pytorch | vrogue.co
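YOLO-family detectors are a well-known user of multi-scale training; the YOLOv2 paper describes picking a new input size from {320, 352, …, 608} every 10 batches. Detection adds one step: bounding boxes stored in absolute pixel coordinates must be rescaled along with the image. The sketch assumes `(x1, y1, x2, y2)` pixel boxes, a plain stretch-resize (not letterboxing), and all images in a batch sharing one original size; `pick_size` and `resize_with_boxes` are hypothetical helpers.

```python
import random

import torch
import torch.nn.functional as F

# 320, 352, ..., 608: the multiples of 32 described in the YOLOv2 paper.
YOLO_SIZES = tuple(range(320, 609, 32))


def pick_size(batch_idx: int, current, every: int = 10, sizes=YOLO_SIZES):
    """Draw a new random size every `every` batches; otherwise keep `current`."""
    if batch_idx % every == 0 or current is None:
        return random.choice(sizes)
    return current


def resize_with_boxes(images: torch.Tensor, boxes: torch.Tensor, size: int):
    """Stretch-resize (N, C, H, W) images to size x size and rescale pixel boxes.

    `boxes` is a (..., 4) tensor of (x1, y1, x2, y2) in the original pixel space.
    """
    _, _, h, w = images.shape
    images = F.interpolate(images, size=(size, size), mode="bilinear", align_corners=False)
    scale = boxes.new_tensor([size / w, size / h, size / w, size / h])
    return images, boxes * scale


if __name__ == "__main__":
    random.seed(0)
    size = None
    for batch_idx in range(25):
        size = pick_size(batch_idx, size)
        if batch_idx % 10 == 0:
            print(f"batch {batch_idx}: training at {size}x{size}")
```

Boxes in normalized [0, 1] coordinates, as YOLO label files use, need no rescaling under a stretch-resize; letterboxing instead preserves aspect ratio but requires an offset as well as a scale.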

From www.aime.info

Multi GPU training with Pytorch