How To Decide Executors In Spark

In Apache Spark, the number of executors and the number of cores per executor are two important configuration parameters that can significantly affect the resource utilization and performance of your application. Tuning the number of executors, the cores per executor, and the executor memory is among the most critical aspects of running a Spark job on a cluster. A common starting point is to set aside 1 core and 1 GB of RAM on each node for the OS and Hadoop daemons; on a node with 16 cores and 64 GB of RAM, that leaves 15 cores and 63 GB available to Spark. The number of executors can also be set from within the program through the Spark session configuration.
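The per-node arithmetic above can be sketched in a few lines of Python. This is only an illustration: the helper names `executor_conf` and `apply_conf`, the choice of 5 cores per executor, and the 10% memory headroom are assumptions, not values from the text. Only the "reserve 1 core and 1 GB per node" step comes from the source. The property names `spark.executor.instances`, `spark.executor.cores`, and `spark.executor.memory` are standard Spark settings.

```python
import math


def executor_conf(nodes, cores_per_node, mem_gb_per_node,
                  cores_per_executor=5, overhead_fraction=0.10):
    """Return a dict of Spark properties sized from per-node hardware."""
    # From the text: keep 1 core and 1 GB per node for the OS and Hadoop daemons.
    usable_cores = cores_per_node - 1
    usable_mem_gb = mem_gb_per_node - 1

    # Assumption: a fixed number of cores per executor (5 is only a rule of thumb).
    executors_per_node = usable_cores // cores_per_executor
    if executors_per_node < 1:
        raise ValueError("not enough cores per node for one executor")

    # Split usable memory evenly, then leave headroom for off-heap overhead
    # so that heap + overhead still fits in each executor's share.
    share_gb = usable_mem_gb / executors_per_node
    heap_gb = math.floor(share_gb / (1 + overhead_fraction))

    return {
        "spark.executor.instances": str(executors_per_node * nodes),
        "spark.executor.cores": str(cores_per_executor),
        "spark.executor.memory": f"{heap_gb}g",
    }


def apply_conf(builder, conf):
    """Apply each property through builder.config(key, value)."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder


# One node with 16 cores and 64 GB, as in the example above.
conf = executor_conf(nodes=1, cores_per_node=16, mem_gb_per_node=64)
print(conf)
```

With PySpark installed, the result could be passed to a session builder, for example `apply_conf(SparkSession.builder.appName("demo"), conf).getOrCreate()`. Executor settings of this kind generally need to be in place before the session is created, so treat the numbers as a starting point to validate against the Spark UI rather than a final answer.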

from sparkbyexamples.com


Spark UI Understanding Spark Execution Spark By {Examples}


From blogs.perficient.com

Azure Databricks Capacity Planning for optimum Spark Cluster / Blogs

From kontext.tech

Spark Basics Application, Driver, Executor, Job, Stage and Task

From www.youtube.com

Spark Architecture Simplified for Beginners YARN Client mode Spark

From www.analyticsvidhya.com

Performance Tuning on Apache Spark for Data Engineers

From sparkbyexamples.com

What is Spark Executor Spark By {Examples}

From backstage.forgerock.com

Prepare Spark Environment Autonomous Identity

From www.vrogue.co

Databricks Vs Synapse Spark Pools What When And Where vrogue.co

From www.gangofcoders.net

What are workers, executors, cores in Spark Standalone cluster? Gang

From sparkbyexamples.com

Spark Set JVM Options to Driver & Executors Spark By {Examples}

From www.youtube.com

Spark Stages And Tasks (Part1) Spark Driver and Executor Bigdata

From tianlangstudio.gitbooks.io

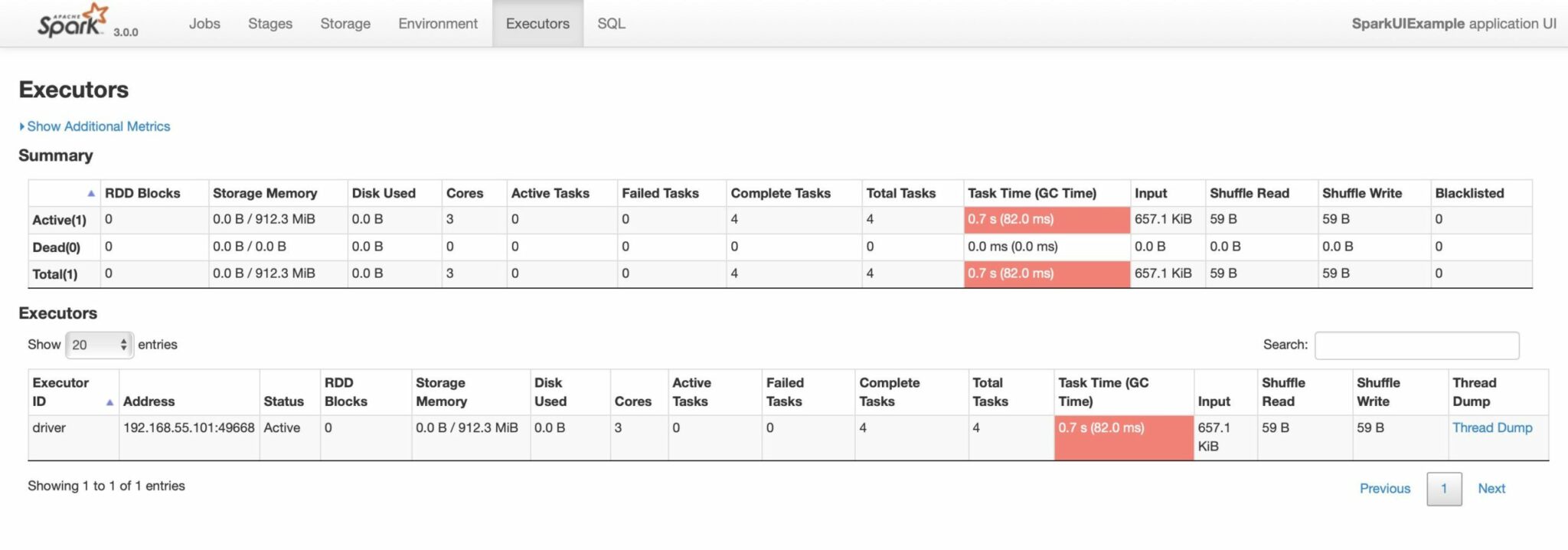

Executors Tab · Mastering Apache Spark

From multitechnewstoday.blogspot.com

Basics of Apache Spark in Big Data Hadoop OnlineITGuru Multitechnews

From allbigdatathings.blogspot.com

Controlling Executors and Cores in Spark Applications

From www.qubole.com

An Introduction to Apache Spark Optimization in Qubole

From www.prathapkudupublog.com

Snippets Spark Executors and Drivers

From subscription.packtpub.com

Apache Spark Quick Start Guide

From www.simplilearn.com

Implementation of Spark Applications Tutorial Simplilearn

From stackoverflow.com

apache spark What is and how to control Memory Storage in Executors

From www.youtube.com

How to Choose Number of Executors and memory in Spark jobs? YouTube

From www.slideserve.com

PPT Deploying and Administering Spark PowerPoint Presentation, free

From www.gangofcoders.net

How to set Apache Spark Executor memory Gang of Coders

From spot.io

How to run Spark on reliably on spot instances Spot.io

From medium.com

Spark tasks from driver to executors and more by Feng Li Medium

From duyanghao.github.io

Executors Scheduling in Spark on k8s

From spark.apache.org

UI Spark 3.0.0preview Documentation

From www.youtube.com

Spark Executor Core & Memory Explained {Tamil} YouTube

From stackoverflow.com

pyspark Spark in standalone mode do not take more than 2 executors

From medium.com

An Introduction to Apache Spark. A flexible data processing framework

From www.simplilearn.com

Spark Parallelize The Essential Element of Spark

From www.edureka.co

Apache Spark Architecture Distributed System Architecture Explained

From www.youtube.com

How to decide number of executors Apache Spark Interview Questions

From data-flair.training

Apache Spark Executor for Executing Spark Tasks DataFlair

From itnext.io

Apache Spark Internals Tips and Optimizations by Javier Ramos ITNEXT

From data-flair.training

How Apache Spark Works Runtime Spark Architecture DataFlair
