PyTorch KL Divergence Loss

KL divergence is a measure of the difference between two probability distributions. The resources collected here cover how to compute and use it in PyTorch: the built-in loss function and its documentation (parameters, shape, return type, and deprecation warnings), a discussion thread about why the KL divergence loss can come out negative in PyTorch and how to fix it, and tutorials on implementing and training variational autoencoders (VAEs), a kind of neural network for dimensionality reduction. The VAE material first looks at what a normal distribution looks like and how to compute the KL divergence from it, since that term is the objective function used to optimize the VAE's latent space embedding, and it includes examples of VAEs applied to the MNIST dataset along with latent space visualization.
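To make the ideas above concrete, here is a minimal sketch in plain Python (standard library only, so it runs without PyTorch installed). It is an illustration under stated assumptions, not code from any of the linked pages. All function names are hypothetical. `pointwise_kl_loss` mirrors the pointwise form `target * (log(target) - input)` that `torch.nn.functional.kl_div` documents for its default `log_target=False` setting, where `input` is expected to hold log-probabilities. `gaussian_kl_to_standard_normal` is the usual closed form for the KL term between a diagonal Gaussian and a standard normal, as commonly used in VAE losses.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    # Terms with p_i == 0 contribute nothing, by the usual convention.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def pointwise_kl_loss(inp, target):
    """Sum of target * (log(target) - inp) over all elements.

    Hypothetical helper: it mirrors the pointwise formula documented for
    torch.nn.functional.kl_div, where `inp` is assumed to be log-probabilities.
    """
    return sum(t * (math.log(t) - x) for x, t in zip(inp, target) if t > 0)


def gaussian_kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


p = [0.7, 0.2, 0.1]  # target distribution
q = [0.5, 0.3, 0.2]  # model distribution

# Correct usage: the first argument holds log-probabilities of the model.
proper = pointwise_kl_loss([math.log(x) for x in q], p)

# Mistaken usage: raw probabilities are passed where log-probabilities are expected.
wrong = pointwise_kl_loss(q, p)

print("KL(p || q) computed directly:", kl_divergence(p, q))
print("pointwise form, log-prob input:", proper)
print("pointwise form, raw-prob input:", wrong)
print("Gaussian KL, mu=[1.0], logvar=[0.0]:", gaussian_kl_to_standard_normal([1.0], [0.0]))
```

With log-probabilities as input, the pointwise form agrees with the direct definition and is non-negative. Feeding raw probabilities instead yields a negative value for these inputs, which is one way a KL loss can turn negative.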

From www.sefidian.com

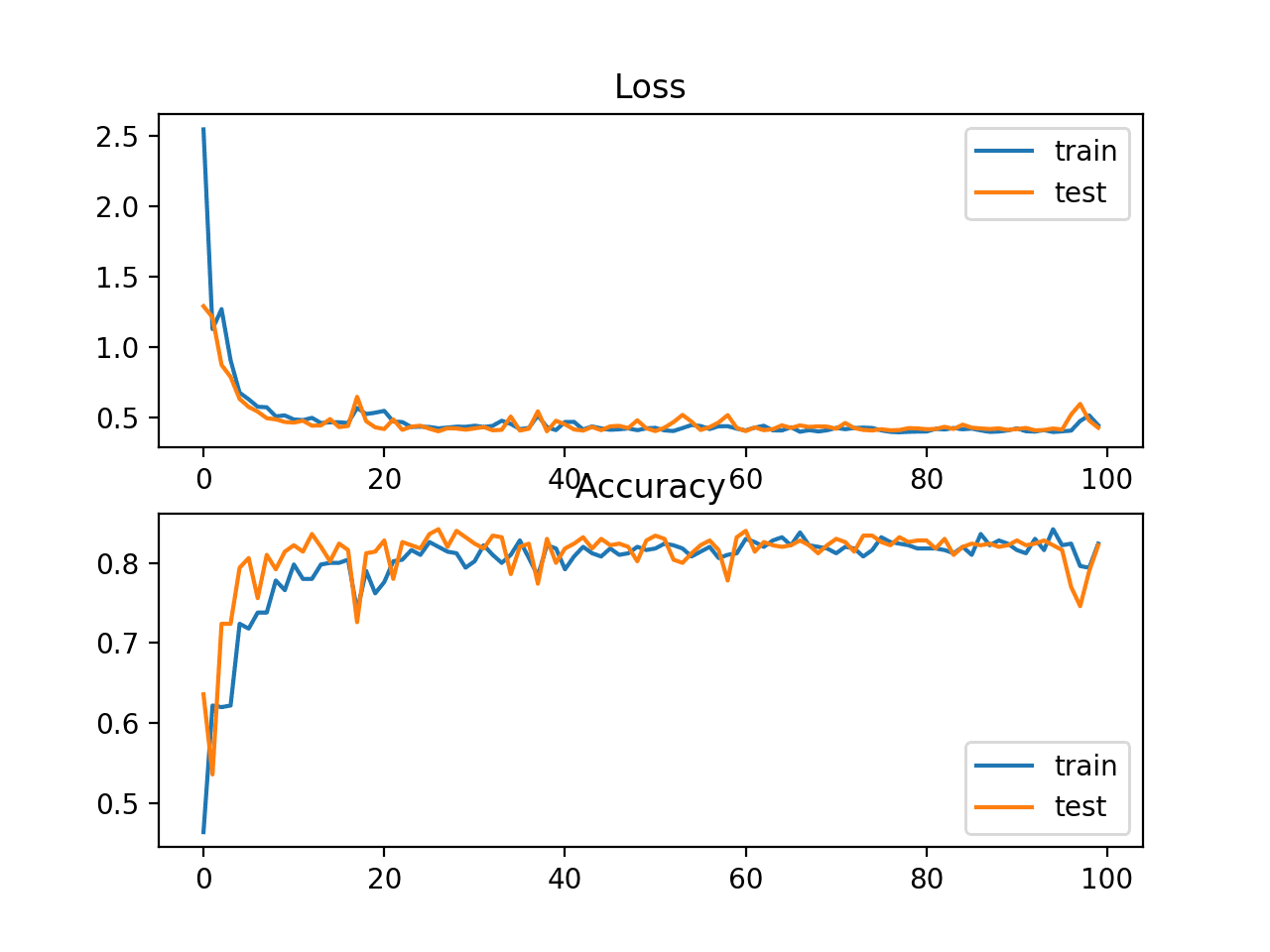

Common loss functions for training deep neural networks with Keras examples

From www.countbayesie.com

Kullback-Leibler Divergence Explained — Count Bayesie

From github.com

Backpropagation not working on KL divergence loss function due to data type mismatch · Issue

From debuggercafe.com

Sparse Autoencoders using KL Divergence with PyTorch

From stackoverflow.com

python - Different results in computing KL Divergence using Pytorch Distributions vs manually

From www.researchgate.net

Reconstruction loss and Kullback-Leibler (KL) divergence to train VAE.

From www.youtube.com

The KL Divergence: Data Science Basics - YouTube

From www.researchgate.net

Joint optimisation of the reconstruction loss, the KL divergence,...

From code-first-ml.github.io

Understanding KL-Divergence — CodeFirstML

From analyticsindiamag.com

Ultimate Guide To Loss functions In PyTorch With Python Implementation

From dxoqopbet.blob.core.windows.net

Pytorch Kl Divergence Matrix at Susan Perry blog

From tiao.io

Density Ratio Estimation for KL Divergence Minimization between Implicit Distributions - Louis Tiao

From blog.paperspace.com

PyTorch Loss Functions

From www.liberiangeek.net

How to Calculate KL Divergence Loss of Neural Networks in PyTorch? - Liberian Geek

From www.liberiangeek.net

How to Calculate KL Divergence Loss in PyTorch? - Liberian Geek

From github.com

VAE loss function · Issue #294 · pytorch/examples · GitHub

From github.com

GitHub - matanle51/gaussian_kld_loss_pytorch: KL divergence between two Multivariate/Univariate

From github.com

Is KL-Divergence loss missing in Aligner loss definition? · Issue #29 · lucidrains

From www.v7labs.com

The Essential Guide to Pytorch Loss Functions

From www.youtube.com

KL Divergence - YouTube

From www.researchgate.net

Four different loss functions: KL divergence loss (KL), categorical...

From github.com

Distribution `kl_divergence` method · Issue #69468 · pytorch/pytorch · GitHub

From github.com

GitHub - cxliu0/KLLosspytorch: A pytorch reimplementation of KLLoss (CVPR'2019)

From www.aporia.com

Kullback-Leibler Divergence - Aporia

From iq.opengenus.org

KL Divergence

From medium.com

Variational AutoEncoder, and a bit KL Divergence, with PyTorch - by Tingsong Ou - Medium

From www.youtube.com

Introduction to KL-Divergence, Simple Example with usage in TensorFlow Probability - YouTube

From www.researchgate.net

Information loss and KL divergence on (a) 'Coffee' dataset (up), (b)...

From h1ros.github.io

Loss Functions in Deep Learning with PyTorch - Step-by-step Data Science

From tonydeep.github.io

What is Cross-Entropy Loss?
