Time Distributed Layer. The TimeDistributed layer in Keras is a wrapper layer that applies a layer to every temporal slice of an input. In other words, it applies the same transformation to each item in a list of input data, so it can work with several inputs. Every input should be at least 3D, and the dimension at index one is treated as the temporal dimension. When it wraps a Dense layer placed after an LSTM, TimeDistributed achieves this by applying the same Dense layer (same weights) to the LSTM's outputs one time step at a time, so the wrapped dense_layer receives the input for a single timestep.
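A minimal sketch of the behaviour described above, assuming TensorFlow's bundled Keras is installed. The sizes (4 samples, 10 timesteps, 16 features, 8 units) and the variable names are hypothetical and chosen only for illustration. It wraps a Dense layer in TimeDistributed and checks that the time axis is preserved while a single set of weights is shared across all timesteps.

```python
import numpy as np
import tensorflow as tf

# Hypothetical sizes, chosen only for illustration.
batch, timesteps, features, units = 4, 10, 16, 8

# The input is 3D once the batch axis is included: (batch, timesteps, features).
inputs = tf.keras.Input(shape=(timesteps, features))

# One Dense layer (one kernel, one bias) is applied to every temporal slice.
outputs = tf.keras.layers.TimeDistributed(tf.keras.layers.Dense(units))(inputs)
model = tf.keras.Model(inputs, outputs)

x = np.random.rand(batch, timesteps, features).astype("float32")
y = model.predict(x, verbose=0)

print(y.shape)               # (4, 10, 8): the time axis (index one) is kept
print(model.count_params())  # 136 = 16 * 8 + 8, independent of the 10 timesteps
```

The parameter count does not grow with the number of timesteps, which is what "same weights" means here: the wrapper reuses the Dense layer rather than creating one copy per timestep.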

From medium.com

How to work with Time Distributed data in a neural network by Patrice

From www.youtube.com

PYTHON What is the role of TimeDistributed layer in Keras? YouTube

From stackoverflow.com

tensorflow Correct usage of keras SpatialDropout2D inside

From github.com

How to pass model into TimeDistributed layer ? (or convert model to

From link.springer.com

Correction to Speech emotion recognition using time distributed 2D

From www.researchgate.net

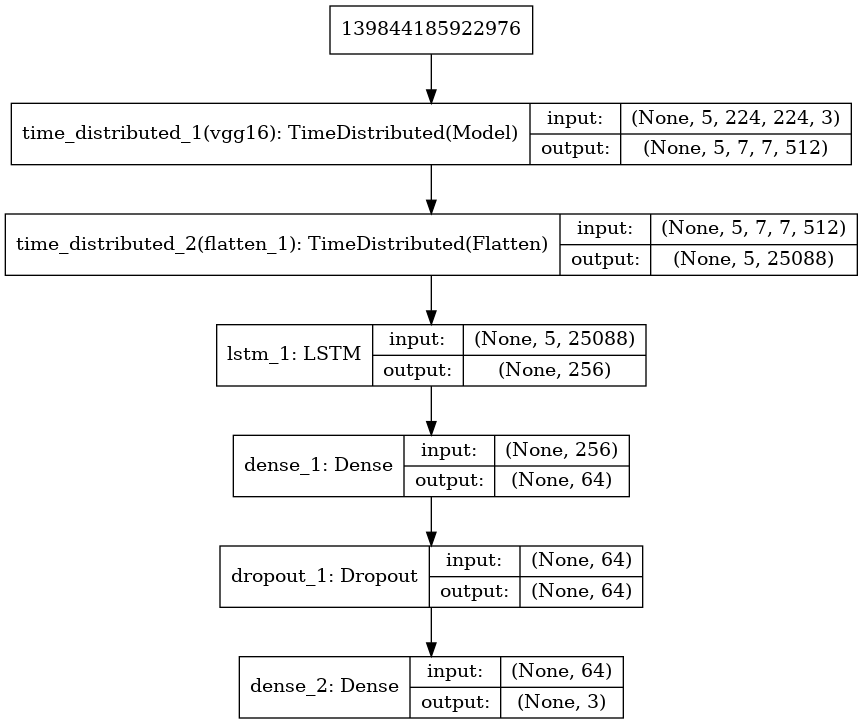

Structure of Time Distributed CNN model Download Scientific Diagram

From haibal.com

Conv3D HAIBAL

From www.pythonfixing.com

[FIXED] How to implement timedistributed dense (TDD) layer in PyTorch

From www.researchgate.net

The structure of time distributed convolutional gated recurrent unit

From github.com

how the loss is calculated for timedistributed layer · Issue 8055

From github.com

Keras TimeDistributed on a Model creates duplicate layers, and is

From www.researchgate.net

(Color online) Schematic diagram illustrating the time (i.e., layer

From exophnret.blob.core.windows.net

Time Distributed Lstm at John Carroll blog

From stackoverflow.com

keras Get the output of nested intermediate model wrapped by

From github.com

Issue with TimeDistributed + LSTM layer · Issue 18941 · kerasteam

From www.researchgate.net

Block diagram of the line based time distributed architecture

From www.researchgate.net

The architecture of the utilized neural network. The input sequence

From stackoverflow.com

tensorflow Keras TimeDistributed layer without LSTM Stack Overflow

From stackoverflow.com

keras Confused about how to implement timedistributed LSTM + LSTM

From github.com

GitHub riyaj8888/TimeDistributedLayerwithMultiHeadAttentionfor

From www.youtube.com

understanding TimeDistributed layer in Tensorflow, keras in Urdu

From www.researchgate.net

FIGURE Software pipeline for SNN input conversion to BTCHW tensors and

From www.researchgate.net

Detailed architecture with visualization of timedistributed layer

From www.researchgate.net

a Basic framework of 2D convolution layer for handling time series

From www.researchgate.net

Plots for Single Time distributed layer Model Download Scientific Diagram

From github.com

What does the TimeDistributed layer in tensorflow actually do ? and

From www.researchgate.net

Proposed structure of the 1DCNNLSTM model. Download Scientific Diagram

From zhuanlan.zhihu.com

[Mini tutorial] How to correctly use the TimeDistributed layer with LSTM networks, Zhihu