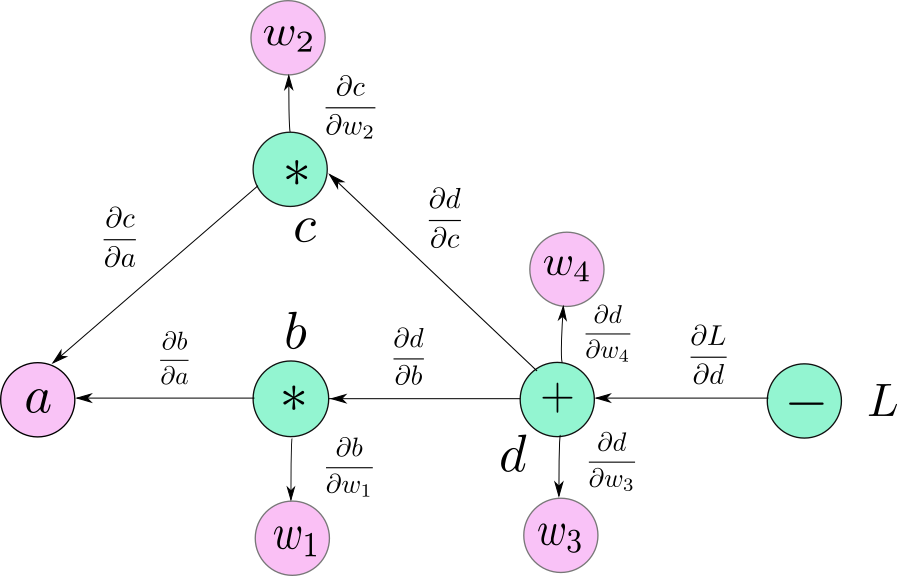

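PyTorch Clear Graph

The question comes up in several forms: "After I finish, I want to release the GPU memory of the created backward graph. I use the autograd.grad function with create_graph=True. I was reading an article which says the graph will be cleaned in the loss.backward() step. Is there any way that I could…" Or: "I'm trying to make sure that the computation graph is deleted after processing each batch, but none of the things I've tried have worked."

By default, loss.backward() frees the graph's saved intermediate tensors as it runs (retain_graph=False), so you don't need to manually free the graph. Passing create_graph=True changes this: retain_graph then defaults to True, and the graph stays in memory as long as any Python variable still refers to a tensor computed from it. If the output variable does not go out of scope in Python, you can call del on it; you can also use garbage collection (gc.collect()) to free unneeded memory.

Understanding how autograd and computation graphs work can make life with PyTorch a whole lot easier. Autograd records operations in a graph whose leaves are the input tensors and whose roots are the output tensors; by tracing this graph from roots to leaves, you can automatically compute the gradients using the chain rule. This post is based on PyTorch v1.11, so some highlighted parts may differ across versions.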
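
A minimal sketch of the points above, assuming a recent PyTorch release (the article notes its details target v1.11 and may differ across versions). trace_graph walks grad_fn.next_functions from the root toward the leaves; backward_frees_graph shows that a second backward() fails once the default call has freed the saved tensors; grad_penalty_step is a hypothetical step that uses torch.autograd.grad(..., create_graph=True) and then releases the graph with del. All three function names are illustrative, not part of PyTorch.

```python
import gc

import torch


def trace_graph(root: torch.Tensor) -> list[str]:
    """List autograd node names reachable from root.grad_fn, root first."""
    names: list[str] = []
    # Keep a strong reference to every visited node so ids cannot be reused.
    seen: dict[int, object] = {}
    stack = [root.grad_fn]
    while stack:
        node = stack.pop()
        if node is None or id(node) in seen:
            continue  # None marks an input that does not require grad
        seen[id(node)] = node
        names.append(type(node).__name__)
        # next_functions holds (node, input_index) pairs pointing toward the leaves
        stack.extend(next_node for next_node, _ in node.next_functions)
    return names


def backward_frees_graph() -> bool:
    """Return True if a second backward() fails because the first freed the graph."""
    x = torch.randn(4, requires_grad=True)
    loss = (x.exp() * 2).sum()  # exp() saves its output for the backward pass
    loss.backward()  # retain_graph defaults to False, so saved tensors are freed
    try:
        loss.backward()  # the saved intermediates are gone, so this raises
    except RuntimeError:
        return True
    return False


def grad_penalty_step():
    """Hypothetical step that differentiates through a gradient, then drops the graph."""
    x = torch.randn(4, requires_grad=True)
    y = (x ** 3).sum()
    # create_graph=True records a graph for dy_dx itself, and retain_graph
    # defaults to create_graph, so y's graph is kept alive as well.
    (dy_dx,) = torch.autograd.grad(y, x, create_graph=True)
    penalty = dy_dx.pow(2).sum()  # equals sum(9 * x**4)
    penalty.backward()  # x.grad becomes 36 * x**3
    # In a loop or notebook these names would keep the graph reachable
    # until they are reassigned; del drops the references right away.
    del y, dy_dx, penalty
    gc.collect()  # only matters for reference cycles; harmless otherwise
    if torch.cuda.is_available():
        torch.cuda.empty_cache()  # return cached, unused blocks to the driver
    return x, x.grad


if __name__ == "__main__":
    x = torch.randn(3, requires_grad=True)
    print(trace_graph((x.exp() * 2).sum()))
    print(backward_frees_graph())
    print(grad_penalty_step())
```

Note that torch.cuda.empty_cache() does not free tensors that are still referenced; it only hands back memory the caching allocator holds but no longer uses, so the del is what actually lets the graph go.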

From blog.paperspace.com

PyTorch Basics Understanding Autograd and Computation Graphs

From arcwiki.rs.gsu.edu

PyTorch Data Loader ARCTIC wiki

From wandb.ai

Monitor Your PyTorch Models With Five Extra Lines of Code on Weights

From blog.finxter.com

TensorFlow vs PyTorch — Who’s Ahead in 2023? Be on the Right Side of

From debuggercafe.com

Training from Scratch using PyTorch

From towardsdatascience.com

PyTorch Geometric Graph Embedding by Anuradha Wickramarachchi

From stackoverflow.com

onnx How to get the inference compute graph of the pytorch model

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From discuss.pytorch.org

How to print the computational graph of a Variable? PyTorch Forums

From tooploox.com

PyTorch vs. TensorFlow a detailed comparison Tooploox

From www.upwork.com

TensorFlow vs. PyTorch Which Should You Use? Upwork

From github.com

GitHub szagoruyko/pytorchviz A small package to create

From pytorch.org

PyTorch 2.0 PyTorch

From dongtienvietnam.com

Importing PyTorch In Jupyter Notebook: A Step-by-Step Guide

From www.youtube.com

PyTorch 4 Computational Graph YouTube

From github.com

GitHub davidbau/how-to-read-pytorch Quick, visual, principled

From docs.neptune.ai

PyTorch Documentation

From www.researchgate.net

Computational graph obtained from PyTorch for a linear-in-parameters

From www.youtube.com

PyTorch's Computational Graph + Torchviz PyTorch (2023) YouTube

From medium.com

PyTorch, Dynamic Computational Graphs and Modular Deep Learning by

From www.geeksforgeeks.org

Computational Graph in PyTorch

From blog.paperspace.com

PyTorch Basics Understanding Autograd and Computation Graphs

From clear.ml

TensorBoardX with PyTorch ClearML

From debuggercafe.com

Traffic Sign Detection using PyTorch Faster RCNN with Custom Backbone

From clear.ml

PyTorch MNIST ClearML

From velog.io

Trying Out PyTorch: Linear Regression with PyTorch

From aman.ai

Aman's AI Journal • Primers • PyTorch vs. TensorFlow

From www.reddit.com

NNViz can visualize any pytorch model r/pytorch

From www.youtube.com

04 PyTorch tutorial How do computational graphs and autograd in

From hashdork.com

PyTorch Graph Neural Network Tutorial HashDork

From www.vedereai.com

Optimizing Production PyTorch Models’ Performance with Graph

From www.exxactcorp.com

PyTorch Geometric vs Deep Graph Library Exxact Blog

From towardsdatascience.com

Introducing PyTorch Forecasting by Jan Beitner Towards Data Science

From github.re

GitHub Insights pytorch/pytorch

From learnopencv.com

Pre Trained Models for Image Classification PyTorch

From www.geeksforgeeks.org

Computational Graph in PyTorch