Huggingface Transformers Language Model. We will now train our language model using the run_language_modeling.py script from transformers (newly renamed from… 🤗 Transformers provides thousands of pretrained models to perform tasks on different modalities such as… This conceptual blog aims to cover transformers, one of the most powerful models ever created in natural language processing. Causal language modeling predicts the next token in a sequence of tokens, and the model can only attend to tokens on the left. After explaining their benefits compared to recurrent… Each trainer in trl is a light…
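The "can only attend to tokens on the left" constraint in causal language modeling can be illustrated with a small attention mask. The following is a minimal pure-Python sketch, not code from the transformers library; the helper names `causal_mask` and `masked_softmax` and the uniform scores are assumptions made for illustration only.

```python
import math


def causal_mask(n):
    """Return an n x n matrix; entry [i][j] is True if position i may attend to position j."""
    # A position may see itself and everything to its left, never the right.
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax over scores, giving masked-out positions zero weight."""
    weights = []
    for row_scores, row_mask in zip(scores, mask):
        visible = [s for s, m in zip(row_scores, row_mask) if m]
        top = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - top) if m else 0.0
                for s, m in zip(row_scores, row_mask)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical toy sequence; the scores are uniform so only the mask shapes the result.
tokens = ["the", "cat", "sat", "down"]
mask = causal_mask(len(tokens))
scores = [[0.0] * len(tokens) for _ in tokens]
weights = masked_softmax(scores, mask)

for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

Each row of `weights` spreads attention only over the current token and the tokens before it, so the prediction for the next token never depends on anything to its right.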

From zhuanlan.zhihu.com

Huggingface Transformers (1): Hugging Face Official Course, Zhihu

From www.linkedin.com

LoRA LowRank Adaptation Efficient for Large Language Models

From huggingface.co

Accelerating Hugging Face Transformers with AWS Inferentia2

From blog.futuresmart.ai

Hugging Face Transformers Model

From huggingface.co

Models Hugging Face

From www.youtube.com

HuggingFace Transformers AgentsLarge language models YouTube

From huggingface.co

Hugging Face Blog

From stackoverflow.com

python Assign transformer layers to BERT weights Stack Overflow

From www.exxactcorp.com

Getting Started with Hugging Face Transformers for NLP

From jalammar.github.io

Interfaces for Explaining Transformer Language Models Jay Alammar

From www.popular.pics

HuggingFace Transformers now extends to computer vision ・ popular.pics

From www.scaler.com

Transformer Visualization and Explainability Scaler Topics

From blog.genesiscloud.com

Introduction to transformer models and Hugging Face library Genesis

From learningdaily.dev

Text summarization with Hugging Face Transformers by The Educative

From fourthbrain.ai

HuggingFace Demo Building NLP Applications with Transformers FourthBrain

From www.philschmid.de

Efficient Large Language Model training with LoRA and Hugging Face

From dzone.com

Getting Started With Hugging Face Transformers DZone

From stackoverflow.com

huggingface transformers Initialize masked language model with

From discuss.huggingface.co

Decision Transformer a question about the tutorial 🤗Transformers

From www.toolify.ai

vitgpt2imagecaptioning huggingface.co api & nlpconnect vitgpt2

From codingnote.cc

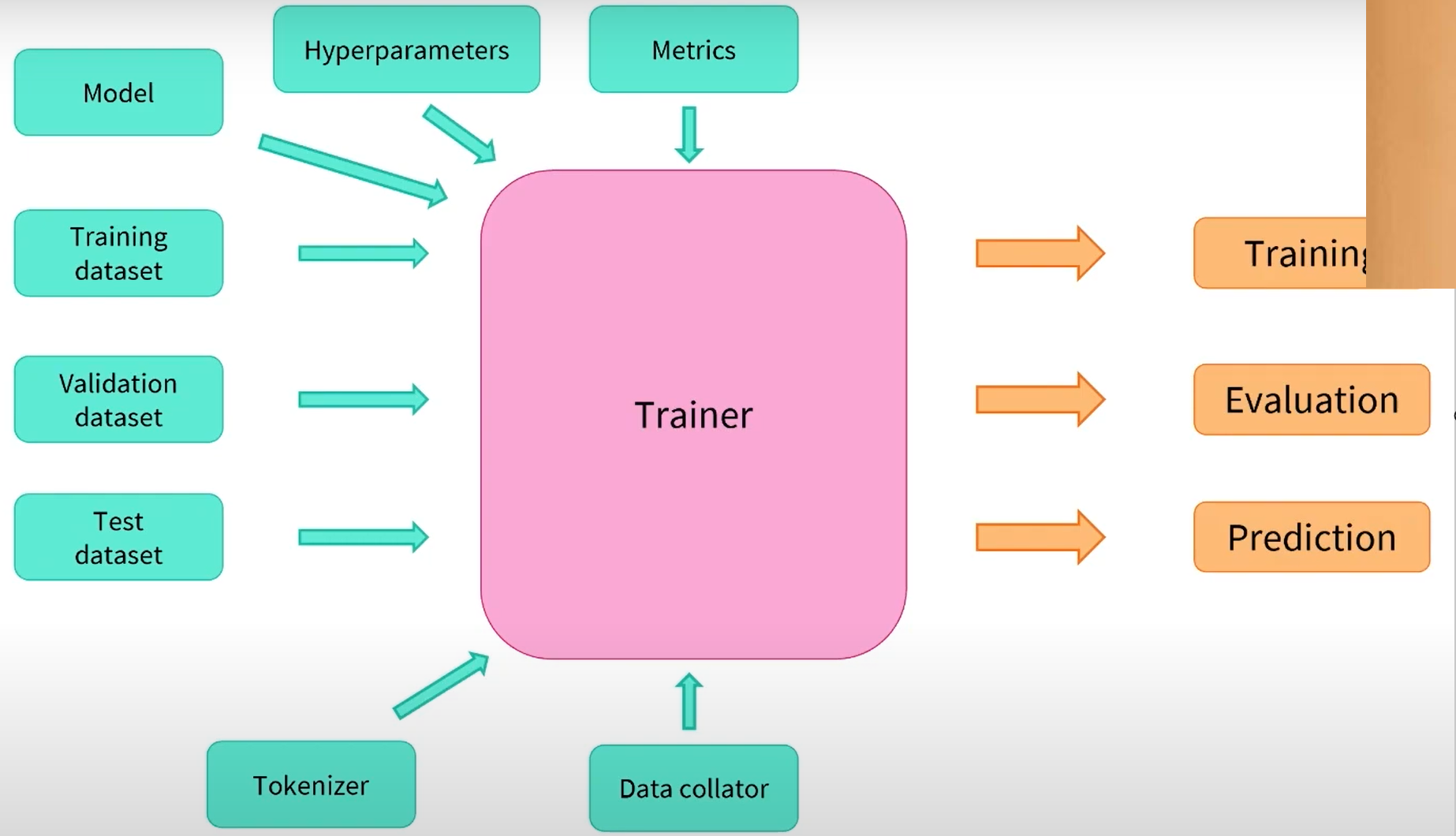

Hugging Face Transformers usage guide, part 2: the convenient Trainer, CodingNote.cc

From www.qwak.com

An introduction to Hugging Face transformers for NLP Qwak's Blog

From blog.csdn.net

Hugging Face Transformers model files and config file (huggingface config), CSDN Blog

From www.amazon.in

Buy Natural Language Processing With Transformers Building Language

From www.plugger.ai

Plugger AI vs. Huggingface Simplifying AI Model Access and Scalability

From www.philschmid.de

Hugging Face Transformers Examples

From www.youtube.com

Mastering HuggingFace Transformers StepByStep Guide to Model

From blog.csdn.net

NLP LLM (Pretraining + Transformer, code part

From analyticsindiamag.com

First Trillion Parameter Model on HuggingFace Mixture of Experts (MoE)

From cameronrwolfe.substack.com

T5 Text-to-Text Transformers (Part One)

From www.modb.pro

[Transformer in NLP] Chapter 1: The Hugging Face Ecosystem, Modb (墨天轮)

From joiywukii.blob.core.windows.net

Huggingface Transformers Roberta at Shayna Johnson blog

From mehndidesign.zohal.cc

How To Use Hugging Face Transformer Models In Matlab Matlab Programming

From zhuanlan.zhihu.com

Huggingface Transformers (1): Hugging Face Official Course, Zhihu

From github.com

huggingfacetransformers/utils/check_dummies.py at master · microsoft

From github.com

TPU out of memory (OOM) with flax train a language model GPT2 · Issue