models.quantization.mobilenet_v2

MobileNetV2 is a machine learning model that can classify images from the ImageNet dataset. It can also be used as a backbone in building more … The easiest way to achieve this might be to wrap the model into a larger model and add …

Quantized models only support inference and run on CPUs. GPU inference is not yet supported.

MobileNet_V2_QuantizedWeights(value): the model builder above accepts the …

from www.mdpi.com

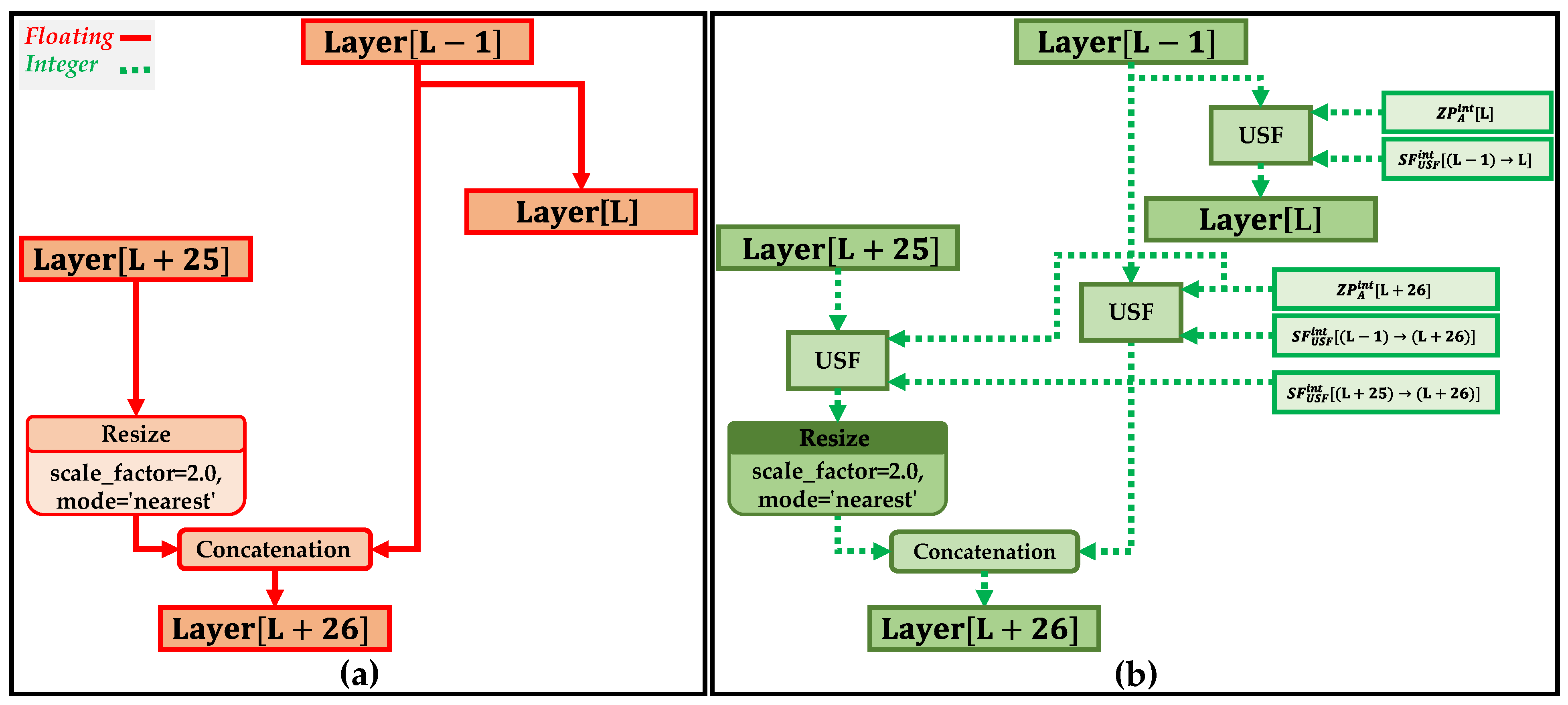

Electronics | Free Full-Text | Unified Scaling-Based Pure-Integer …
