Spark Get Partition Size

Spark's RDD API provides `getNumPartitions`, `partitions.length`, and `partitions.size`, all of which return the number of partitions in the current RDD. To use them on a DataFrame, first convert it to an RDD with `df.rdd`.

When reading a table, Spark by default reads blocks with a maximum size of 128 MB (though you can change this with `spark.sql.files.maxPartitionBytes`). Thus, the number of partitions depends on the size of the input. When reading a file in the DataFrame API using `spark.read` (e.g., `spark.read.csv()`, `spark.read.parquet()`, etc.), Spark uses the ... There are at least 3 factors to ...

Tuning the partition size is inevitably linked to tuning the number of partitions. Consider the size and type of data each partition holds to ensure a balanced distribution. Evaluate data distribution across partitions using tools like the Spark UI or the DataFrame API; we use Spark's UI to monitor task times and shuffle read/write times. This will give you insight into whether you need to repartition your data.
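The link between input size and partition count can be sketched in plain Python. This is a rough estimate, not Spark's actual code: it assumes a single splittable file, the default values of `spark.sql.files.maxPartitionBytes` (128 MB) and `spark.sql.files.openCostInBytes` (4 MB), and a split-size rule of the form min(maxPartitionBytes, max(openCostInBytes, bytesPerCore)). The exact rule varies between Spark versions, and the function names below are hypothetical helpers, not part of any Spark API.

```python
import math

MIB = 1024 * 1024


def max_split_bytes(total_bytes, num_files, default_parallelism,
                    max_partition_bytes=128 * MIB, open_cost_in_bytes=4 * MIB):
    """Approximate target split size for a file-based read (assumed rule)."""
    # Each file is padded with an "open cost" before dividing across cores.
    bytes_per_core = (total_bytes + num_files * open_cost_in_bytes) // default_parallelism
    return min(max_partition_bytes, max(open_cost_in_bytes, bytes_per_core))


def estimate_partitions(file_bytes, default_parallelism, **conf):
    """Estimate the partition count for one splittable file of file_bytes."""
    split = max_split_bytes(file_bytes, 1, default_parallelism, **conf)
    return math.ceil(file_bytes / split)


if __name__ == "__main__":
    # A 1 GiB file on 8 cores: the 128 MiB cap applies.
    print(estimate_partitions(1024 * MIB, default_parallelism=8))
    # A 100 MiB file on 8 cores: splits shrink so every core gets work.
    print(estimate_partitions(100 * MIB, default_parallelism=8))
```

On a real cluster, compare the estimate with `df.rdd.getNumPartitions()`; compressed non-splittable files and many small files will behave differently from this single-file sketch.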

From exokeufcv.blob.core.windows.net

Max Number Of Partitions In Spark at Manda Salazar blog

From cookinglove.com

Spark partition size limit

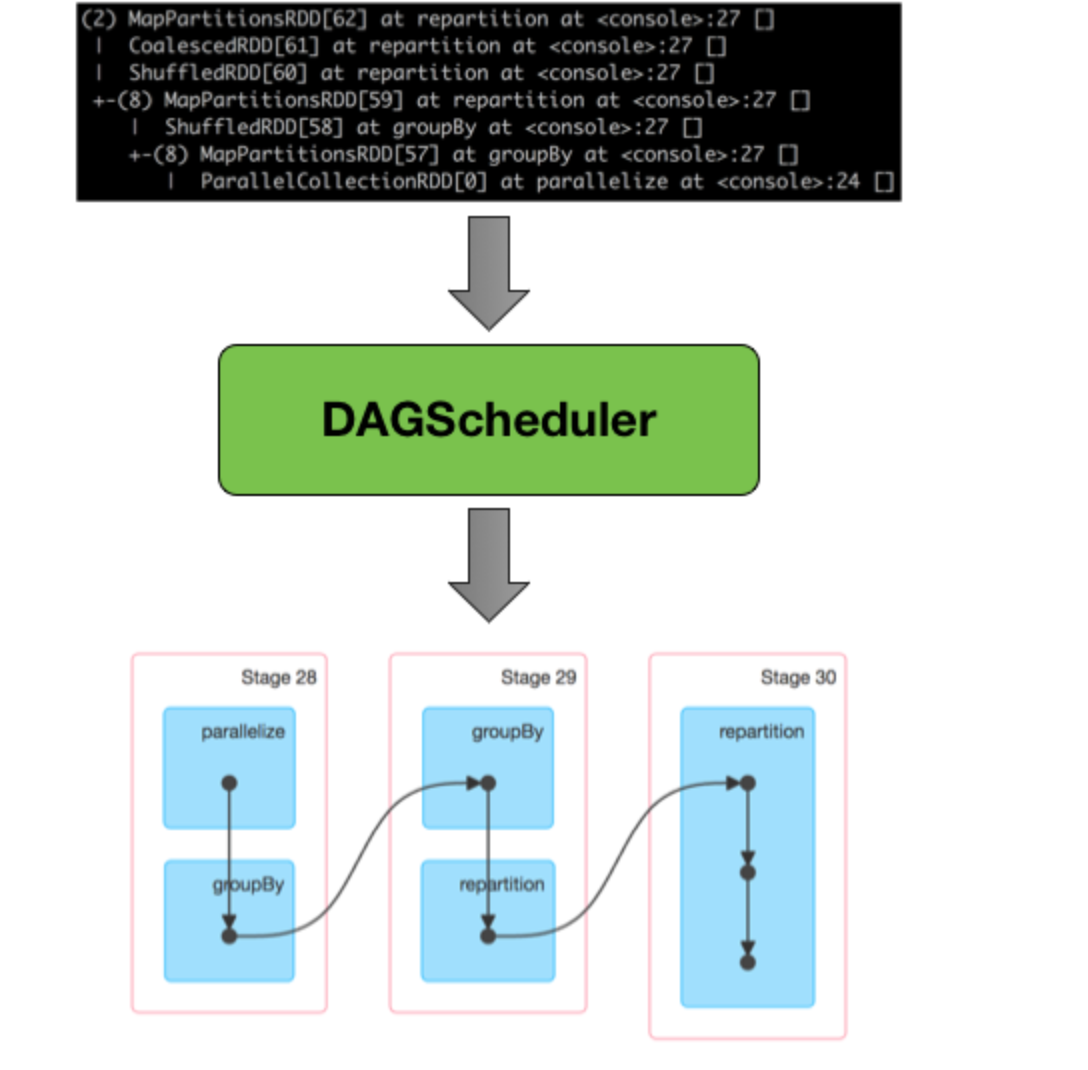

From zhuanlan.zhihu.com

Spark Partition Operators: Repartition() vs Coalesce() (Zhihu)

From medium.com

Managing Spark Partitions. How data is partitioned and when do you… by xuan zou Medium

From dzone.com

Dynamic Partition Pruning in Spark 3.0 DZone

From techvidvan.com

Apache Spark Partitioning and Spark Partition TechVidvan

From medium.com

Spark Partitioning Partition Understanding Medium

From stackoverflow.com

optimization spark.sql.files.maxPartitionBytes does not restrict partition size properly

From sparkbyexamples.com

Spark Partitioning & Partition Understanding Spark By {Examples}

From nebash.com

What's new in Apache Spark 3.0 dynamic partition pruning (2023)

From blogs.perficient.com

Spark Partition An Overview / Blogs / Perficient

From www.researchgate.net

Spark partition an LMDB Database Download Scientific Diagram

From www.youtube.com

How to partition and write DataFrame in Spark without deleting partitions with no new data

From medium.com

Dynamic Partition Pruning. Query performance optimization in Spark… by Amit Singh Rathore

From www.youtube.com

Spark Application Partition By in Spark Chapter 2 LearntoSpark YouTube

From github.com

GitHub AbsaOSS/sparkpartitionsizing Sizing partitions in Spark

From engineering.salesforce.com

How to Optimize Your Apache Spark Application with Partitions Salesforce Engineering Blog

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna

From sparkbyexamples.com

Get the Size of Each Spark Partition Spark By {Examples}

From www.gangofcoders.net

How does Spark partition(ing) work on files in HDFS? Gang of Coders

From cloud-fundis.co.za

Dynamically Calculating Spark Partitions at Runtime Cloud Fundis

From blog.csdn.net

Spark Basics: Partition (CSDN Blog)

From medium.com

Managing Partitions with Spark. If you ever wonder why everyone moved… by Irem Ertuerk Medium

From www.youtube.com

Why should we partition the data in spark? YouTube

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples}

From spaziocodice.com

Spark SQL Partitions and Sizes SpazioCodice

From leecy.me

Spark partitions A review