Hadoop YARN GPU. As one of the most widely used cluster scheduling frameworks, Hadoop YARN originally supported only CPU and memory. Hadoop YARN, the current dominant resource scheduling framework, has started to involve GPU scheduling in… As of now, only NVIDIA GPUs are supported by YARN. At present, YARN supports GPU/FPGA devices in a native, tightly coupled way, but it's difficult for a vendor to implement such a… The following files are recommended to be configured to enable GPU scheduling on YARN 3.2.1 and later… Spark doesn't handle YARN resources well as of Hadoop 3.0.0 (Spark is said to work with Hadoop 2.6+, but that implicitly means up to… In this paper, we present comprehensive techniques that can effectively support multiple deep learning applications in a…
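The text above mentions files to configure for GPU scheduling but does not list them. As a rough sketch only, the Python below renders Hadoop-style `<configuration>` XML for three settings. It assumes the file and property names described in the Apache Hadoop "Using GPU on YARN" guide, which this page does not confirm, so check them against the documentation for your Hadoop version. `GPU_SETTINGS` and `render_config` are hypothetical names used for illustration.

```python
import xml.etree.ElementTree as ET

# ASSUMPTION: file and property names follow the Apache Hadoop
# "Using GPU on YARN" guide; they are not taken from this page.
GPU_SETTINGS = {
    "resource-types.xml": {
        "yarn.resource-types": "yarn.io/gpu",
    },
    "yarn-site.xml": {
        "yarn.nodemanager.resource-plugins": "yarn.io/gpu",
    },
    "capacity-scheduler.xml": {
        "yarn.scheduler.capacity.resource-calculator":
            "org.apache.hadoop.yarn.util.resource.DominantResourceCalculator",
    },
}


def render_config(properties):
    """Render a dict of name/value pairs as Hadoop <configuration> XML."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    # encoding="unicode" returns a str without an XML declaration
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    for filename, properties in GPU_SETTINGS.items():
        print(f"# {filename}")
        print(render_config(properties))
```

Generating the fragments from one dictionary keeps the per-file settings in a single reviewable place. In practice these properties would be merged into a cluster's existing configuration files rather than overwriting them.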

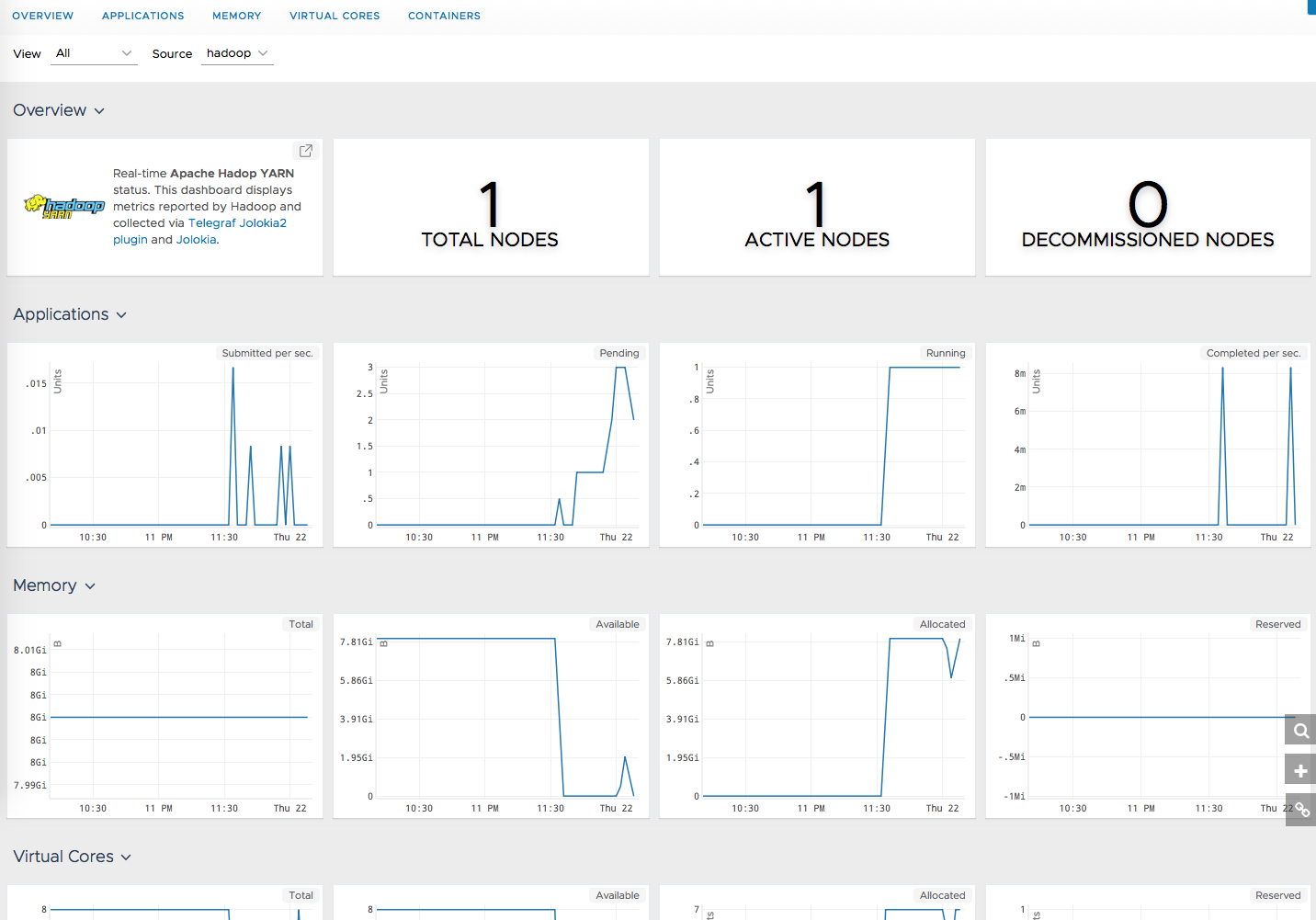

From docs.wavefront.com

Apache Hadoop YARN Integration VMware Aria Operations for

From yourserveradmin.com

Big Data Systems Hadoop YourServerAdmin

From stackoverflow.com

Yarn of hadoop 3.1.1 can't schedule GPU resource Stack Overflow

From cloud2data.com

Apache Hadoop And Yarn Features and functions of Hadoop YARN Cloud2Data

From sparkdatabox.com

Hadoop YARN Spark Databox

From techvidvan.com

Apache Hadoop Architecture HDFS, YARN & MapReduce TechVidvan

From www.geeksforgeeks.org

Hadoop Version 3.0 What's New?

From mindmajix.com

Hadoop Tutorial A Complete Guide for Hadoop Mindmajix

From www.oreilly.com

Apache Hadoop™ YARN Moving beyond MapReduce and Batch Processing with

From www.cloudduggu.com

CloudDuggu Apache Hadoop Yarn Tutorial

From stackoverflow.com

linux Hadoop Yarn Stuck at Running Job Ubuntu Stack Overflow

From www.adaltas.com

Apache Hadoop YARN 3.0 État de l'art Adaltas

From www.youtube.com

What is Hadoop Yarn? Hadoop Yarn Tutorial Hadoop Yarn Architecture

From data-flair.training

Install Hadoop 2 with YARN in PseudoDistributed Mode DataFlair

From www.youtube.com

End to End Project using Spark/Hadoop Code Walkthrough Architecture

From www.onlinelearningcenter.in

Hadoop YARN Architecture Features, Components Online Learning Center

From www.youtube.com

Hadoop Tutorial The YARN YouTube

From www.pinterest.com

YARN Hadoop Yarn, Novelty sign, Save

From www.adaltas.com

Deep learning on YARN running Tensorflow and friends on Hadoop cluster

From www.youtube.com

What is Hadoop Yarn? Hadoop Yarn Tutorial Hadoop Yarn Architecture

From www.adaltas.com

YARN and GPU Distribution for Machine Learning Adaltas

From data-flair.training

Hadoop Ecosystem and Their Components A Complete Tutorial DataFlair

From www.youtube.com

Hadoop YARN YouTube

From www.interviewbit.com

Hadoop Architecture Detailed Explanation InterviewBit

From mentorspool.com

Big Data Hadoop MentorsPool

From stacklima.com

Architecture Hadoop YARN StackLima

From sparkdatabox.com

Hadoop YARN Spark Databox

From www.edureka.co

Apache Hadoop YARN Introduction to YARN Architecture Edureka

From www.edureka.co

Apache Hadoop YARN Introduction to YARN Architecture Edureka

From itnext.io

The Implementation Principles of Hadoop YARN by Dwen ITNEXT

From had00b.blogspot.com

Setup Apache Hadoop on your machine (singlenode cluster) Had00b

From aws.amazon.com

Apache Hadoop YARN AWS Big Data Blog

From sstar1314.github.io

Hadoop ResourceManager Yarn SStar1314

From docs.wavefront.com

Apache Hadoop YARN Integration VMware Aria Operations for

From www.xenonstack.com

Apache Hadoop Benefits and Working with GPU

From slideplayer.com

Distributed SAR Image Change Detection with OpenCLEnabled Spark ppt