Torch Jit Parallel

In this blog post, we’ll provide an overview of torch.jit: what it is and, at a high level, how it works. We’ll then look at some code samples.

TorchScript is a way to create serializable and optimizable models from PyTorch code. Any TorchScript program can be saved from a Python process and loaded in a process where there is no Python dependency.
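A minimal sketch of the script, save, and load round trip, assuming a small hypothetical `TinyNet` model. The in-memory `io.BytesIO` buffer stands in for a file path (the `"tiny_net.pt"` name in the comment is also hypothetical), and `map_location="cpu"` shows how `torch.jit.load` can choose the device the stored tensors land on.

```python
import io

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical example model; any scriptable nn.Module would do."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # torch.jit.script keeps this data-dependent branch as real control flow.
        if x.sum() > 0:
            return torch.relu(self.linear(x))
        return self.linear(x)


model = TinyNet().eval()
scripted = torch.jit.script(model)  # compile to a ScriptModule

# Serialize into an in-memory buffer; a path such as "tiny_net.pt" also works.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)

# Load it back; map_location places the stored tensors on the given device.
loaded = torch.jit.load(buffer, map_location="cpu")

x = torch.randn(3, 4)
with torch.no_grad():
    same = torch.allclose(scripted(x), loaded(x))
print(same)  # True
```

A file saved this way can also be loaded from C++ with `torch::jit::load`, which is what allows deployment without a Python interpreter.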

From blog.csdn.net

Questions about parallelism come up often. “I am wondering if we can call forward() in parallel.” “Following the official tutorial, I thought that the following code would …”:

`nn.ModuleList = torch.jit.script(ParallelModuleList([nn.RNN(input_size=self.feature_count, hidden_size=self.hidden_size) for i in …`

“But are there any gotchas using …?” One reported limitation is that it seems you cannot jit a DataParallel object; a suggested alternative is calling DataParallel on the jitted module.

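On the DataParallel gotcha, a sketch of one common workaround, assuming a hypothetical `TinyNet`: keep `nn.DataParallel` in eager mode, and script the plain model it wraps through its `.module` attribute instead of scripting the wrapper, which reportedly fails. The other suggested route, calling DataParallel on the jitted module, is not exercised here.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical model used only for illustration."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x)


device = "cuda" if torch.cuda.is_available() else "cpu"

# Train (or run) with the eager DataParallel wrapper as usual.
# Without visible GPUs, DataParallel simply calls the wrapped module.
dp = nn.DataParallel(TinyNet().to(device))

# For export, script the module stored in `.module`, not the wrapper itself.
scripted = torch.jit.script(dp.module)

x = torch.randn(3, 4, device=device)
with torch.no_grad():
    match = torch.allclose(dp(x), scripted(x))
print(match)  # True
```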
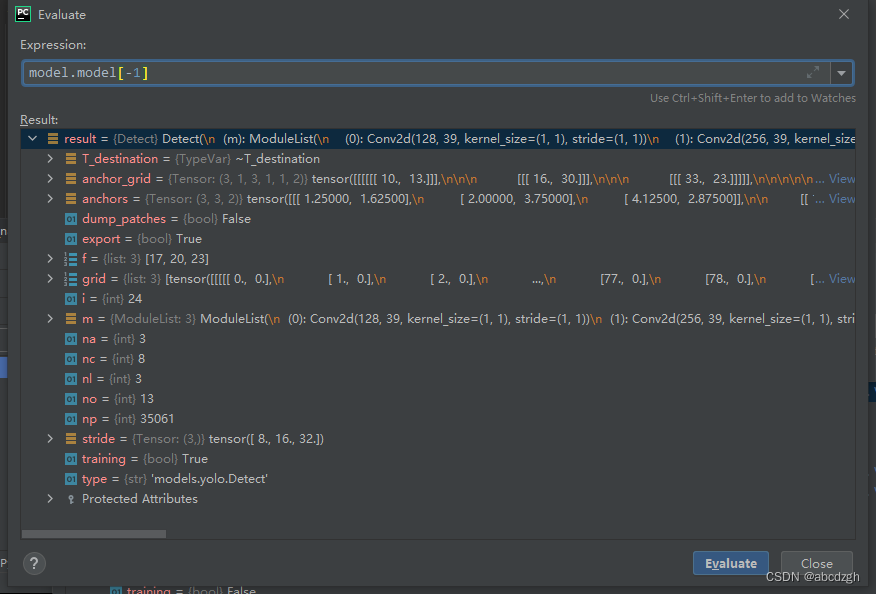

About problems with torch.jit.trace in yolov8 (CSDN blog)

In TorchScript inference, each inference thread invokes a JIT interpreter that executes the ops of a model inline, one by one. A model can use the `fork` TorchScript primitive to launch an asynchronous task; forking several operations at once lets them execute in parallel. The two important APIs for dynamic parallelism are `torch.jit.fork(fn, *args, **kwargs)`, which launches `fn` as an asynchronous task and immediately returns a `torch.jit.Future`, and `torch.jit.wait(future)`, which blocks until that task completes and returns its result. See also: a parallel ODE solver for PyTorch.

From github.com

torch.jit.fork examples are empty · Issue 50082 · pytorch/pytorch · GitHub

From github.com

torch.jit.load support specifying a target device. · Issue 775

From github.com

[JIT] torch.jit.script should not error out with "No forward method was

From github.com

torch.jit.trace() does not support variant length input? · Issue 15391

From github.com

torch.jit._trace.TracingCheckError Tracing failed sanity checks! ERROR

From blog.csdn.net

TorchScript (converting dynamic graphs into static graphs) (model deployment) (jit) (torch.jit.trace) (torch.jit.script

From blog.csdn.net

The difference between torch.jit.trace and torch.jit.script (CSDN blog)
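Since tracing versus scripting is a recurring theme in these posts, a minimal sketch of the key practical difference, using a hypothetical `double_or_negate` function: tracing records only the operations executed for the example input, while scripting compiles the Python source and keeps data-dependent control flow.

```python
import torch


def double_or_negate(x: torch.Tensor) -> torch.Tensor:
    # Data-dependent branch: which path runs depends on the input values.
    if x.sum() > 0:
        return x * 2
    return -x


positive = torch.tensor([1.0, 2.0])
negative = torch.tensor([-1.0, -2.0])

# Tracing with `positive` bakes the `x * 2` branch into the graph
# (PyTorch emits a TracerWarning about converting a tensor to a Python bool).
traced = torch.jit.trace(double_or_negate, positive)

# Scripting compiles the source, so the `if` survives as control flow.
scripted = torch.jit.script(double_or_negate)

print(traced(negative))    # tensor([-2., -4.])  (branch fixed at trace time)
print(scripted(negative))  # tensor([1., 2.])
```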

From discuss.pytorch.org

How to ensure the correctness of the torch script jit PyTorch Forums

From github.com

'torch::jit::script::ErrorReport' from 'torch::jit::load('modelpath

From github.com

How to train a torch::jit::script::Module? · Issue 28478 · pytorch

From github.com

Torch Jit compatibility for torch geometric and dependencies? · Issue

From github.com

In torch::jit::script::Module module = torch::jit::load("xxx.pt"), How

From juejin.cn

TorchScript Series Explained (Part 2): An Analysis of the Torch jit tracer Implementation · Juejin

From fyodwdatp.blob.core.windows.net

Torch.jit.script Vs Torch.jit.trace at Gerald Levy blog

From www.openteams.com

torch: Just-in-time compilation (JIT) for R-less model deployment

From github.com

GitHub ShaharSarShalom/torchjitscriptexample Demonstrate how to

From github.com

`torch.jit.trace` memory usage increase although forward is constant

From github.com

how to use torch.jit.script with torch.nn.DataParallel · Issue 67438

From github.com

torch.jit.script unable to fuse elementwise operations · Issue 76799

From github.com

`torch.jit.load` fails when function parameters use non-ASCII

From github.com

Performance issue with torch.jit.trace(), slow prediction in C++ (CPU

From www.educba.com

PyTorch JIT Script and Modules of PyTorch JIT with Example

From github.com

use torch.jit.trace export pytorch 2 torchscript fail. · Issue 131

From github.com

undefined reference to torch::jit::load · Issue 39718 · pytorch

From giodqlpzb.blob.core.windows.net

Torch.jit.script Cuda at Lynne Lockhart blog

From github.com

[torch.jit.trace] torch.jit.trace fixed batch size CNN · Issue 38472