Databricks Number Of Partitions . To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. It takes a partition number,. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. I've heard from other engineers. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Repartition to the specified number of partitions using the specified partitioning expressions.

from giodjzcjv.blob.core.windows.net

Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. Repartition to the specified number of partitions using the specified partitioning expressions. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. It takes a partition number,. The 200 partitions might be too large if a user is working with. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? I've heard from other engineers. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25.

Databricks Partition Performance at David Hoard blog

Databricks Number Of Partitions Spark by default uses 200 partitions when doing transformations. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. I've heard from other engineers. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. It takes a partition number,. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Repartition to the specified number of partitions using the specified partitioning expressions. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. How does one calculate the 'optimal' number of partitions based on the size of the dataframe?

From morioh.com

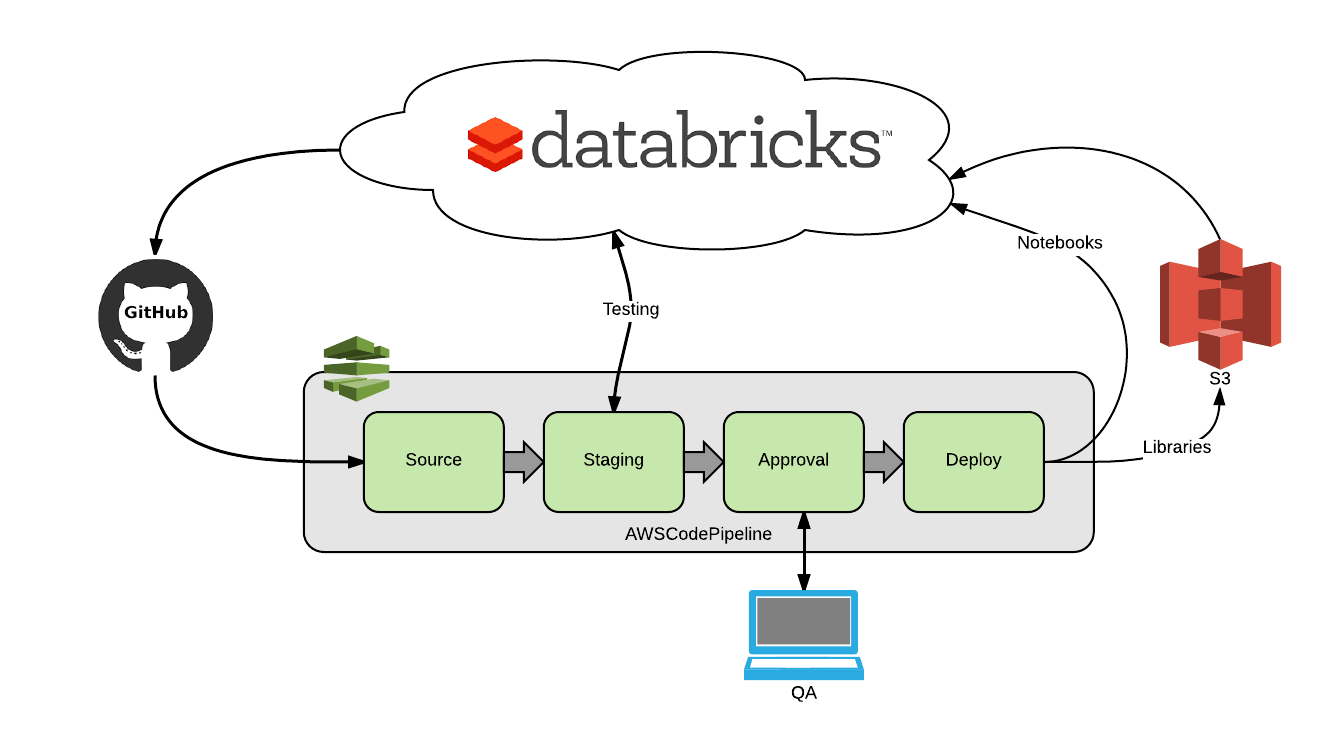

Databricks Deployment via Jenkins Databricks Number Of Partitions Spark by default uses 200 partitions when doing transformations. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. I've heard from other engineers. The 200 partitions might be too large if a user is working with. To utiltize the cores available properly especially in the. Databricks Number Of Partitions.

From giodjzcjv.blob.core.windows.net

Databricks Partition Performance at David Hoard blog Databricks Number Of Partitions Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. It takes a partition number,. I've heard from other engineers. The 200 partitions might be too large if a user is working with. How does one. Databricks Number Of Partitions.

From www.vrogue.co

Databricks Cluster Pricing Aws Printable Templates vrogue.co Databricks Number Of Partitions To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. I've heard from other engineers. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. It takes a partition number,. The 200 partitions might be too large if a user is working with.. Databricks Number Of Partitions.

From www.vrogue.co

Databricks Interview Questions Guide To Databricks Interview Questions Databricks Number Of Partitions To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Repartition to the specified number of partitions using the specified partitioning expressions. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. At initial run, it. Databricks Number Of Partitions.

From www.techmobius.com

Now build reliable data and ML workflows with Databricks!TechMobius Databricks Number Of Partitions Spark by default uses 200 partitions when doing transformations. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. I've. Databricks Number Of Partitions.

From www.semanticscholar.org

Table 1 from Enumeration of the Partitions of an Integer into Parts of Databricks Number Of Partitions Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. The 200 partitions might be too large if a user is working with. I've heard from other engineers. It takes a partition number,. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you. Databricks Number Of Partitions.

From www.devopsschool.com

What is Databricks and use cases of Databricks? Databricks Number Of Partitions At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. I've heard from other engineers. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. To utiltize the cores available properly especially in the. Databricks Number Of Partitions.

From www.vrogue.co

What Is Azure Databricks Data Science Data Visualization Tools Vrogue Databricks Number Of Partitions How does one calculate the 'optimal' number of partitions based on the size of the dataframe? I've heard from other engineers. The 200 partitions might be too large if a user is working with. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. Repartition to the specified number of partitions using. Databricks Number Of Partitions.

From towardsdatascience.com

Getting Started with Databricks. A Beginners Guide to Databricks by Databricks Number Of Partitions Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. It takes a partition number,. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. This article provides an overview. Databricks Number Of Partitions.

From templates.udlvirtual.edu.pe

What Is Databricks Unit Printable Templates Databricks Number Of Partitions At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. Repartition to the specified number of partitions using the specified partitioning expressions. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. Learn how. Databricks Number Of Partitions.

From docs.databricks.com

Partition discovery for external tables Databricks on AWS Databricks Number Of Partitions Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? The 200 partitions might be too large if a user is working with. It takes a partition number,. I've heard from other engineers. At initial run, it generates around 25 partitions within the delta. Databricks Number Of Partitions.

From fire-insights.readthedocs.io

Browsing Databricks Tables — Sparkflows 0.0.1 documentation Databricks Number Of Partitions It takes a partition number,. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. Repartition to the specified number of partitions using the specified partitioning expressions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. To utiltize the cores available properly especially in the. Databricks Number Of Partitions.

From www.altexsoft.com

Databricks Lakehouse Platform Pros and Cons AltexSoft Databricks Number Of Partitions I've heard from other engineers. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Learn how to use the show partitions syntax of the sql language in databricks sql. Databricks Number Of Partitions.

From huggingface.co

Databricks ️ Hugging Face up to 40 faster training and tuning of Databricks Number Of Partitions Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. It takes a partition number,. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. The 200 partitions might be too. Databricks Number Of Partitions.

From docs.acceldata.io

Databricks Acceldata Data Observability Cloud Databricks Number Of Partitions Repartition to the specified number of partitions using the specified partitioning expressions. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. It takes a partition number,. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Learn how to use the show partitions syntax of the sql language. Databricks Number Of Partitions.

From blog.fabric.microsoft.com

Using Azure Databricks with Microsoft Fabric and OneLake Блоґ Databricks Number Of Partitions Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. The 200 partitions might be too large if a user is working with. Repartition to the specified number of partitions using the specified partitioning expressions. Spark by default uses 200 partitions when doing transformations. It takes a partition number,. This article provides an overview of how you can partition tables. Databricks Number Of Partitions.

From www.invivoo.com

Partenariat Databricks INVIVOO Databricks Number Of Partitions How does one calculate the 'optimal' number of partitions based on the size of the dataframe? Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. It takes a partition number,. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. At initial run, it generates around 25 partitions within the delta. Databricks Number Of Partitions.

From www.redpill-linpro.com

Databricks Redpill Linpro Databricks Number Of Partitions It takes a partition number,. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Repartition to the specified number of partitions using the specified partitioning expressions. Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you. Databricks Number Of Partitions.

From www.projectpro.io

DataFrames number of partitions in spark scala in Databricks Databricks Number Of Partitions Spark by default uses 200 partitions when doing transformations. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Learn how to use the show partitions syntax of the sql. Databricks Number Of Partitions.

From www.databricks.com

Getting Started with Databricks Data Science and Engineering Workspace Databricks Number Of Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. It takes a partition number,. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. To utiltize the cores available properly. Databricks Number Of Partitions.

From docs.databricks.com

July 2020 Databricks on AWS Databricks Number Of Partitions To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. It takes a partition number,. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. I've heard from other engineers. This article provides an overview of how you can partition. Databricks Number Of Partitions.

From www.youtube.com

How To Fix The Selected Disk Already Contains the Maximum Number of Databricks Number Of Partitions Spark by default uses 200 partitions when doing transformations. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? It takes a partition number,. Repartition to the specified number of partitions using the specified partitioning expressions. This article provides an overview of how you can partition tables on databricks and specific recommendations around when. Databricks Number Of Partitions.

From findy-tools.io

Databricksとは?機能や特徴・製品の概要まとめ Databricks Number Of Partitions I've heard from other engineers. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Repartition to the specified number of partitions using the specified partitioning expressions. The 200 partitions might be too large if a user is working with. At initial run, it generates around 25 partitions within the delta (no issue as. Databricks Number Of Partitions.

From qiita.com

Apache AirflowのマネージドワークフローによるAWS Databricksのワークロードのオーケストレーション airflow Databricks Number Of Partitions It takes a partition number,. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Spark by default uses 200 partitions when doing transformations. How does one calculate the 'optimal' number of partitions based on the. Databricks Number Of Partitions.

From fabioms.com.br

How to get and publish data from Azure Databricks to Power BI Databricks Number Of Partitions Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. I've heard from other engineers. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. This article provides an overview of how you can partition tables. Databricks Number Of Partitions.

From azurelib.com

How to number records in PySpark Azure Databricks? Databricks Number Of Partitions Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. The 200 partitions might be too large if a user is working with. Repartition to the specified number of partitions using the specified partitioning expressions. Spark by default uses 200 partitions when doing transformations. At initial run, it generates around 25 partitions. Databricks Number Of Partitions.

From www.inovex.de

databricks inovex GmbH Databricks Number Of Partitions Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. I've heard from other engineers. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? The 200 partitions might be too large if a user is. Databricks Number Of Partitions.

From www.youtube.com

databricks shortcuts hide or show line numbers YouTube Databricks Number Of Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. It takes a partition number,. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions.. Databricks Number Of Partitions.

From bryteflow.com

Connect Oracle Database to Databricks, CDC in Realtime BryteFlow Databricks Number Of Partitions I've heard from other engineers. It takes a partition number,. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. At initial run, it generates around 25 partitions within the delta (no issue as it's possible the key resulted in data falling into 25. Spark by default uses 200. Databricks Number Of Partitions.

From www.youtube.com

100. Databricks Pyspark Spark Architecture Internals of Partition Databricks Number Of Partitions To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Repartition to the specified number of partitions using the specified partitioning expressions. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. How does one calculate the 'optimal' number of partitions based on the size of. Databricks Number Of Partitions.

From thewindowsupdate.com

Leverage Azure Databricks jobs orchestration from Azure Data Factory Databricks Number Of Partitions How does one calculate the 'optimal' number of partitions based on the size of the dataframe? I've heard from other engineers. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. It takes a partition number,. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. Repartition to the specified number of. Databricks Number Of Partitions.

From www.vrogue.co

Building Robust Production Data Pipelines With Databricks Delta Youtube Databricks Number Of Partitions I've heard from other engineers. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. Ideal number of partitions = (100*1028)/128 = 803.25 ~ 804. At initial run, it generates around 25 partitions within the delta. Databricks Number Of Partitions.

From www.youtube.com

Partitions in Data bricks YouTube Databricks Number Of Partitions I've heard from other engineers. Learn how to use the show partitions syntax of the sql language in databricks sql and databricks runtime. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Repartition to the specified number of partitions using the specified partitioning expressions. Ideal number of partitions. Databricks Number Of Partitions.

From k21academy.com

Azure Databricks & Synapse Analytics Unveiling the Use Cases Databricks Number Of Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. It takes a partition number,. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with. To utiltize the cores available properly especially in the last iteration, the. Databricks Number Of Partitions.

From www.confluent.io

Databricks DE Databricks Number Of Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. To utiltize the cores available properly especially in the last iteration, the number of shuffle partitions. It takes a partition number,. How does one calculate the 'optimal' number of partitions based on the size of the dataframe? At initial. Databricks Number Of Partitions.