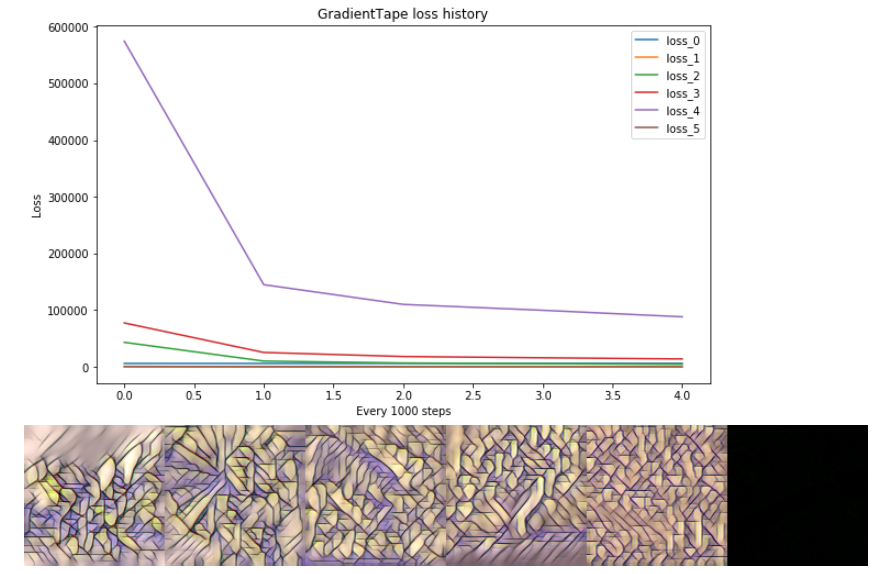

GradientTape Multiple Losses

When we optimize Keras models, we pass … `tf.GradientTape()` records the operations performed on the trainable weights (variables) inside its context so that they can be differentiated automatically. Writing the training loop yourself is useful when you need to utilize a custom loss function, handle multiple inputs and/or outputs with different spatial dimensions, or access gradients for specific layers and update them in a unique manner. That's not to say you couldn't create custom training loops with Keras and TensorFlow 1.x.

If at any point we want to use multiple variables in our calculations, all we need to do is give `tape.gradient` a list or tuple of those variables.

By default a tape supports a single `gradient()` call, after which its resources are released. To take gradients of multiple losses you therefore need either multiple tapes or, to compute multiple gradients over the same computation, a persistent gradient tape created with `persistent=True`. This allows multiple calls to the `gradient()` method.

We can get the training losses by calling the `training_one_epoch` function in each epoch, and the validation loss by calling the `validation_loss` function. So later we can get the …
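The persistent-tape and list-of-variables points above can be sketched as follows. This is a minimal illustration assuming TensorFlow 2.x in eager mode; the variables `w` and `b`, the input `x`, and both losses are made-up values chosen so the gradients are easy to check by hand, not anything taken from the linked pages.

```python
import tensorflow as tf

# Two trainable variables standing in for model weights (illustrative values).
w = tf.Variable(3.0)
b = tf.Variable(1.0)
x = tf.constant(2.0)

# persistent=True keeps the recorded operations alive, so gradient() can be
# called once per loss instead of only once in total.
with tf.GradientTape(persistent=True) as tape:
    y = w * x + b          # 3 * 2 + 1 = 7
    loss_a = tf.square(y)  # first loss: y^2
    loss_b = 3.0 * y       # second loss: 3y

# Passing a list of variables returns a list of gradients in the same order.
grads_a = tape.gradient(loss_a, [w, b])  # [2*y*x, 2*y] = [28.0, 14.0]
grads_b = tape.gradient(loss_b, [w, b])  # [3*x, 3]     = [6.0, 3.0]

# A persistent tape holds its resources until it is garbage collected,
# so drop the reference once the gradients have been taken.
del tape

print([g.numpy() for g in grads_a], [g.numpy() for g in grads_b])
```

Without `persistent=True`, the second `tape.gradient` call would raise a `RuntimeError`; the alternative is to open one tape per loss.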

From stackoverflow.com

python Why does my model work with `tf.GradientTape()` but fail when

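The page names `training_one_epoch` and `validation_loss` but does not show them. The sketch below is one hypothetical way such functions could look in a custom training loop; the synthetic data, the one-layer model, the optimizer settings, and the function bodies are all assumptions made for illustration.

```python
import numpy as np
import tensorflow as tf

# Synthetic regression data (assumed for illustration): y = 2x + 1.
rng = np.random.default_rng(0)
x_all = rng.uniform(-1.0, 1.0, size=(64, 1)).astype("float32")
y_all = 2.0 * x_all + 1.0
x_train, y_train = x_all[:48], y_all[:48]
x_val, y_val = x_all[48:], y_all[48:]

model = tf.keras.Sequential([tf.keras.Input(shape=(1,)), tf.keras.layers.Dense(1)])
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)
loss_fn = tf.keras.losses.MeanSquaredError()

def training_one_epoch(batch_size=16):
    """Hypothetical body: one pass over the training data, returning batch losses."""
    losses = []
    for start in range(0, len(x_train), batch_size):
        xb = x_train[start:start + batch_size]
        yb = y_train[start:start + batch_size]
        # Record the forward pass so the loss can be differentiated.
        with tf.GradientTape() as tape:
            preds = model(xb, training=True)
            loss = loss_fn(yb, preds)
        # A list of variables in, a matching list of gradients out.
        grads = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
        losses.append(float(loss))
    return losses

def validation_loss():
    """Hypothetical body: loss on held-out data, with no tape and no update."""
    preds = model(x_val, training=False)
    return float(loss_fn(y_val, preds))

# Collect both losses per epoch so they can be inspected or plotted later.
history = {"train": [], "val": []}
for epoch in range(20):
    history["train"].append(float(np.mean(training_one_epoch())))
    history["val"].append(validation_loss())

print(history["train"][-1], history["val"][-1])
```

Keeping the validation pass outside any tape avoids recording operations that will never be differentiated.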

From whatishappeningnow.org

Cool Tensorflow Gradienttape Tutorial 2022 » What'Up Now

From discuss.pytorch.org

Multiple Networks Multiple Losses autograd PyTorch Forums

From blog.shikoan.com

Sample for using multiple GradientTapes in TensorFlow 2.0, Shikoan's ML Blog

From pyimagesearch.com

Using TensorFlow and GradientTape to train a Keras model PyImageSearch

From www.teledynelecroy.com

Serial Data Eye Diagram and Jitter Analysis Teledyne LeCroy

From www.delftstack.com

TensorFlow Gradient Tape Delft Stack

From www.semanticscholar.org

Figure 5 from A Crack Segmentation Model Combining Morphological

From mengyeren.com

Scaling Forward Gradient With Local Losses

From klaelccgu.blob.core.windows.net

Tensorflow Gradienttape Optimizer at John Madden blog

From tech.nkhn37.net

[TensorFlow] How to compute gradients with GradientTape automatic differentiation | Python Tech

From pyimagesearch.com

Keras Multiple outputs and multiple losses PyImageSearch

From debuggercafe.com

Basics of TensorFlow GradientTape DebuggerCafe

From www.cnblogs.com

Using tf.GradientTape(), kpwong, cnblogs (博客园)

From www.youtube.com

Automatic Differentiation for ABSOLUTE beginners "with tf.GradientTape

From debuggercafe.com

Linear Regression using TensorFlow GradientTape

From pyimagesearch.com

How to Use 'tf.GradientTape' PyImageSearch

From blog.csdn.net

TensorFlow 2.0 deep learning (part 1)_with tf.gradienttape() as tape

From pinkwink.kr

Differentiation using TensorFlow's GradientTape

From www.mdpi.com

Information Free FullText Improving Performance in Person

From www.youtube.com

EP05. GradientTape TensorFlow Tutorial YouTube

From rmoklesur.medium.com

Gradient Descent with TensorflowGradientTape() by Moklesur Rahman

From medium.com

How to Train a CNN Using tf.GradientTape by BjørnJostein Singstad

From www.codingninjas.com

Finding Gradient in Tensorflow using tf.GradientTape Coding Ninjas

From github.com

GitHub somjit101/DCGANGradientTape A study of the use of the

From github.com

Difference in training accuracy and loss using gradientTape vs model

From www.youtube.com

Episode 67. Tensorflow 2 GradientTape YouTube

From www.researchgate.net

The average transport AC losses per layer of multiplelayer Roebel

From github.com

GitHub XBCoder128/TF_GradientTape An explanation of the TensorFlow gradient tape, with an accompanying NumPy implementation of a fully connected neural

From www.reddit.com

[Tutorial] Basics of TensorFlow GradientTape r/deeplearning
