What Is a Good KL Divergence?

Put simply, the Kullback-Leibler (KL) divergence between two probability distributions measures how different the two distributions are. Today we will discuss KL divergence, a very popular measure used in data science to quantify that difference. Think of it as a mathematical ruler that tells us the “distance” between two probability distributions. The quotation marks matter: unlike a true distance, KL divergence is not symmetric, so the divergence of P from Q generally differs from the divergence of Q from P.

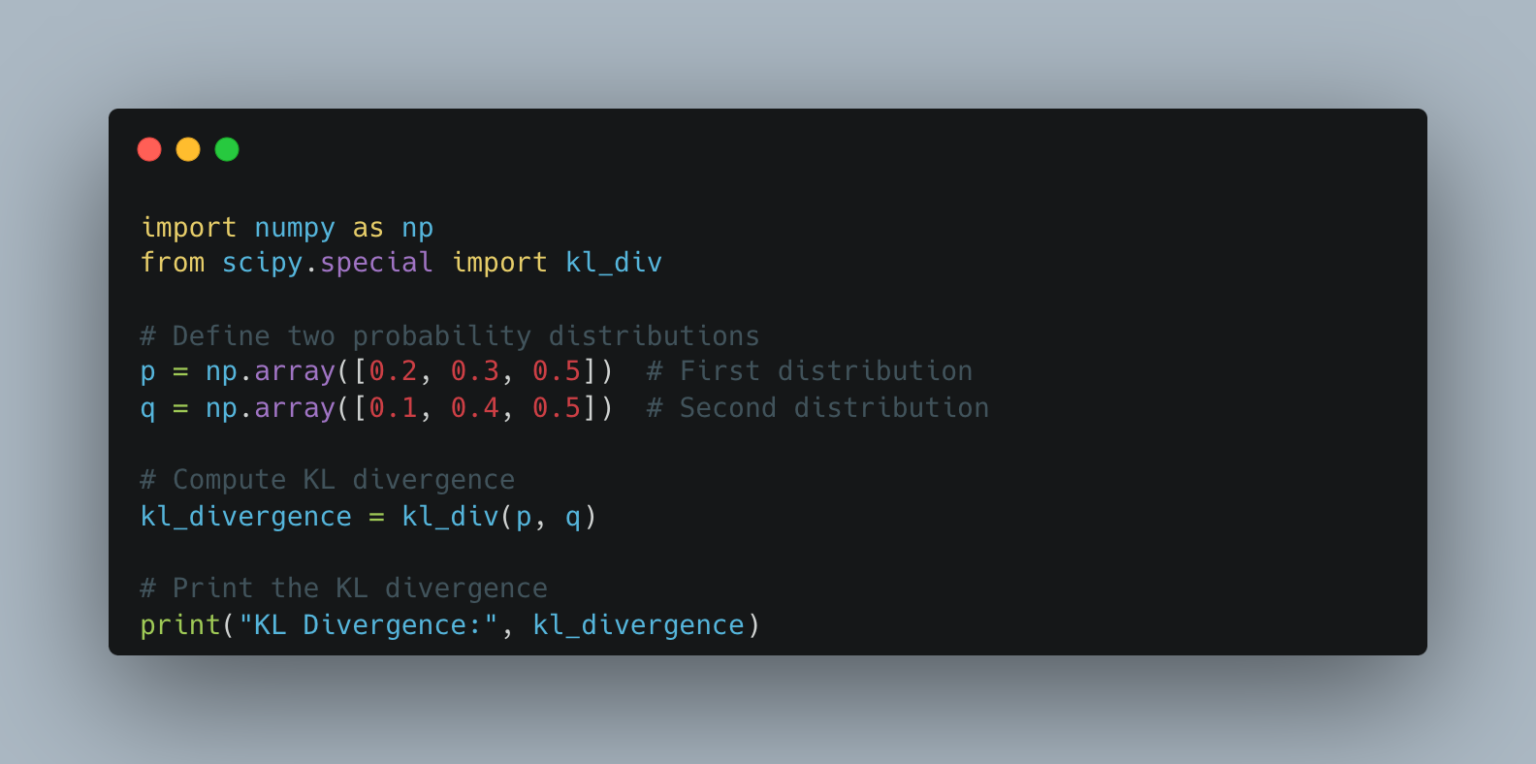

From www.pythonclear.com (What is Python KL Divergence? Explained in 2 Simple examples)

KL divergence is formally defined as follows. For discrete distributions P and Q over the same set of outcomes,

$$D_{\mathrm{KL}}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}.$$

For continuous distributions with densities p and q, the sum becomes the integral $\int p(x) \log \frac{p(x)}{q(x)} \, dx$. Terms where P(x) = 0 contribute zero. The divergence is infinite if Q(x) = 0 for some x where P(x) > 0. With the natural logarithm the result is measured in nats; with log base 2 it is measured in bits.
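Computing $D_{\mathrm{KL}}(P \,\|\, Q) = \sum_x P(x) \log \frac{P(x)}{Q(x)}$ for discrete distributions takes only a few lines. The following is a minimal sketch using only the standard library. It assumes both distributions are given as equal-length lists of probabilities over the same outcomes that already sum to 1. The function name `kl_divergence` is our own and is not a library API. The example also swaps the arguments to show that the result changes.

```python
import math


def kl_divergence(p, q, base=math.e):
    """Return D_KL(P || Q) for discrete distributions given as probability lists.

    Hypothetical helper (not a library API). Assumes p and q are valid,
    equal-length probability vectors. Natural log by default (nats);
    pass base=2 for bits.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for p_x, q_x in zip(p, q):
        if p_x == 0:
            continue  # 0 * log(0 / q) is taken to be 0
        if q_x == 0:
            return math.inf  # P puts mass where Q puts none
        total += p_x * math.log(p_x / q_x)
    return total / math.log(base)


p = [0.5, 0.5]
q = [0.9, 0.1]
print(round(kl_divergence(p, q), 4))  # 0.5108
print(round(kl_divergence(q, p), 4))  # 0.3681 (not symmetric)
print(kl_divergence(p, p))            # 0.0 (identical distributions)
```

If you already depend on SciPy, `scipy.stats.entropy(pk, qk)` computes the same quantity. The pure-Python version simply makes each term of the sum visible.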

In practice, KL divergence is used to measure, and minimize, how much information is lost when one distribution is used to approximate another. Suppose P is the true distribution and Q is the approximation. Then $D_{\mathrm{KL}}(P \,\|\, Q)$ is the expected number of extra bits (or nats) per sample needed to encode data from P with a code optimized for Q. This also answers the question in the title. KL divergence is never negative and equals zero only when the two distributions are identical, so lower is better. There is, however, no universal threshold for a “good” value, because the number depends on the log base, the number of outcomes and the application. It is most useful for comparing candidate approximations of the same target distribution.
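To make the “information loss” reading concrete, the sketch below compares two candidate approximations of one target distribution. It reports each KL divergence in bits, computed as cross-entropy minus entropy. The target and candidate probabilities are made-up illustrative numbers, and the helper names (`entropy_bits`, `cross_entropy_bits`, `kl_bits`) are hypothetical.

```python
import math


def entropy_bits(p):
    """Shannon entropy H(P) in bits (hypothetical helper)."""
    return -sum(p_x * math.log2(p_x) for p_x in p if p_x > 0)


def cross_entropy_bits(p, q):
    """Cross-entropy H(P, Q) in bits: average code length when samples from P
    are encoded with a code optimized for Q (hypothetical helper)."""
    total = 0.0
    for p_x, q_x in zip(p, q):
        if p_x == 0:
            continue  # outcomes P never produces cost nothing
        if q_x == 0:
            return math.inf  # Q assigns zero probability to a possible outcome
        total -= p_x * math.log2(q_x)
    return total


def kl_bits(p, q):
    """D_KL(P || Q) in bits as H(P, Q) - H(P): expected extra bits per sample."""
    return cross_entropy_bits(p, q) - entropy_bits(p)


# Illustrative target distribution and two candidate approximations (made-up numbers).
target = [0.5, 0.25, 0.125, 0.125]
candidates = {
    "uniform": [0.25, 0.25, 0.25, 0.25],
    "skewed": [0.4, 0.3, 0.2, 0.1],
}

for name, q in candidates.items():
    print(f"{name}: {kl_bits(target, q):.4f} extra bits per sample")

# The candidate with the smallest divergence loses the least information.
best = min(candidates, key=lambda name: kl_bits(target, candidates[name]))
print("closest approximation:", best)
```

With these numbers the uniform candidate costs 0.25 extra bits per sample and the skewed one about 0.05, so the skewed candidate is the better approximation. Neither value is “good” in isolation. The comparison between them is what carries meaning.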
