Databricks List Files In S3 Bucket

I'm trying to generate a list of all S3 files in a bucket/folder. There are usually on the order of millions of files in the folder, and new data arrives in this bucket in near real time via an S3 bucket sync.

Databricks provides several APIs for listing files in cloud object storage. You can list files on a distributed file system (DBFS, S3, or HDFS) using `%fs` commands, and you can use the Databricks Utilities (`dbutils`) to work with files and object storage efficiently. You can also use the Hadoop API to access files on S3; Spark uses it as well. Access to the S3 buckets themselves can be granted using instance profiles.

This article explains how to connect to AWS S3 from Databricks; most of its examples focus on using volumes. For this example, we are using data files stored …
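As a minimal sketch of the `dbutils` route, the Python helper below walks a folder and yields every file path beneath it. It assumes `dbutils.fs.ls` behaves as on current Databricks runtimes (each entry has `.path` and `.name`, and directory names end in `/`). The listing function is passed in as a parameter so the traversal can be tried outside a cluster; the bucket and folder names in the usage note are hypothetical.

```python
from typing import Any, Callable, Iterator, List


def list_files_recursive(root: str, ls: Callable[[str], List[Any]]) -> Iterator[str]:
    """Yield the full path of every file under `root`, descending into subfolders.

    `ls` is assumed to behave like dbutils.fs.ls: it returns entries that have
    `.path` and `.name` attributes, and directory names end with "/".
    """
    pending = [root]  # folders still to be listed (explicit stack, no recursion)
    while pending:
        folder = pending.pop()
        for entry in ls(folder):
            if entry.name.endswith("/"):
                pending.append(entry.path)  # subfolder: list it on a later iteration
            else:
                yield entry.path  # regular file: emit its full path
```

On a cluster this would be called as `list(list_files_recursive("s3://my-bucket/my-folder/", dbutils.fs.ls))`. An explicit stack is used instead of recursion so deeply nested folders cannot hit Python's recursion limit; every `ls` call still runs on the driver, so with millions of files this is likely to be slow.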

from serverless-stack.com

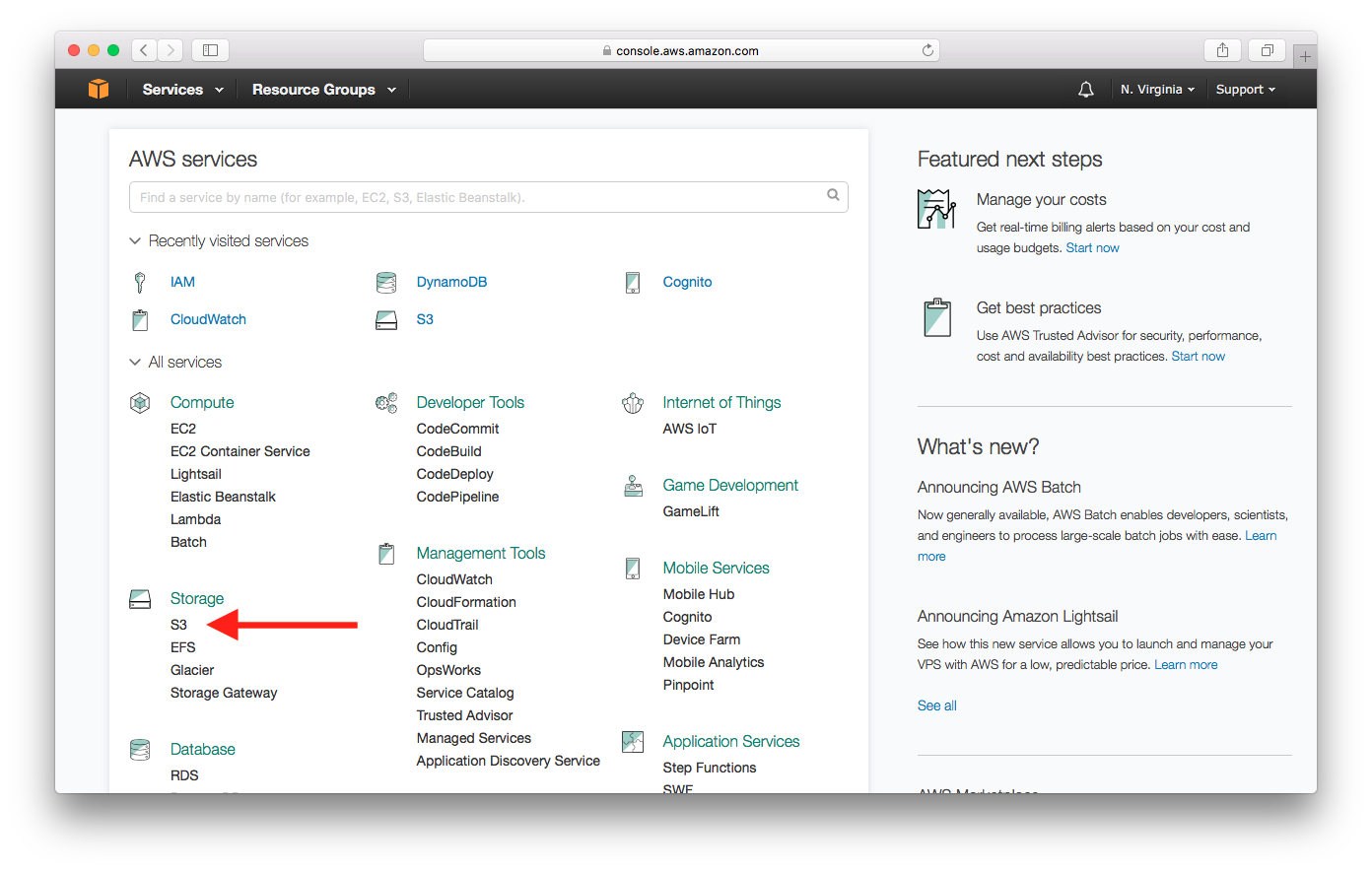

Create an S3 Bucket for File Uploads

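The Hadoop API route mentioned in the introduction can be sketched in PySpark as follows. This is an illustration under assumptions, not the original answer's code: it reaches the JVM through the private `sparkContext._jvm` and `sparkContext._jsc` attributes, which may not be available on compute that runs through Spark Connect, and the `s3a://` URI in the usage note is a hypothetical example.

```python
from typing import Any, Iterator, Tuple


def drain_remote_iterator(it: Any) -> Iterator[Any]:
    """Turn a Hadoop RemoteIterator (hasNext()/next() via Py4J) into a Python generator."""
    while it.hasNext():
        yield it.next()


def list_with_hadoop(spark: Any, uri: str) -> Iterator[Tuple[str, int]]:
    """Yield (path, size_in_bytes) for every file under `uri`, recursively.

    Goes through the Hadoop FileSystem API on the JVM, the same layer Spark
    uses to read the files. Relies on private PySpark attributes (_jvm, _jsc).
    """
    sc = spark.sparkContext
    hadoop_conf = sc._jsc.hadoopConfiguration()
    path = sc._jvm.org.apache.hadoop.fs.Path(uri)
    fs = path.getFileSystem(hadoop_conf)  # resolves the filesystem for the URI scheme
    # listFiles(path, recursive=True) returns a RemoteIterator<LocatedFileStatus> of files only
    for status in drain_remote_iterator(fs.listFiles(path, True)):
        yield status.getPath().toString(), status.getLen()
```

Usage would look like `for path, size in list_with_hadoop(spark, "s3a://my-bucket/my-folder/"): ...`. Because results stream through the `RemoteIterator`, the caller can process or write out paths as they arrive instead of holding one huge Python list.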

From pronteff.com

How does Angular configuration for Amazon S3 buckets works?

From www.databricks.com

How to Use the Bucket Brigade to Secure Your Public AWS S3 Buckets

From manuals.supernaeyeglass.com

S3 Storage Bucket Configurations Options, Operations and Settings

From awesomeopensource.com

S3 Bucket Loader

From databricks.com

Using AWS Lambda with Databricks for ETL Automation and ML Model

From hevodata.com

Databricks S3 Integration: 3 Easy Steps

From exomvtrag.blob.core.windows.net

List All Files In S3 Bucket Aws Cli at Donald McDonald blog

From www.youtube.com

How to Mount or Connect your AWS S3 Bucket in Databricks | YouTube

From www.twilio.com

How to Store and Display Media Files Using Python and Amazon S3 Buckets

From docs.aws.amazon.com

Naming S3 buckets in your data layers | AWS Prescriptive Guidance

From fyozctcso.blob.core.windows.net

How To List Files In S3 Bucket Python at Jessica Garcia blog

From binaryguy.tech

Quickest Ways to List Files in S3 Bucket

From www.youtube.com

Databricks Mounts | Mount your AWS S3 bucket to Databricks | YouTube

From wdevelop.com

AWS how to get a number of files in S3 bucket | web developer blog

From www.radishlogic.com

How to upload a file to S3 Bucket using boto3 and Python | Radish Logic

From exojrklgq.blob.core.windows.net

List Number Of Objects In S3 Bucket at Todd Hancock blog

From www.geeksforgeeks.org

How To Store Data in a S3 Bucket?

From www.youtube.com

Databricks File System (DBFS) overview in Azure Databricks explained in

From aws.amazon.com

What’s in your S3 Bucket? Using machine learning to quickly visualize

From grabngoinfo.com

Databricks Mount To AWS S3 And Import Data | Grab N Go Info

From learn.microsoft.com

Want to pull files from nested S3 bucket folders and want to save them

From www.mssqltips.com

Databricks Unity Catalog and Volumes Step-by-Step Guide

From www.howtoforge.com

How to configure event notifications in S3 Bucket on AWS

From towardsdatascience.com

How I connect an S3 bucket to a Databricks notebook to do analytics

From stackoverflow.com

databricks load file from s3 bucket path parameter | Stack Overflow

From docs.tooljet.com

Upload and Download Files on AWS S3 Bucket | ToolJet

From www.youtube.com

How to Create S3 Bucket in AWS Step by Step | Tricknology | YouTube

From sst.dev

Create an S3 Bucket for File Uploads

From www.techdevpillar.com

How to list files in S3 bucket with AWS CLI and python | Tech Dev Pillar

From www.oceanproperty.co.th

Listing All AWS S3 Objects In A Bucket Using Java | Baeldung
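Outside Spark, the bucket/folder from the introduction can also be listed directly with the AWS SDK. This is a swapped-in alternative, not one of the Databricks APIs described above, and a minimal sketch assuming `boto3` is installed and credentials are available (for example, from the cluster's instance profile). S3's ListObjectsV2 returns at most 1,000 keys per request, so a folder with millions of files needs the continuation token followed across many requests, which is what this helper does. The client is passed in, so the function has no import-time dependency on `boto3`.

```python
from typing import Any, Dict, Iterator


def iter_s3_objects(client: Any, bucket: str, prefix: str = "") -> Iterator[Dict[str, Any]]:
    """Yield every object summary under `prefix`, following ListObjectsV2 pagination.

    `client` is expected to be a boto3 S3 client (or anything with the same
    list_objects_v2 method). Each summary dict includes keys such as "Key" and "Size".
    """
    request = {"Bucket": bucket, "Prefix": prefix}
    while True:
        response = client.list_objects_v2(**request)
        yield from response.get("Contents", [])  # "Contents" is absent when nothing matches
        if not response.get("IsTruncated"):
            break  # last page reached
        request["ContinuationToken"] = response["NextContinuationToken"]
```

A hypothetical call: `import boto3`, then `for obj in iter_s3_objects(boto3.client("s3"), "my-bucket", "my-folder/"): print(obj["Key"], obj["Size"])`. The built-in paginator, `client.get_paginator("list_objects_v2")`, handles the same token bookkeeping; the explicit loop just makes it visible.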