PySpark RDD Map Reduce

`map` and `reduce` are methods of the RDD class, whose interface is similar to that of Scala collections. What you pass to these methods are functions. This PySpark RDD tutorial will help you understand what an RDD (Resilient Distributed Dataset) is, its advantages, and how to create and use one. PySpark can also read any Hadoop InputFormat or write any Hadoop OutputFormat, for both the 'new' and 'old' Hadoop MapReduce APIs.

`reduce(f: Callable[[T, T], T]) → T` reduces the elements of this RDD using the specified commutative and associative binary operator. The Spark RDD `reduce()` aggregate action is used to calculate the min, max, and total of the elements in a dataset.
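The requirement that the operator be commutative and associative is easier to see with a small experiment. The sketch below is plain Python, not PySpark: it simulates partitions with ordinary lists, and `partitioned_reduce`, `split_a`, and `split_b` are hypothetical names introduced only for illustration. It assumes each partition is reduced on its own and the partial results are then combined, which is a simplification of what a cluster does.

```python
from functools import reduce
from operator import add


def partitioned_reduce(partitions, f):
    """Reduce each non-empty partition, then combine the partial results.

    Hypothetical helper: a local stand-in for a distributed reduce.
    """
    partials = [reduce(f, part) for part in partitions if part]
    return reduce(f, partials)


data = [3, 1, 4, 1, 5, 9, 2, 6]

# Two different ways the same data might be split into partitions.
split_a = [data[:3], data[3:]]
split_b = [data[:5], data[5:7], data[7:]]

# "map" step: apply a function to every element, partition by partition.
squares = [[x * x for x in part] for part in split_a]

# "reduce" step with commutative, associative operators:
# the result does not depend on how the data was partitioned.
total_a = partitioned_reduce(split_a, add)
total_b = partitioned_reduce(split_b, add)
minimum = partitioned_reduce(split_a, min)
maximum = partitioned_reduce(split_b, max)
sum_of_squares = partitioned_reduce(squares, add)

# Subtraction is neither commutative nor associative,
# so the result changes with the partitioning.
sub_a = partitioned_reduce(split_a, lambda x, y: x - y)
sub_b = partitioned_reduce(split_b, lambda x, y: x - y)

print(total_a, total_b, minimum, maximum, sum_of_squares)
print(sub_a, sub_b)
```

With a live `SparkContext` (assumed here to be named `sc`), the analogous calls would be along the lines of `sc.parallelize(data).map(lambda x: x * x).reduce(add)`. Because the partitioning is chosen by Spark rather than by the caller, an operator like subtraction can give results that vary from run to run.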

From blog.csdn.net


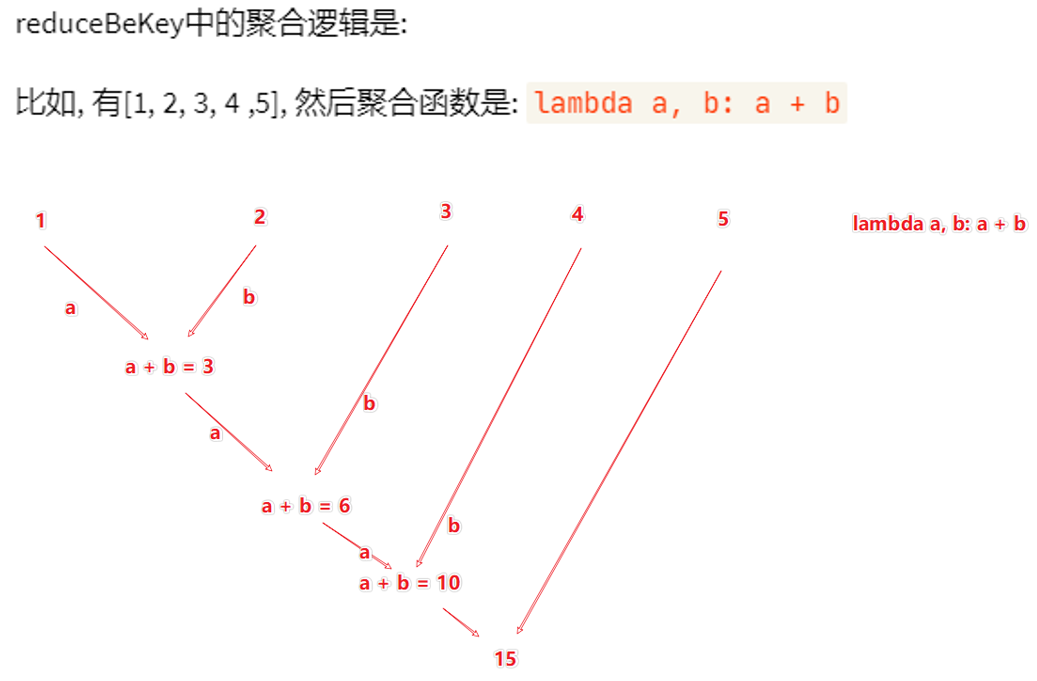

Python PySpark case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter


From blog.csdn.net

PySpark reduce and reduceByKey usage (CSDN blog)

From legiit.com

Big Data, Map Reduce And PySpark Using Python Legiit

From www.projectpro.io

PySpark RDD Cheat Sheet A Comprehensive Guide

From blog.csdn.net

[Spark] Converting between and using PySpark RDDs and DataFrames: dataframe.rdd.map in PySpark (CSDN blog)

From legiit.com

Big Data, Map Reduce And PySpark Using Python Legiit

From blog.csdn.net

Python PySpark case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter

From legiit.com

Big Data, Map Reduce And PySpark Using Python Legiit

From legiit.com

Big Data, Map Reduce And PySpark Using Python Legiit

From legiit.com

Big Data, Map Reduce And PySpark Using Python Legiit

From blog.csdn.net

PySpark RDD groupBy, groupByKey, cogroup, and groupWith usage

From www.youtube.com

Pyspark RDD Operations Actions in Pyspark RDD Fold vs Reduce Glom

From zhuanlan.zhihu.com

PySpark in Action 17: Extending PySpark with Python: RDDs and user-defined functions (1) (Zhihu)

From sparkbyexamples.com

PySpark RDD Tutorial Learn with Examples Spark By {Examples}

From sparkbyexamples.com

PySpark Create RDD with Examples Spark by {Examples}

From sparkbyexamples.com

Convert PySpark RDD to DataFrame Spark By {Examples}

From www.javatpoint.com

PySpark RDD javatpoint

From www.youtube.com

How to use map RDD transformation in PySpark PySpark 101 Part 3

From blog.csdn.net

[Python] PySpark data computation ① (RDD map method, RDD map syntax, passing a regular function, passing a lambda

From www.youtube.com

Practical RDD action reduce in PySpark using Jupyter PySpark 101

From zhuanlan.zhihu.com

Detailed introduction to PySpark Transformation/Action operators (Zhihu)

From www.analyticsvidhya.com

Create RDD in Apache Spark using Pyspark Analytics Vidhya

From www.vrogue.co

Introduction To Big Data Using Pyspark Map Filter Reduce In Python Vrogue

From www.analyticsvidhya.com

Spark Transformations and Actions On RDD

From blog.csdn.net

PySpark data analysis basics: the core dataset RDD, its principles and operations explained in one article (1) (CSDN blog)

From scales.arabpsychology.com

PySpark Convert RDD To DataFrame (With Example)

From blog.csdn.net

PySpark RDD reduce, reduceByKey, and reduceByKeyLocally usage (CSDN blog)

From blog.csdn.net

PySpark RDD reduce, reduceByKey, and reduceByKeyLocally usage (CSDN blog)

From blog.csdn.net

PySpark map: map in PySpark (CSDN blog)

From medium.com

Pyspark RDD. Resilient Distributed Datasets (RDDs)… by Muttineni Sai

From ittutorial.org

PySpark RDD Example IT Tutorial

From blog.csdn.net

Python PySpark case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter

From blog.csdn.net

Python PySpark case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter

From blog.csdn.net

Python PySpark case study: Spark introduction, library installation, programming model, RDD objects, flatMap, reduceByKey, filter

From github.com

GitHub devjey/pysparkmapreducealgorithm An algorithm to help map

From data-flair.training

PySpark RDD With Operations and Commands DataFlair