How To Do Data Profiling In PySpark

Spark has become one of the most widely used and adopted engines in the data community, and it provides a variety of APIs for working with data. To avoid data quality problems, we often use data profiling and data validation techniques. Data profiling gives us statistics about different … In a Python environment, the PySpark API is a great tool for a variety of data quality checks. The implementation is based on built-in functions and data structures provided by Python/PySpark to perform aggregation, summarization, filtering, distribution analysis, regex matches, etc. With support for Spark DataFrames in addition to pandas DataFrames, this new release allows …

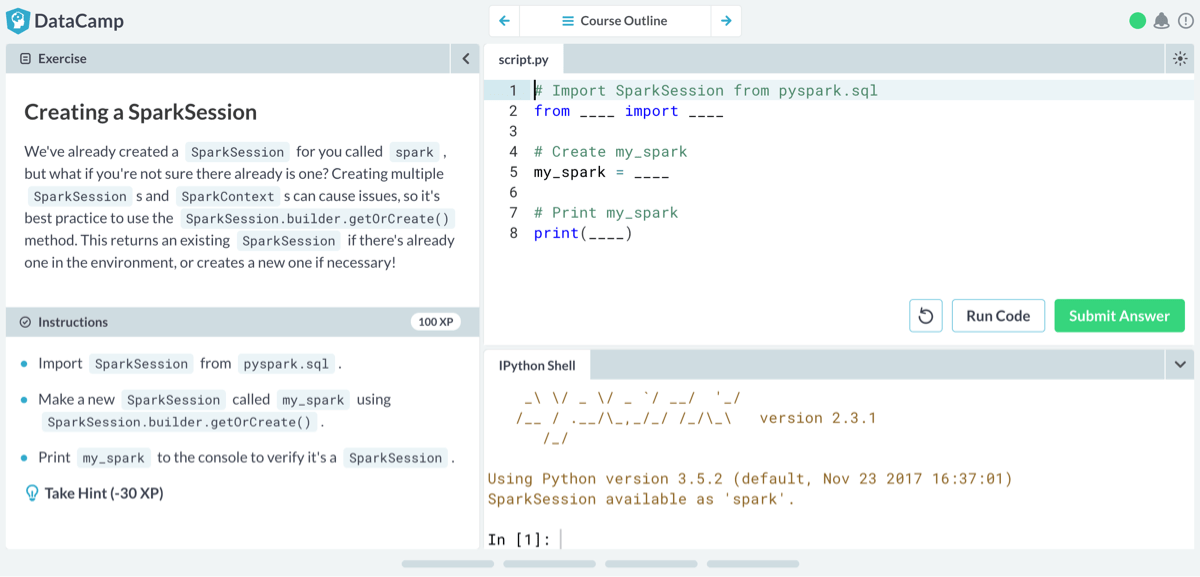

From www.datacamp.com


New Track Big Data with PySpark DataCamp


From stackoverflow.com

dataframe Data Profiling using Pyspark Stack Overflow

From pub.towardsai.net

Pyspark MLlib Classification using Pyspark ML by Muttineni Sai

From www.slideteam.net

SQL Server Data Profiling Dashboard Indicating Business Intelligence

From github.com

GitHub

From www.globalsqa.com

PySpark Cheat Sheet GlobalSQA

From www.javatpoint.com

PySpark RDD javatpoint

From learn.microsoft.com

Data profiling on azure synapse using pyspark Microsoft Q&A

From www.reddit.com

A PySpark Schema Generator from JSON r/dataengineering

From www.programmingfunda.com

How to Change DataType of Column in PySpark DataFrame

From www.vrogue.co

Preprocessing Bigquery Data With Pyspark On Dataproc vrogue.co

From docs.oracle.com

Exercise 3 Machine Learning with PySpark

From www.programmingfunda.com

How to read CSV files using PySpark » Programming Funda

From www.freecodecamp.org

How to Use PySpark for Data Processing and Machine Learning

From www.youtube.com

Introduction to PySpark Data Manipulation Basics YouTube

From www.databricks.com

Memory Profiling in PySpark Databricks Blog

From sparkbyexamples.com

PySpark Create DataFrame with Examples Spark By {Examples}

From sparkbyexamples.com

PySpark withColumn() Usage with Examples Spark by {Examples}

From blog.ditullio.fr

Quick setup for PySpark with IPython notebook Nico's Blog

From kuntalganguly.blogspot.com

Big Data Analytics and Machine Learning Configure IPython Notebook

From data-flair.training

Pyspark Profiler Methods and Functions DataFlair

From www.linkedin.com

Data Profiling in PySpark A Practical Guide

From www.oreilly.com

1. Introduction to Spark and PySpark Data Algorithms with Spark [Book]

From medium.com

How does PySpark work? — step by step (with pictures)

From www.askpython.com

Print Data Using PySpark A Complete Guide AskPython

From www.scientiamobile.com

WURFL Microservice Pyspark Device Detection Tutorial ScientiaMobile

From www.databricks.com

How to Profile PySpark Databricks Blog

From docs.whylabs.ai

Apache Spark WhyLabs Documentation

From webframes.org

How To Create Dataframe In Pyspark From Csv

From www.programmingfunda.com

PySpark DataFrame Tutorial for Beginners » Programming Funda

From www.vrogue.co

Steps To Read Csv File In Pyspark Learn Easy Steps www.vrogue.co

From www.linkedin.com

Data Profiling

From medium.com

How to do Data Profiling/Quality Check on Data in Spark — Big Data(With