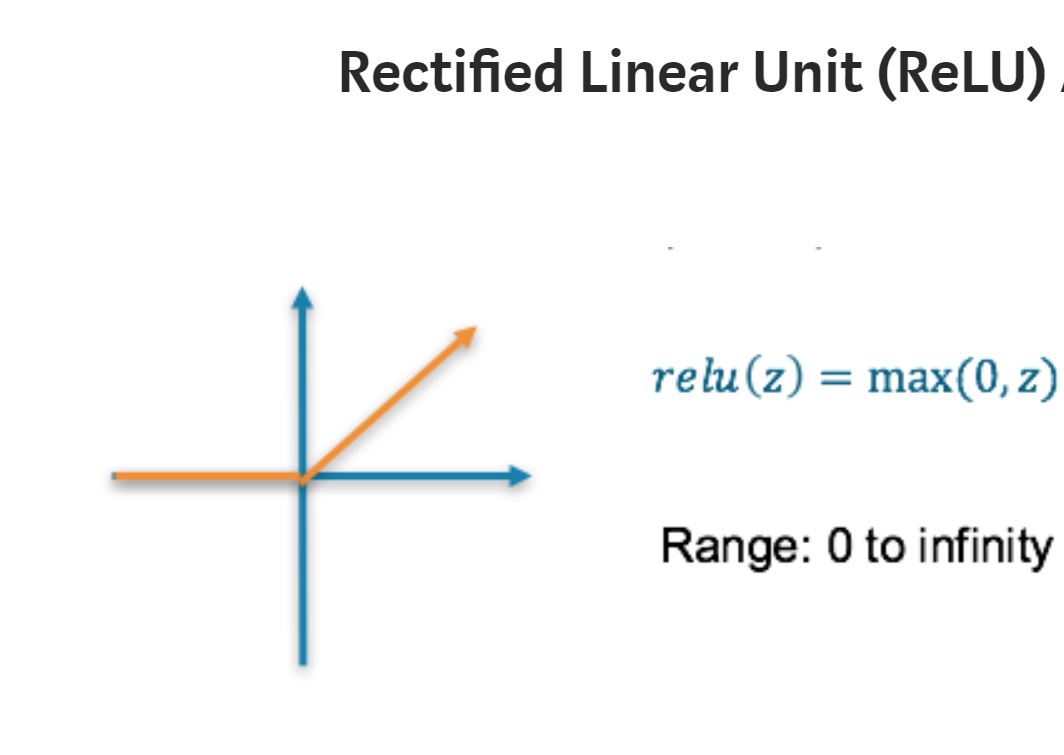

Rectified Linear Meaning

An activation function, in the context of neural networks, is a mathematical function applied to the output of a neuron. The identity function f(x)=x is a basic linear activation, unbounded in its range. The rectified linear unit (ReLU) is an activation function commonly used in deep learning models. It is piecewise linear: it outputs the input directly if the input is positive and outputs zero otherwise, so it is linear in the positive dimension but zero in the negative dimension. The kink at zero is the source of the function's nonlinearity, which is how ReLU introduces nonlinearity into a deep learning model while helping to mitigate the vanishing gradients issue. ReLU has become the default activation function for many types of neural networks because a model that uses it is easier to train and often achieves better performance.
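The piecewise definition above can be sketched in a few lines of plain Python. This is a minimal illustration, not taken from any of the sources listed on this page: the function names `relu`, `relu_grad` and `sigmoid_grad` are hypothetical, and setting the slope to 0 exactly at the kink is a common convention assumed here. The sigmoid slope is included only to contrast how quickly it shrinks for large inputs, which is the usual intuition behind the vanishing gradients issue.

```python
import math


def relu(x: float) -> float:
    """Return the input if it is positive, otherwise zero."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Slope of ReLU: 1 for positive inputs, 0 for negative inputs.

    The slope is undefined at exactly x == 0; returning 0 there is an
    assumed convention for this sketch.
    """
    return 1.0 if x > 0 else 0.0


def sigmoid_grad(x: float) -> float:
    """Slope of the logistic sigmoid, shown only for comparison."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


if __name__ == "__main__":
    for x in [-2.0, -0.5, 0.0, 0.5, 2.0, 10.0]:
        print(x, relu(x), relu_grad(x), sigmoid_grad(x))
```

For positive inputs the ReLU slope stays at 1 no matter how large the input is, while the sigmoid slope never exceeds 0.25 and decays toward zero as the input grows.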

from www.vrogue.co

Rectified Linear Unit Relu Activation Function Deep L vrogue.co

From www.researchgate.net

Rectified linear unit illustration Download Scientific Diagram

From www.oreilly.com

Rectified Linear Unit Neural Networks with R [Book]

From www.researchgate.net

Activation function (ReLu). ReLu Rectified Linear Activation

From www.practicalserver.net

Write a program to display a graph for ReLU (Rectified Linear Unit

From www.researchgate.net

The Rectified Linear Unit (ReLU) activation function Download

From www.slideserve.com

PPT Convolutional Networks PowerPoint Presentation, free download

From www.slideteam.net

Ann Relu Rectified Linear Unit Activation Function Ppt Professional

From www.slideteam.net

Deep Learning Function Rectified Linear Units Relu Training Ppt

From www.researchgate.net

Rectified linear unit as activation function Download Scientific Diagram

From deep.ai

Deep Learning using Rectified Linear Units (ReLU) DeepAI

From stackdiary.com

ReLU (Rectified Linear Unit) Glossary & Definition

From www.scribd.com

Rectified Linear Unit PDF

From www.researchgate.net

Approximation of Rectified Linear Unit Function Download Scientific

From morioh.com

Rectified Linear Unit (ReLU) Activation Function

From www.vrogue.co

Rectified Linear Unit Relu Activation Function Deep L vrogue.co

From www.researchgate.net

Rectified Linear Unit (ReLU) [72] Download Scientific Diagram

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function [16] Download

From machinelearningmastery.com

A Gentle Introduction to the Rectified Linear Unit (ReLU

From www.researchgate.net

Rectified Linear Unit v/s Leaky Rectified Linear Unit Download

From www.slideserve.com

PPT Lecture 2. Basic Neurons PowerPoint Presentation, free download

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function [16] Download

From www.youtube.com

Rectified Linear Unit(relu) Activation functions YouTube

From www.vrogue.co

Rectified Linear Unit Relu Introduction And Uses In M vrogue.co

From www.researchgate.net

Rectified linear unit (ReLU) activation function Download Scientific

From www.slideteam.net

Relu Rectified Linear Unit Activation Function Artificial Neural

From www.aiplusinfo.com

Rectified Linear Unit (ReLU) Introduction and Uses in Machine Learning

From www.researchgate.net

Functions including exponential linear unit (ELU), parametric rectified

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function Download Scientific

From www.researchgate.net

Leaky rectified linear unit (α = 0.1) Download Scientific Diagram

From www.researchgate.net

2 Rectified Linear Unit function Download Scientific Diagram

From www.mplsvpn.info

Rectified Linear Unit Activation Function In Deep Learning MPLSVPN

From www.youtube.com

Tutorial 10 Activation Functions Rectified Linear Unit(relu) and Leaky

From www.gabormelli.com

Sshaped Rectified Linear Activation Function GMRKB

From www.researchgate.net

Residual connection unit. ReLU rectified linear units. Download

From www.youtube.com

Leaky ReLU Activation Function Leaky Rectified Linear Unit function