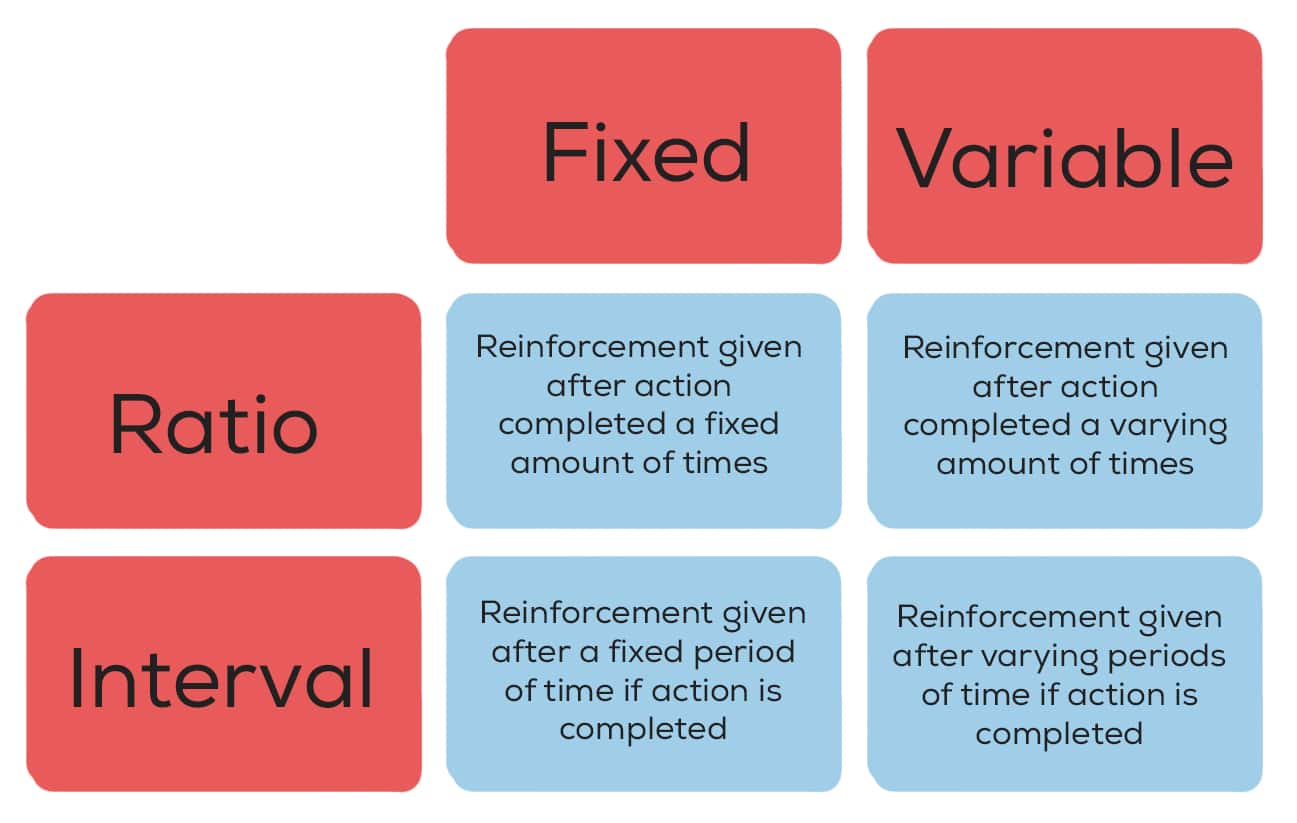

Example Of A Variable Ratio Reinforcement Schedule. Schedules of reinforcement are rules that control the timing and frequency of reinforcement delivery in operant conditioning. A reinforcement schedule governs the delivery of a reward (reinforcer) to strengthen a behavior (i.e., make it occur more frequently). Four basic schedules are commonly distinguished: fixed ratio, variable ratio, fixed interval, and variable interval. The variable ratio schedule provides reinforcement after a variable number of responses, and it is one way to schedule reinforcements in order to increase the likelihood of desired behaviors. Because it is unpredictable, it yields high and steady response rates, with little if any pause after reinforcement (e.g., a gambler, for whom the reinforcement, like the jackpot, cannot be predicted). A fixed ratio schedule, by contrast, is predictable.
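The contrast between a fixed ratio and a variable ratio schedule can be sketched as a small simulation. This is only an illustration under stated assumptions, not a model taken from any of the sources listed on this page: the function names (`fixed_ratio`, `variable_ratio`, `run_session`), the uniform draw of the response requirement, and the average of 5 responses per reinforcer are all hypothetical choices.

```python
import random

def fixed_ratio(n):
    """Yield the number of responses required per reinforcer (always n)."""
    while True:
        yield n

def variable_ratio(mean, rng):
    """Yield a varying response requirement that averages `mean`.

    Assumption: requirements are drawn uniformly from 1..(2*mean - 1).
    """
    while True:
        yield rng.randint(1, 2 * mean - 1)

def run_session(requirements, total_responses):
    """Return the 1-based response numbers on which a reinforcer is delivered."""
    reinforced_at = []
    needed = next(requirements)
    count = 0
    for response in range(1, total_responses + 1):
        count += 1
        if count >= needed:
            reinforced_at.append(response)
            count = 0                    # start counting toward the next reinforcer
            needed = next(requirements)  # new (possibly different) requirement
    return reinforced_at

rng = random.Random(0)  # seeded only so repeated runs give the same sequence
fr = run_session(fixed_ratio(5), 50)
vr = run_session(variable_ratio(5, rng), 50)
print("FR-5 reinforced on responses:", fr)
print("VR-5 reinforced on responses:", vr)
```

Under the fixed ratio rule the reinforcer always lands on every fifth response, so the learner can anticipate it. Under the variable ratio rule the gap between reinforcers changes from one to the next, which is the unpredictability the paragraph above associates with high, steady responding.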

From practicalpie.com

Variable Interval Reinforcement Schedule (Examples) Practical Psychology

From asdtoddler.fpg.unc.edu

Step 1.7 Select a schedule of reinforcement

From lasztim80materialdb.z13.web.core.windows.net

Schedules Of Reinforcement Worksheets

From www.verywellmind.com

Variable-Ratio Schedule Characteristics and Examples

From www.slideserve.com

PPT Unit 6 Learning PowerPoint Presentation, free download ID1671019

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download

From helpfulprofessor.com

15 Reinforcement Schedule Examples (Of all Types) (2024)

From techlingsendest.weebly.com

Real-Life Examples Of Schedules Of Reinforcement Extra Quality

From www.verywellmind.com

How Schedules of Reinforcement Work

From study.com

Variable Ratio Reinforcement Schedule & Examples Lesson

From helpfulprofessor.com

15 Reinforcement Schedule Examples (Of all Types) (2024)

From mavink.com

Variable Ratio Schedule Of Reinforcement

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download

From www.slideserve.com

PPT Edward L. Thorndike PowerPoint Presentation, free download ID

From helpfulprofessor.com

15 Variable Ratio Schedule Examples (2024)

From www.slideshare.net

schedules of reinforcement

From studylib.net

Schedule of Reinforcement Definition and Examples Effects Fixed

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download

From www.youtube.com

Schedules of Reinforcement VCE Psychology YouTube

From www.youtube.com

What is Variable Ratio Reinforcement Schedule? Reinforcement Learning

From www.crossrivertherapy.com

Variable Ratio Schedule & Examples

From edgelimits.com

Schedule of Reinforcement (Behavioral psychology)

From www.slideserve.com

PPT Behaviorism PowerPoint Presentation, free download ID2152777

From ar.inspiredpencil.com

Schedules Of Reinforcement

From www.slideserve.com

PPT Schedules of Reinforcement Chapter 13 PowerPoint Presentation

From www.youtube.com

What are the Reinforcement Schedules in Operant Conditioning? YouTube

From parentgraph.org

Schedules of Reinforcement What you need to know Parent Graph

From mavink.com

Variable Ratio Schedule Of Reinforcement

From www.abatherapistjobs.com

Schedules of Reinforcement

From www.abatherapistjobs.com

Schedules of Reinforcement

From practicalpie.com

Variable Interval Reinforcement Schedule (Examples) Practical Psychology

From www.simplypsychology.org

Schedules of Reinforcement in Psychology (Examples)

From helpfulprofessor.com

15 Reinforcement Schedule Examples (Of all Types) (2024)

From www.slideserve.com

PPT Reinforcement Schedules PowerPoint Presentation, free download