Elasticsearch Keyword Tokenizer

A tokenizer is an essential component of an analyzer: it receives a stream of characters as input and breaks it down into individual tokens. The keyword tokenizer is a “noop” tokenizer that accepts whatever text it is given and outputs the exact same text as a single term. It can still be combined with token filters such as lowercase, so the input stays one term but is normalized. The word_delimiter filter was designed to remove punctuation from complex identifiers such as product IDs or part numbers; for these use cases, we recommend using the word_delimiter filter with the keyword tokenizer. Avoid using the word_delimiter filter to split hyphenated words such as wi-fi.

I am trying to build an autocomplete function with AngularJS and Elasticsearch on a given field, for example countryname. It shouldn't matter either way for aggregations once you've …
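As a rough sketch of the keyword-plus-lowercase combination, the Python below builds the index settings body for a custom analyzer that uses the keyword tokenizer followed by the lowercase filter, and simulates its output locally. The analyzer name `keyword_lowercase` and the helper functions are hypothetical, and the simulation uses Python's `str.lower`, which is only an approximation of the real filter.

```python
import json

# Hypothetical index settings: a custom analyzer (the name "keyword_lowercase"
# is an assumption) that keeps the whole input as one term, then lowercases it.
INDEX_SETTINGS = {
    "settings": {
        "analysis": {
            "analyzer": {
                "keyword_lowercase": {
                    "type": "custom",
                    "tokenizer": "keyword",
                    "filter": ["lowercase"],
                }
            }
        }
    }
}


def keyword_tokenize(text: str) -> list[str]:
    """Local stand-in for the keyword tokenizer: the entire input is one term."""
    return [text]


def lowercase_filter(tokens: list[str]) -> list[str]:
    """Local stand-in for the lowercase token filter (uses Python's str.lower)."""
    return [token.lower() for token in tokens]


def simulate_keyword_lowercase(text: str) -> list[str]:
    """Approximate the analyzer chain defined in INDEX_SETTINGS, without a cluster."""
    return lowercase_filter(keyword_tokenize(text))


if __name__ == "__main__":
    # The JSON body you could send when creating the index.
    print(json.dumps(INDEX_SETTINGS, indent=2))
    # One term, lowercased, with the inner space preserved.
    print(simulate_keyword_lowercase("United States"))
```

Against a real cluster, the `_analyze` API accepts the same `tokenizer` and `filter` values plus a `text` field, which is a quick way to confirm that the output really is a single lowercased term.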

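The product-ID use case can be sketched the same way. The Python below defines settings that pair the keyword tokenizer with the word_delimiter filter, plus a deliberately simplified local approximation of the filter's default splitting. The analyzer name `product_id` and the helpers `split_identifier` and `simulate_product_id` are hypothetical; the approximation is ASCII-only and ignores options such as `preserve_original`, the `catenate_*` settings and English possessive stripping, so a real cluster may produce different tokens for some inputs.

```python
import re

# Hypothetical analyzer for part numbers (the name "product_id" is an
# assumption): keep the whole ID as one token, then split it with word_delimiter.
PRODUCT_ID_SETTINGS = {
    "settings": {
        "analysis": {
            "analyzer": {
                "product_id": {
                    "type": "custom",
                    "tokenizer": "keyword",
                    "filter": ["word_delimiter"],
                }
            }
        }
    }
}

# Simplified, ASCII-only approximation of word_delimiter's default parts:
# - a run of uppercase letters optionally followed by lowercase ("XL", "Auto"),
# - a run of lowercase letters (covers the "i" in "iPhone"),
# - a run of digits.
# Anything else (hyphens, plus signs, ...) acts as a delimiter, and a new part
# starts where a lowercase letter meets an uppercase one or letters meet digits.
_PART = re.compile(r"[A-Z]+[a-z]*|[a-z]+|[0-9]+")


def split_identifier(token: str) -> list[str]:
    """Split one token into word and number parts (simplified)."""
    return _PART.findall(token)


def simulate_product_id(text: str) -> list[str]:
    """Approximate keyword tokenizer + word_delimiter, without a cluster."""
    tokens = [text]  # keyword tokenizer: the whole input is a single token
    return [part for token in tokens for part in split_identifier(token)]


if __name__ == "__main__":
    print(simulate_product_id("PowerShot-SD500"))
```

Because the keyword tokenizer leaves the punctuation in place, the filter still sees every delimiter it needs to split on.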
From blog.csdn.net

Learning and using ElasticSearch (Elasticsearch Chinese documentation), CSDN Blog

From underthehood.meltwater.com

How we upgraded an old, 3PB large, Elasticsearch cluster without …

From help.aliyun.com

How-to: TairSearch tokenizers, Tair (cloud-native in-memory database), Alibaba Cloud Help Center

From stackoverflow.com

elasticsearch How keyword type gets stored and analyzed in elastic

From 9to5answer.com

[Solved] ElasticSearch Analyzer and Tokenizer for Emails 9to5Answer

From geekdaxue.co

马士兵 (Ma Shibing) ElasticSearch 2. Scripts, the IK tokenizer and cluster deployment, “Java Study Notes”, Geek Docs

From www.devopsschool.com

Understanding Elasticsearch Keywords and Terminology

From opster.com

Elasticsearch Text Analyzers: Tokenizers, Standard Analyzers & Stopwords

From www.cnblogs.com

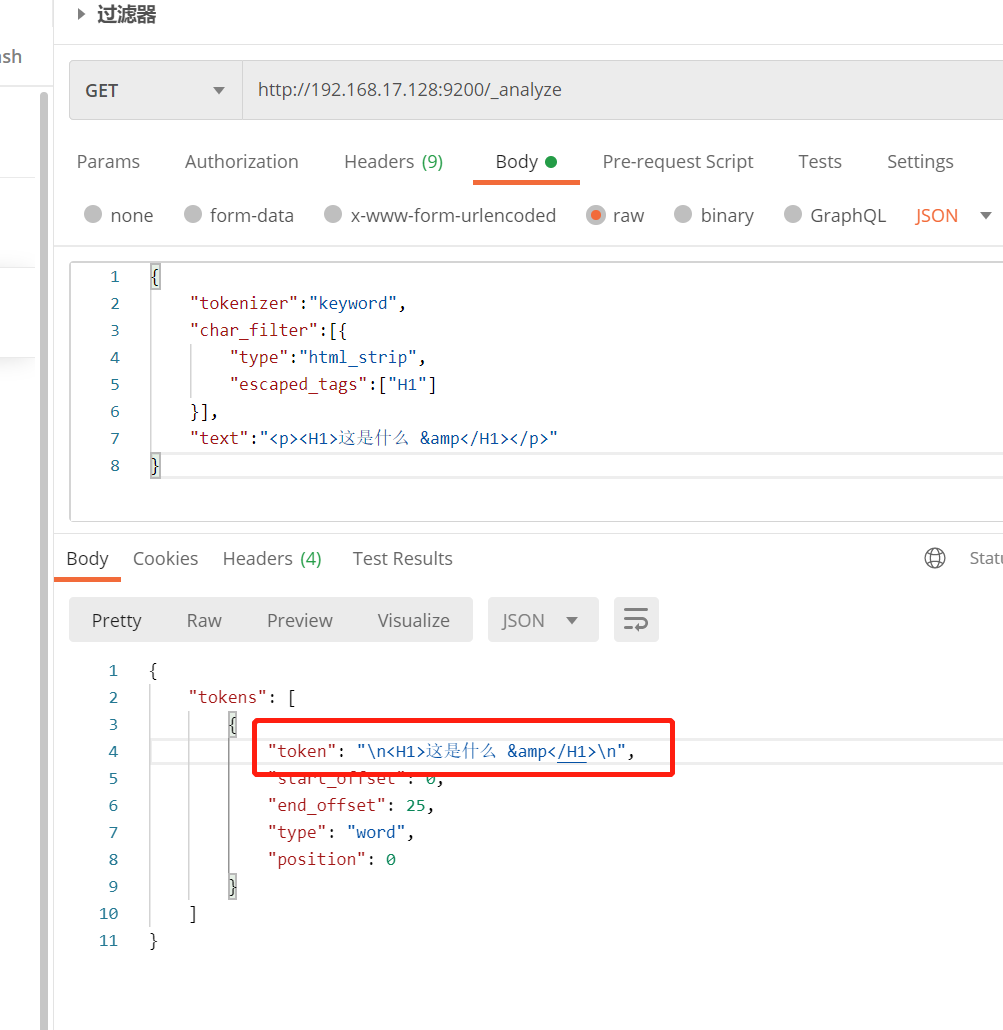

Elasticsearch analyzers: character filter, tokenizer, token filter, 孙龙程序员 (Sun Long, programmer), cnblogs

From geekdaxue.co

Elasticsearch (ES): Elasticsearch basics, “Knowledge Base”, Geek Docs

From smnh.me

Indexing and Searching Arbitrary JSON Data using Elasticsearch smnh

From bbs.huaweicloud.com

[Yugong Series] December 2022: Adding a Chinese tokenizer plugin to the Elasticsearch database (ELK), part 3, Huawei Cloud Community

From blog.csdn.net

ES (Elasticsearch) part 8: mapping and complex data types (elasticsearch8 tokenizer), CSDN Blog

From noti.st

Elasticsearch: You know, for Search

From www.thirdrocktechkno.com

Elasticsearch Meaning, Components and Use Cases Third Rock Techkno

From www.linkedin.com

Elasticsearch ProTips Part I: Sharding

From aqlu.gitbook.io

Lowercase Tokenizer Elasticsearch Reference

From www.atatus.com

Beginner's Guide to Elasticsearch API: Indexing and Searching Data

From stackoverflow.com

elasticsearch Elastic Search Keyword Stuffing and Scoring Stack …

From 9to5answer.com

[Solved] How to setup a tokenizer in elasticsearch 9to5Answer

From juejin.cn

[9 kinds] ElasticSearch analyzers explained: get it all in one article! by 博学谷狂野架构师 (Boxuegu Wild Architect), ElasticSearch tokenization, Juejin

From softjourn.com

Elasticsearch 101: Key Concepts, Benefits & Use Cases Softjourn

From www.nan.fyi

Rebuilding Babel: The Tokenizer

From blog.csdn.net

Analysis of how keyword and numeric types affect performance in Elasticsearch: which performs better, keyword or numeric types?

From viblo.asia

Elasticsearch 2: How Elasticsearch works, Viblo

From mystudylab.tistory.com

[Elasticsearch] 3. Index design (Analyzer, Tokenizer)

From www.wikitechy.com

elasticsearch analyzer elasticsearch analysis By Microsoft

From github.com

GitHub map4d/map4dtokenizerelasticsearch Map4D tokenizer

From stackoverflow.com

elasticsearch How to write a elastic search query to get the list of …

From medium.com

Simple NGram Tokenizer in Elasticsearch by Aong Wachi ConvoLab

From astconsulting.in

Implementing Custom Tokenizers in Elasticsearch