Torch Embedding Input. PyTorch provides the nn.Embedding module to create embedding layers. Here's a breakdown of what happens inside this module: nn.Embedding is a PyTorch layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. This mapping is done through an embedding matrix, which is a … The functional form is torch.nn.functional.embedding(input, weight, padding_idx=None, max_norm=None, norm_type=2.0, scale_grad_by_freq=False, …). Transformers most often take as input the sum of a content embedding (typically a token embedding) and a position embedding; for example, positions 1 to 128 represented as … A recurring question: "I need some clarity on how to correctly prepare inputs for different components of nn, mainly nn.Embedding, …"
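
As a rough illustration of the lookup described above, the sketch below builds a token embedding and a position embedding and adds them, the way a transformer input is often prepared. The sizes (vocab_size, max_len, dim), the example token ids, and the choice of index 0 as padding are assumptions made for this example and do not come from the snippets; positions are zero-based here, so "positions 1 to 128" would correspond to indices 0 to 127.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
vocab_size, max_len, dim = 1000, 128, 16

# Each layer owns a learnable weight matrix of shape (num_embeddings, embedding_dim).
token_emb = nn.Embedding(vocab_size, dim, padding_idx=0)
pos_emb = nn.Embedding(max_len, dim)

# Inputs are integer indices, shape (batch, seq_len); 0 is assumed to mean padding.
token_ids = torch.tensor([[5, 42, 7, 0],
                          [9, 3, 0, 0]])

# Module call: a row lookup into token_emb.weight, output shape (2, 4, 16).
tok = token_emb(token_ids)

# The functional form performs the same lookup given the weight explicitly.
same = F.embedding(token_ids, token_emb.weight, padding_idx=0)

# Position indices 0..seq_len-1, shape (1, seq_len), broadcast over the batch.
positions = torch.arange(token_ids.size(1)).unsqueeze(0)
x = tok + pos_emb(positions)

print(tok.shape, x.shape)
```

Every index passed in must lie in the range [0, num_embeddings), and the index tensor must have an integer dtype; the output has the input's shape with one extra trailing dimension of size embedding_dim.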

From zhuanlan.zhihu.com

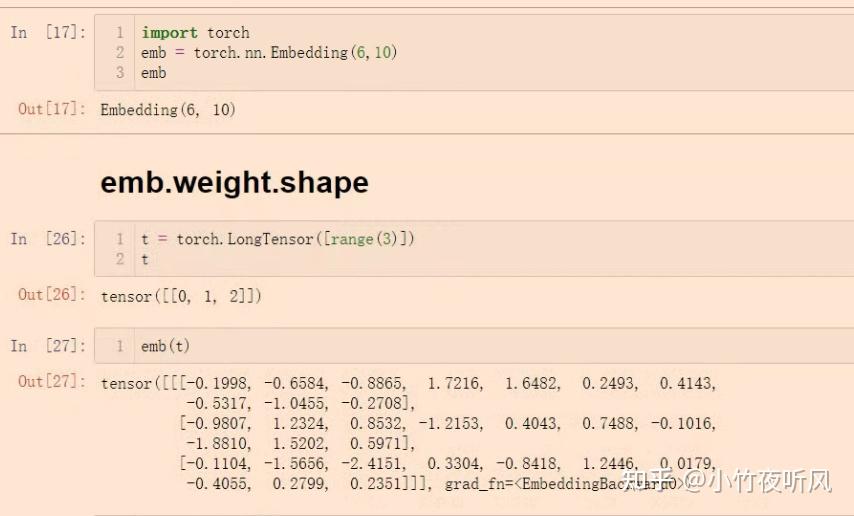

Usage of torch.nn.Embedding (Zhihu)

From blog.csdn.net

Examples of using the torch.reshape(input, shape) function (CSDN blog)

From colab.research.google.com

Google Colab

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers (Scaler Topics)

From blog.csdn.net

The Embedding object: embedding(input) reads the word vector of the input-th word (CSDN blog)

From github.com

GitHub: PyTorch implementation of some …

From blog.csdn.net

torch.nn.Embedding() parameters explained (CSDN blog)

From blog.csdn.net

PyTorch notes: torch.nn.Embedding (CSDN blog)

From exoxmgifz.blob.core.windows.net

Torch.embedding Source Code at David Allmon blog

From www.youtube.com

torch.nn.Embedding explained (+ Character-level language model) (YouTube)

From blog.51cto.com

[PyTorch Basics Tutorial 28] A brief discussion of torch.nn.embedding (51CTO blog)

From blog.csdn.net

How torch.nn.embedding works (CSDN blog)

From coderzcolumn.com

How to Use GloVe Word Embeddings With PyTorch Networks?

From blog.csdn.net

[Python functions] Illustrated usage of the torch.nn.Embedding function (CSDN blog)

From github.com

index out of range in self torch.embedding(weight, input, padding_idx …

From theaisummer.com

How Positional Embeddings work in Self-Attention (code in PyTorch) AI …

From zhuanlan.zhihu.com

No-brainer introduction to PyTorch series (1): nn.embedding (Zhihu)

From blog.csdn.net

torch.nn.Embedding parameters in detail: num_embeddings, embedding_dim (CSDN blog)

From stackoverflow.com

deep learning: Faster way to do multiple embeddings in PyTorch

From www.educba.com

PyTorch Embedding: Complete Guide on PyTorch Embedding

From www.youtube.com

torch.nn.Embedding: How embedding weights are updated in …

From t.zoukankan.com

How the embedding layer torch.nn.embedding is computed in PyTorch (走看看)

From velog.io

[Breath of Torch] 05 NLP Basic "Text to Tensor"

From aitechtogether.com

PyTorch review notes: usage of nn.Embedding() (AI技术聚合)

From discuss.pytorch.org

How does nn.Embedding work? (PyTorch Forums)

From ericpengshuai.github.io

[PyTorch] RNN (像我这样的人)

From blog.csdn.net

[PyTorch Basics Tutorial 28] A brief discussion of torch.nn.embedding (CSDN blog)

From github.com

GitHub CyberZHG/torchpositionembedding: Position embedding in PyTorch

From blog.csdn.net

Freezing torch.nn.Embedding() with a fixed initialization (CSDN blog)